The remark “We have a headache with Arrhenius” is attributed to Takehisa Okada, Senior General Manager of Sony Corporation, who made it when asked about Sony’s perspective on reliability prediction methods during a visit by a U.S. Japanese Technology Evaluation Center (JTEC) panel [1].

The thermal environment is an important consideration in the design of electronics. Temperatures that arise during the use of electronics can present safety concerns, influence performance, and affect overall product reliability. For electronic product reliability, existing standards have focused on parts and often relate part failure rate to steady-state temperature using an Arrhenius-type equation. This treatment has led to the rule of thumb that lowering the temperature by 10°C will double product reliability. Unfortunately, when this simple treatment of a complex interaction among material systems, chemical processes, and device physics is used in the design process, it can result in unreliable and costly products.
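The rule of thumb quietly assumes a particular activation energy. As a rough check, the sketch below assumes the simple Arrhenius form in which MTTF is proportional to exp(Ea/kT), and solves exp[(Ea/k)(1/T_low - 1/T_high)] = 2 for Ea. The function name and example temperatures are illustrative choices, not part of any standard.

```python
import math

# Boltzmann constant in eV/K
BOLTZMANN_EV_PER_K = 8.617e-5


def implied_activation_energy(t_low_c: float, delta_c: float = 10.0,
                              factor: float = 2.0) -> float:
    """Return the activation energy (eV) for which an Arrhenius model,
    MTTF proportional to exp(Ea / kT), predicts that cooling a part from
    (t_low_c + delta_c) to t_low_c multiplies its MTTF by `factor`.
    Assumes the simple Arrhenius form with a temperature-independent prefactor."""
    t_low = t_low_c + 273.15   # convert to kelvin
    t_high = t_low + delta_c
    return BOLTZMANN_EV_PER_K * math.log(factor) / (1.0 / t_low - 1.0 / t_high)


if __name__ == "__main__":
    for t in (25.0, 50.0, 100.0):
        ea = implied_activation_energy(t)
        print(f"{t + 10:5.1f} C -> {t:5.1f} C doubles MTTF only if Ea = {ea:.2f} eV")
```

Under these assumptions, the doubling holds only for an activation energy of about 0.55 eV near room temperature, rising to about 0.85 eV near 100°C. For a mechanism with any other activation energy, the rule of thumb over- or understates the benefit of cooling.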

In electronics, the Arrhenius equation has been used to model the influence of temperature on the mean time to failure (MTTF) of electronic devices, taking MTTF to be proportional to exp(Ea/kT), where Ea is the activation energy, k is Boltzmann’s constant, and T is the absolute temperature. The activation energy is related to the physical processes that give rise to failure (i.e., failure mechanisms). Failure mechanisms well suited to modeling with the Arrhenius equation include intermetallic growth, corrosion, metal migration, void formation, and dielectric breakdown. However, the activation energies for these failures in electronic devices can vary widely. At 60°C, an error of 0.1 eV in the activation energy can increase or decrease the mean time to failure by a factor of more than 30. In some cases, the failure mechanism will not occur at all unless the temperature exceeds a threshold temperature.
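The sensitivity quoted above can be checked with a few lines of arithmetic. This sketch assumes the simple Arrhenius form MTTF = A·exp(Ea/kT) with a fixed pre-exponential factor A, so that misjudging Ea by ΔEa scales the predicted MTTF by exp(ΔEa/kT). The helper name is hypothetical.

```python
import math

BOLTZMANN_EV_PER_K = 8.617e-5  # Boltzmann constant, eV/K


def mttf_error_factor(delta_ea_ev: float, temp_c: float) -> float:
    """Factor by which an Arrhenius MTTF estimate, MTTF = A * exp(Ea / kT),
    changes when Ea is off by delta_ea_ev at temp_c (A assumed fixed)."""
    kt = BOLTZMANN_EV_PER_K * (temp_c + 273.15)  # thermal energy kT in eV
    return math.exp(delta_ea_ev / kt)


if __name__ == "__main__":
    f = mttf_error_factor(0.1, 60.0)
    # Overestimating Ea inflates MTTF by f; underestimating it shrinks MTTF by 1/f.
    print(f"0.1 eV error at 60 C changes MTTF by a factor of {f:.1f}")
```

At 60°C, kT is about 0.0287 eV, so the factor is exp(0.1/0.0287), roughly 33, consistent with the “more than 30” figure.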

Reliability standards, such as MIL-HDBK-217, CNET, and Siemens’ SN29500, have been built on the Arrhenius equation and on the assumption that part reliability is the problem [2]. Over the years, engineers have seriously questioned these standards. In 1996, the United States Army issued a directive that called for the preclusion of MIL-HDBK-217 (and similar documents) from Army Requests for Proposals, citing that the standard “has been shown to be unreliable and its use can lead to erroneous and misleading reliability predictions” [3].

Even the preparing agency (Air Force Rome Laboratory) states that the 217 standard “is not intended to predict field reliability and, in general, does not do a very good job at it in an absolute sense” [4]. As for parts being the reliability problem, the past decade has seen a dramatic increase in the reliability of electronic devices, one that is challenging existing test methods.

In other words, failure of individual components is not necessarily the dominant risk to electronic product reliability. In many instances, faster-acting failure mechanisms, such as conductive filament formation, stress relaxation at contact interfaces, and package-to-board interconnect fatigue (for which the Arrhenius equation is not a viable model), are the dominant causes of failure in electronic products. These failure mechanisms may also be related to no-fault-found failures.

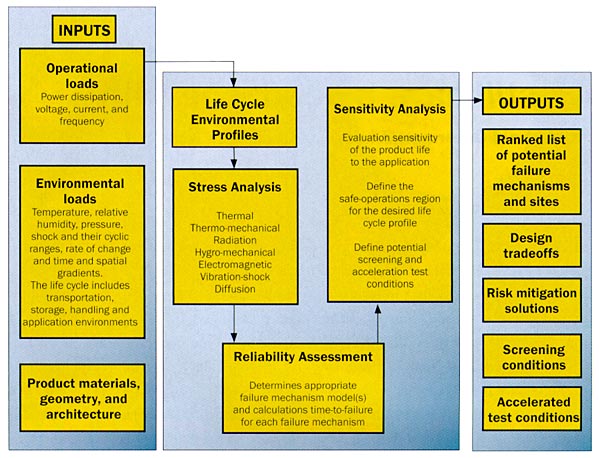

Figure 1 – Physics of failure process

While there has been a move toward performance-based reliability assessment, reliability estimates based on the steady-state Arrhenius equation are still ingrained in the development of electronics. For instance, regulatory organizations such as Underwriters Laboratories (in UL 991) refer to Military Handbook 217 for the computational investigation of critical components. In addition, reliability requirements formulated in terms of constant failure rates continue to lead electronic product developers to use the Arrhenius equation to calculate part failure rates. Further, testing and burn-in specifications for electronics are still based in part on the assumption that steady-state temperature is a leading failure driver.
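To make the last two points concrete, here is a minimal sketch of a constant-failure-rate prediction in which each part’s base rate is scaled by an Arrhenius temperature factor and the rates are summed. The part list, base rates, activation energies, 25°C reference temperature, and function names are all hypothetical; this illustrates the general approach, not the actual MIL-HDBK-217 models.

```python
import math
from dataclasses import dataclass, replace

BOLTZMANN_EV_PER_K = 8.617e-5  # Boltzmann constant, eV/K
T_REF_K = 298.15               # hypothetical reference temperature (25 C)


@dataclass(frozen=True)
class Part:
    name: str
    base_rate_fit: float  # hypothetical failures per 1e9 hours at T_REF_K
    ea_ev: float          # assumed activation energy, eV
    temp_c: float         # assumed steady-state part temperature, C


def temperature_factor(ea_ev: float, temp_c: float) -> float:
    """Arrhenius acceleration of the failure rate relative to T_REF_K."""
    t = temp_c + 273.15
    return math.exp(-ea_ev / BOLTZMANN_EV_PER_K * (1.0 / t - 1.0 / T_REF_K))


def system_mtbf_hours(parts: list[Part]) -> float:
    """Series system with constant failure rates: rates add, MTBF = 1 / total."""
    total_fit = sum(p.base_rate_fit * temperature_factor(p.ea_ev, p.temp_c)
                    for p in parts)
    return 1e9 / total_fit


if __name__ == "__main__":
    bom = [
        Part("voltage regulator", 20.0, 0.7, 85.0),
        Part("microcontroller", 50.0, 0.5, 70.0),
        Part("capacitor", 5.0, 0.3, 60.0),
    ]
    cooler = [replace(p, temp_c=p.temp_c - 10.0) for p in bom]
    print(f"Predicted MTBF:        {system_mtbf_hours(bom):,.0f} h")
    print(f"Predicted MTBF, -10 C: {system_mtbf_hours(cooler):,.0f} h")
```

Nothing in this calculation depends on temperature cycling, vibration, or manufacturing quality: steady-state temperature is the only environmental input, which is why a constant-failure-rate requirement tends to pull the design discussion toward the Arrhenius equation.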

To quantify the reliability of electronic products, one must have a process (see Figure 1) for understanding how the product will fail and for demonstrating that failure will not occur within the product’s intended useful life. Failure of electronic products is a function of physical processes (failure mechanisms) that cause devices and assemblies to degrade and eventually fail.

To evaluate the risk posed by competing failure mechanisms, one must assess all relevant failure mechanisms. This means understanding the materials, geometries, external environment, and processes that can induce failure; evaluating the sensitivity of the identified failures to manufacturing parameters and to environmental or operating conditions; and, finally, determining whether stress management or a design change can reduce the likelihood of failure.
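One simple way to organize such an assessment is to estimate a time to failure for each relevant mechanism and compare the shortest against the required life. The sketch below assumes those estimates already exist (from mechanism-specific models, which are not shown); the mechanism list, the numbers, and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MechanismEstimate:
    mechanism: str
    site: str
    predicted_life_years: float  # assumed output of a mechanism-specific model


def screen(estimates: list[MechanismEstimate], required_life_years: float):
    """Rank competing mechanisms by predicted life.

    The earliest mechanism governs product life; any mechanism predicted to act
    before the required life is a candidate for stress management or redesign.
    """
    ranked = sorted(estimates, key=lambda e: e.predicted_life_years)
    shortfalls = [e for e in ranked if e.predicted_life_years < required_life_years]
    return ranked[0], shortfalls


if __name__ == "__main__":
    # Hypothetical estimates for illustration only.
    estimates = [
        MechanismEstimate("intermetallic growth", "wire bond", 40.0),
        MechanismEstimate("interconnect fatigue", "package-to-board joint", 6.5),
        MechanismEstimate("conductive filament formation", "circuit board", 12.0),
        MechanismEstimate("stress relaxation", "contact interface", 9.0),
    ]
    governing, shortfalls = screen(estimates, required_life_years=10.0)
    print(f"Governing mechanism: {governing.mechanism} at {governing.site}")
    for e in shortfalls:
        print(f"  below required life: {e.mechanism} ({e.predicted_life_years} years)")
```

A shortfall is a prompt for the remaining steps above: checking how sensitive that estimate is to manufacturing and environmental conditions, and deciding whether stress management or a design change is warranted.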

Failures in electronic products can result from failure of individual packaged electronic devices, failure of the electrical interconnection between a device and its carrier, or a shift in performance parameters that causes the product to operate outside defined limits. In general, wearout failure mechanisms inherent in packaged devices are far less prominent, and take longer to occur, than assembly-related wearout failures, such as those produced by normal temperature cycling and vibration.

In fact, the electronic assembly (i.e., the printed circuit board assembly) could not survive the tests used to estimate the reliability of packaged electronic devices. Failure mechanisms related to temperature cycling, which can be a primary driver of electronic failures, are not adequately modeled by the Arrhenius equation.
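A short calculation shows why. Assuming that Arrhenius damage accrues as time multiplied by exp(-Ea/kT), the result depends only on how long a part spends at each temperature. Two hypothetical daily profiles with the same hours at 20°C and 80°C therefore receive identical predictions, even though one imposes 24 times as many thermal cycles. The profiles, the 0.7 eV activation energy, and the function names are illustrative.

```python
import math

BOLTZMANN_EV_PER_K = 8.617e-5  # Boltzmann constant, eV/K

# A profile is a list of (temperature in C, hours spent at that temperature).
Profile = list[tuple[float, float]]


def arrhenius_damage(profile: Profile, ea_ev: float) -> float:
    """Relative Arrhenius damage: sum of hours * exp(-Ea / kT) over segments."""
    return sum(hours * math.exp(-ea_ev / (BOLTZMANN_EV_PER_K * (temp_c + 273.15)))
               for temp_c, hours in profile)


def thermal_cycles(profile: Profile) -> int:
    """Count cold-to-hot transitions, i.e. one per thermal cycle."""
    return sum(1 for (t0, _), (t1, _) in zip(profile, profile[1:]) if t1 > t0)


if __name__ == "__main__":
    slow = [(20.0, 12.0), (80.0, 12.0)]      # hypothetical: one cycle per day
    fast = [(20.0, 0.5), (80.0, 0.5)] * 24   # hypothetical: 24 cycles per day
    for name, p in (("slow", slow), ("fast", fast)):
        print(f"{name}: {thermal_cycles(p)} cycles/day, "
              f"Arrhenius damage {arrhenius_damage(p, 0.7):.3e}")
```

Fatigue of package-to-board interconnects, by contrast, is driven by the number and range of those cycles, which is exactly the information this model discards.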

Failures of electronic products are often linked to manufacturing issues. If the manufacturing process is not controlled or adequately understood, products may experience a high rate of early failures (infant mortality). High infant mortality is generally a sign that the manufacturing process is not under control. Failures related to infant mortality are generally not well suited to modeling with the Arrhenius equation.

Manufacturing-induced failures can generally be related to overstress occurring during packaging process steps. For example, electrostatic discharge (ESD), which can cause failure in electronic products, can occur if proper handling precautions are not taken. Contamination from the manufacturing process can lead to corrosion or metal migration, and infrequent drill changes can lead to excessive wicking in plated through-holes. Here, the training level of the assembler plays a more significant role in reliability than steady-state temperature does.

With regard to temperature and thermal management, reliability can be “built in” to the product if the development team understands the environment in which the product will operate, the required life expectancy, the material systems used to physically realize the product, and the stresses that can induce product failure. Armed with this information, the team must plan how to minimize manufacturing variability and provide appropriate stress management.

Beyond applying a physics-of-failure approach to reliability, one also needs good supply chain management to ensure product reliability. A good design may be sabotaged by defective materials or by improper part substitutions. In evaluating parts, materials, and designs, the availability of general-purpose stress assessment software and documented failure mechanisms makes virtual qualification a real possibility.

Virtual qualification is the use of software and available technical information to demonstrate that a product can meet its life expectancy objectives. The CALCE Electronic Products and Systems Center has developed methods and software for performing virtual qualification [5]. Virtual qualification also supports the development of accelerated product qualification tests (physical tests) to demonstrate product reliability. In closing, applying sound engineering approaches will increase reliability far more than reducing the maximum sustained temperature by 10°C.

References

1. Kelly, M. J., Boulton, W. R., Kukowski, J. A., Meieran, E. S., Pecht, M. G., Peeples, J. W. and Tummala, R. R., Feb. 1995, JTEC Panel Report on Electronic Manufacturing and Packaging in Japan, Loyola College, MD.

2. Pecht, M., Lall, P., and Hakim, E., 1995, “Temperature as a Reliability Factor”, Eurotherm Seminar No. 45: Thermal Management of Electronic Systems, pp. 36.1-22.

3. Cushing, M., Krolewski, J., Stadterman, T., and Hum, B., 1996, “U.S. Army Reliability Standardization Improvement Policy and Its Impact”, IEEE Transactions on Components, Packaging, and Manufacturing Technology, Part A, Vol. 19, No. 2, pp. 277-278.

4. Pecht, M., Boullie, J., Hakim, E., Jain, A., Jackson, M., Knowles, I., Shroeder, R., Strange, A., and Wyler, J., Feb. 1998, “The Realism of FAA Reliability-Safety Requirements and Alternatives”, IEEE AES Systems Magazine.

5. Osterman, M. and Stadterman, T., Jan. 1999, “Failure-Assessment Software For Circuit-Card Assemblies”, Annual Reliability and Maintainability Symposium, pp. 269-276.