To introduce the reliability requirements we face for the future, we focus on telecommunication as an example. Personal telecommunication is becoming increasingly integrated into our daily lives. However, we cannot take full advantage of the technology unless the telecommunication system is as dependable as a car. Just as turning the ignition key should produce the right engine response first time, every time, so also should the “connect” instruction from an Internet terminal or any telecommunication device. In the future, Personal Trusted Devices combining all the functions of a phone, organizer, secure web browser for shopping and personal finance, electronic cash, credit card, ID card, driver’s license, and keys to car, home, and work place will be technically possible. To gain widespread acceptance, how dependable must such a device and the infrastructure that supports it be?

To date the computer industry has been the first sector to utilize new technologies, but increasingly the telecommunication industry is taking the lead. Heavy competition and short design cycles force the use of technologies before there is adequate experience of their field reliability. Increasing the component power consumption and data clock frequencies of digital circuits reduces design tolerances and drives the demand for methods to predict technology and system reliability through simulation, augmented by accelerated laboratory tests.

Even in consumer electronics, where products have enjoyed relatively large design margins with respect to reliability and performance, products are being designed closer to their limits, forced by miniaturization. Reliability prediction must be linked to overall risk management, providing estimates of how big a reliability risk is taken when a new technology is used without previous field experience. From this, system integrators can compare the monetary benefits from increased sales and market share against the possible warranty and maintenance costs.
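The comparison described above can be sketched as a simple expected-value calculation. This is only an illustrative sketch: the function names and all figures are hypothetical, and the failure probability is assumed to come from a separate reliability prediction.

```python
def expected_warranty_cost(units_sold, p_fail_in_warranty, cost_per_failure):
    """Expected warranty cost, assuming every unit has the same
    probability of failing during the warranty period."""
    if not 0.0 <= p_fail_in_warranty <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return units_sold * p_fail_in_warranty * cost_per_failure


def net_benefit(extra_margin, units_sold, p_fail_in_warranty, cost_per_failure):
    """Added margin from using the new technology minus expected warranty cost."""
    return extra_margin - expected_warranty_cost(
        units_sold, p_fail_in_warranty, cost_per_failure
    )


# Hypothetical figures, for illustration only.
optimistic = net_benefit(2_000_000, 100_000, 0.01, 150.0)
pessimistic = net_benefit(2_000_000, 100_000, 0.20, 150.0)
print(f"optimistic: {optimistic:,.0f}  pessimistic: {pessimistic:,.0f}")
```

The spread between the two cases shows why the uncertainty of the reliability prediction matters as much as its nominal value.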

Currently emerging virtual prototyping and qualification tools simulate the effect of mechanical and thermomechanical stresses on reliability. For a subsystem or a single part, the reliability in a specified environment can be well predicted. However, the prediction accuracy decreases when the whole system is considered or when the geometry, material properties, usage profile or operating environment are not properly known. More work is needed in simulation tool and model development, in tool integration, and in capturing data on the reliability loads the system will encounter [1].

This article discusses the “knowledge gap” between temperature analysis and system lifetime assessment and how these principles can be applied in practice. The technical aspects are mainly based on work performed by CALCE Electronic Products and Systems Center at the University of Maryland [2-4].

Cooler Is Better?

The effects of temperature on electronic device failure have been obtained mainly through accelerated testing, during which the temperature and, in some cases, the power are substantially increased to make the test duration manageable. This data is then correlated with actual field failures. MIL-HDBK-217, “Reliability Prediction of Electronic Equipment”, which contains failure rate models for different electronic components, is based on this correlated data. The total reliability calculations are then performed by either “parts count” or “part stress” analysis. Although the handbook is now defunct, its basic methodology is the foundation for many in-house reliability programs still in use and has been adapted by Bellcore for telecommunications applications.
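The “parts count” calculation mentioned above amounts to summing the failure rates of all parts in the equipment. The sketch below assumes the usual form of that sum (quantity times generic failure rate times a quality factor); the part names and failure rates are hypothetical placeholders, not handbook values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Part:
    name: str
    quantity: int
    generic_failure_rate: float  # failures per million hours (hypothetical)
    quality_factor: float = 1.0  # multiplier for part quality (assumed 1.0)


def parts_count_failure_rate(parts):
    """Equipment failure rate as the sum over all part types."""
    return sum(
        p.quantity * p.generic_failure_rate * p.quality_factor for p in parts
    )


def mtbf_hours(failure_rate_per_million_hours):
    """Mean time between failures, assuming a constant failure rate."""
    return 1e6 / failure_rate_per_million_hours


# Hypothetical bill of materials, for illustration only.
board = [
    Part("ceramic capacitor", 40, 0.002),
    Part("resistor", 60, 0.001),
    Part("microcontroller", 1, 0.05),
]

total = parts_count_failure_rate(board)
print(f"failure rate: {total:.3f} per 1e6 h, MTBF: {mtbf_hours(total):.3e} h")
```

Note that the sum treats every part as a series element with a constant failure rate, which is one of the simplifications the handbook approach has been criticized for.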

The fundamental difficulties related to the use of MIL-HDBK-217 have been previously discussed in many publications [2-5], and therefore only the main issues are pointed out here.

The basis of the handbook is the assumption that many of the chip level failure mechanisms occurring under accelerated test conditions are diffusion-dominated physical or chemical processes, where the failure rate is represented by an exponential equation. Using this relationship assumes that failure mechanisms active under test conditions are also active during operation. This is substantially incorrect, since some failure mechanisms have a temperature threshold below which the mechanism is not active, while others are suppressed at elevated temperatures.
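The exponential relationship referred to above is usually written in Arrhenius form. The sketch below computes the resulting acceleration factor between a test temperature and a use temperature; the activation energy and both temperatures are illustrative assumptions, not values from the handbook.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration_factor(activation_energy_ev, use_temp_c, test_temp_c):
    """Ratio of the failure rate at test temperature to that at use temperature,
    assuming a single Arrhenius-type mechanism is active at both temperatures."""
    use_k = use_temp_c + 273.15
    test_k = test_temp_c + 273.15
    return math.exp(
        (activation_energy_ev / BOLTZMANN_EV_PER_K) * (1.0 / use_k - 1.0 / test_k)
    )


# Assumed values: 0.7 eV activation energy, 55 degC use, 125 degC test.
af = arrhenius_acceleration_factor(0.7, 55.0, 125.0)
print(f"acceleration factor: {af:.1f}")
```

The calculation is only meaningful if the same mechanism governs failure at both temperatures, which is exactly the assumption questioned in the text.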

In the temperature range -55 to 150°C, most of the reported failure mechanisms are not due to a high steady-state temperature. They depend instead on temperature gradients, temperature cycle magnitude, or rate of change of temperature [5]. An additional fundamental difficulty in using MIL-HDBK-217 type models for new and emerging technologies and components is the lack of a wide, environmentally relevant database of test data and experience of field failures. Therefore the failure time calculations would be based on many assumptions, the validity of which is not known.
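To illustrate a dependence on temperature cycle magnitude rather than on steady-state temperature, the sketch below uses a simple power law of the kind often associated with the Coffin-Manson relation. This model is not taken from the article; it is a common simplification, and the exponent of 2 and the temperature swings are placeholder assumptions.

```python
def thermal_cycling_acceleration_factor(delta_t_test, delta_t_use, exponent=2.0):
    """Acceleration factor for cycles to failure, assuming life scales as
    (temperature swing) ** (-exponent). The exponent is mechanism-specific."""
    if delta_t_test <= 0 or delta_t_use <= 0:
        raise ValueError("temperature swings must be positive")
    return (delta_t_test / delta_t_use) ** exponent


# Assumed swings: 165 K in the chamber (-40 to 125 degC), 30 K in the field.
af_cycling = thermal_cycling_acceleration_factor(165.0, 30.0)
print(f"one test cycle is equivalent to about {af_cycling:.2f} field cycles")
```

In such a model the absolute temperature does not appear at all, so a "cooler is better" rule based on steady-state temperature says nothing about this class of failure.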

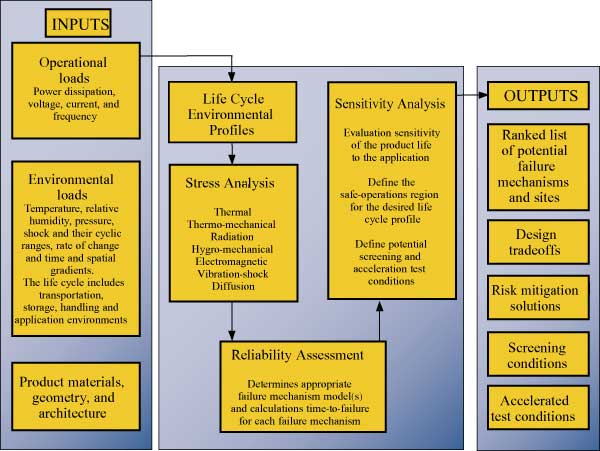

In 1993 the U.S. Army Materiel Systems Analysis Activity and CALCE began working with the IEEE Reliability Society to develop an IEEE Reliability Prediction Standard for commercial and military use. The standard is based on “Physics-of-Failure” (PoF) approaches [2]. The PoF-based design and qualification process is schematically presented in Figure 1. Space restrictions prevent a lengthy discussion, but the figure shows how risks are mitigated through various analyses using input data from a spectrum of stakeholders.

Figure 1. Overview of PoF-based design and qualification process (courtesy of CALCE).

The above-mentioned work culminated in two IEEE standards [6].

- The first, “IEEE Standard Reliability Program for the Development and Production of Electronic Systems and Equipment” (IEEE Std 1332-1998), was developed to ensure that every activity in a product reliability program adds value. In brief, IEEE 1332-1998 identifies three objectives for a reliability program: the supplier shall determine, meet, and verify the customer’s requirements and product needs.

- The second, “IEEE Standard Methodology for Reliability Prediction and Assessment for Electronic Systems and Equipment” (IEEE Std 1413-1999), identifies the elements required for an understandable, credible reliability prediction. Its aim is to provide sufficient information for the effective use of the results, which requires thorough documentation of the reliability assessment.

The likely future implications of these standards for the electronics industry are not entirely clear. However, one can imagine their impact to be similar to the introduction of the ISO 9000 quality management system, when customers asked their suppliers whether their internal quality management systems were ISO 9000 certified and started to show a strong preference for certified suppliers. The impact of IEEE 1332-1998 and IEEE 1413-1999 may be more profound, since they are more directly related to what the customer is interested in (the quality of the supplier’s product).

IEEE 1332-1998 requires suppliers to work closely with customers, so a supplier’s compliance with IEEE 1332-1998 should be readily apparent. However, customers need to ask whether the reliability assessment of the product they are considering is IEEE 1413-1999 compliant and, if so, ask for the supporting documentation. “No” is unlikely to remain an acceptable answer for very long. Unfortunately, many early failures with a new technology, such as process-related manufacturing problems, are difficult to detect using the PoF approach. The above-mentioned IEEE standards take this difficulty into consideration by demanding that the assumed root causes be documented and brought to the awareness of the customer so that their relevance can be assessed.

In many electronics products utilizing high density packages, high clock frequencies, and high power, the dominant reliability problems are not related to components but to interconnects, especially solder joints. It is striking to observe that no international standards exist to specify the maximum allowable values of known stressors, such as the creep strain energy dissipated per operational cycle. In short, in many cases, products are tested using standards that don’t make sense, while no standards address the real causes of many of the problems that occur.

The Role of Thermal Design and Reliability Qualification in Practice

Thermal design has grown in importance over the past few years as the technical challenges and costs of cooling have increased. Central to this is thermal analysis, allowing designers to examine a range of cooling scenarios quickly and to ensure that catastrophic design mistakes are not made. Over 90% of the thermal analyses performed on electronics products over the last few years have been steady-state based. In part this has been driven by the focus on temperature during steady-state operation from a reliability standpoint. It is also relatively quick, compared to a transient analysis.

Thermal “uprating” is the assessment of a part to meet functional and performance requirements when used outside the manufacturer-specified temperature range [7]. In spite of thermal derating [8], uprating has recently become interesting as a means of achieving competitive advantage despite the legal issues it raises relating to warranty. To estimate the risks being taken with a given design, the extent to which the manufacturer’s limits on temperature are exceeded must be accurately known [7]. This presents a further challenge to thermal analysis.

Despite huge progress, the accuracy of thermal analysis remains seriously limited by:

Environmental uncertainties

- Limited knowledge of the actual operating environment of the equipment

- The actual system power on/power off cycle and loading

- Information on the peak and normal component operating powers, etc.

Limited design data

- Lack of thermal models of components suitable for design calculations

- Unknown material properties or material property data of unknown accuracy

- Unknown model accuracy and sensitivity

- Inherent errors associated with using CFD analysis of complex geometries [9]

The above classification reflects a natural, if somewhat idealized, division of responsibility. The system integrator is responsible for resolving environmental uncertainties through discussion with the customer/user of the equipment. The component supplier is responsible for providing behavioral models of the supplied components to allow the equipment manufacturer to model their performance within the system.

When the design cycles are short and new technologies are continually being adopted, a virtual qualification approach is needed. This allows design weaknesses to be identified by prediction and removed before the prototype used in highly accelerated life testing (HALT) is created. Although experimental tests are used to verify the design, their number can be greatly reduced by numerical simulation. Again, this places greater reliance on simulation, requiring better tool support, thermal and thermomechanical data, models, and material properties.

At present, analyses performed to investigate the impact of design changes on the thermal, electromagnetic compatibility, and thermomechanical behavior of the system are either performed separately or are not performed at all due to the time required to replicate design changes across a range of tools. The ability to perform such analyses concurrently will allow design tradeoffs to be investigated much faster and at minimum cost. The long-term aim is to identify weaknesses during design and use the knowledge to improve design practices. Ultimately this will provide an analysis environment in which physical designs of known reliability can be created.

We are still far away from a method that enables designers to address reliability requirements in a logical and user-friendly way. In order to realize this, progress is needed in the following areas:

- Accuracy of thermal analysis

- Accuracy of the reliability assessment based on thermal/thermomechanical input

- Knowledge of all active failure mechanisms

- System level thermal analysis to address application-driven stressors

- Reliable field data

- Failures caused by design errors distinguished from those related to reliability

- Physical understanding of various notoriously difficult failure mechanisms, e.g. solder joint reliability

- Realistic user-defined environmental profiles

This is a massive task that can be achieved only through the cooperative efforts of many experts in many different disciplines. Progress is hampered by “over the wall” design cultures. In addition, electronics designers are often held responsible for reliability calculations. While the electronic engineer is certainly responsible for the power dissipation, the mechanical engineer should be responsible for the resulting temperature rise and, ultimately, reliability.

Apart from correct input data, accurate reliability analysis depends on the accuracy of a whole range of separate tools that must be combined: thermomechanical, EMC, vibration, humidity, and thermal.

About the PROFIT Project – Shrinking the Knowledge Gap

Recognizing the need to improve the data and methods used in physical design, the European Community is funding the PROFIT project: Prediction of Temperature Gradients Influencing the Quality of Electronic Products [10]. The partners in PROFIT are Philips Research (coordinating), Flomerics, ST Microelectronics, Infineon Technologies, Philips Semiconductors, Technical University of Budapest, MicRed, TIMA, CQM and Nokia.

PROFIT has a number of tasks relating to its main aim: to provide reliable and accurate temperature-related information to the designers responsible for yield improvement, performance, reliability, and safety. Only those activities directly related to reliability are discussed here.

Developing reliability analysis methodologies is beyond PROFIT’s scope but is being done in other related projects. Thermomechanical failures, especially related to interconnects, are of great interest. Nokia, Philips and Flomerics will investigate the benefits of using detailed system-level temperature information predicted for the equipment during operation to drive time-dependent thermomechanical stress calculations and, hence, predict system reliability.

In doing so, the partners hope to produce failure and lifetime predictions that are representative of the equipment’s field operation, taking into account the normal use cycle and changing environmental loads. If successful, it will then be possible to make this an integral part of the product design process, providing the potential to perform lifetime predictions at each design iteration. Nokia will devise a suitable demonstration of this aspect of the project results.
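One simple way to turn a use cycle with changing loads into a lifetime estimate is linear damage accumulation (the Palmgren-Miner rule). The sketch below is an assumption made for illustration, not a description of the partners' method, and the load profile values are hypothetical.

```python
def daily_damage(load_profile):
    """Fraction of life consumed per day.

    load_profile is an iterable of (cycles_per_day, cycles_to_failure) pairs,
    one per load condition; cycles_to_failure would come from a separate
    thermomechanical stress calculation.
    """
    damage = 0.0
    for cycles_per_day, cycles_to_failure in load_profile:
        if cycles_to_failure <= 0:
            raise ValueError("cycles_to_failure must be positive")
        damage += cycles_per_day / cycles_to_failure
    return damage


def predicted_life_days(load_profile):
    """Days until the accumulated damage reaches 1 (assumed failure criterion)."""
    damage = daily_damage(load_profile)
    return float("inf") if damage == 0 else 1.0 / damage


# Hypothetical use profile: frequent mild cycles plus one severe cycle per day.
profile = [(4, 20_000), (1, 5_000)]
life = predicted_life_days(profile)
print(f"predicted life: {life:.0f} days")
```

Because the calculation is cheap once the cycles to failure are known, it could in principle be repeated at each design iteration.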

The participating semiconductor manufacturers (ST Microelectronics, Infineon Technologies and Philips Semiconductors) will use the project results to better understand the actual reliability of their products in different environments and, through modeling, be able to improve the thermal and thermomechanical behavior of their components. This will result in more usable thermal data for end users and package yield improvements.

The thermal software vendors (Flomerics and MicRed) will use the results to develop their tools to support their customers’ physical design activities so that a more holistic view of the physical performance of the system can be obtained. This involves combining the simulation of thermal performance with EMC and thermomechanical stress. Web-centric tools are also being developed to support the provision of data and models.

The RELIQUI project

Parallel to PROFIT, a separate project, RELIQUI (Reliability and Quality Integration), in which Philips, Nokia and CALCE participate, has been started to address a number of reliability issues. The ultimate goal is to make significant contributions to the creation of better quality products at a lower cost and in less time. The objective will be realized through the development of virtual qualification and prototyping tools, enabling the assessment of yield improvement, performance, reliability and safety (collectively referred to hereafter as “quality”) in every stage of the design process.

More specifically, the following sub-objectives can be distinguished:

- Exploring methods to enable design-to-limits

- Virtual qualification enabling earlier choice between alternative designs

- Improving physics-based understanding of final product reliability

- Realizing faster test methods

- Understanding the relationship between accelerated tests on parts and system performance

- The search for realistic temperature specifications

The project focuses on the quality assessment of complete products, which distinguishes the project from the many other projects that focus on some part of the system only (component, interconnect, board). The project also differs in the use of operational conditions rather than standardized test conditions. Another prominent feature of the project is the possibility to test many products, enabling statistically significant conclusions. Finally, the project will investigate coupling the results of system-level thermal analysis software to reliability software, thereby including the various results from the PROFIT project.

Summary

The “Physics of Failure” approach to reliability qualification has been compared to MIL-HDBK-217. The role of thermal design and reliability qualification has been discussed in the context of current industrial needs for short design cycles and rapid implementation of new technologies. Two multi-company projects aimed at improving reliability through better temperature-related information have been presented.

References

1. M. Lindell, P. Stoaks, D. Carey, P. Sandborn, 1998, “The Role of Physical Implementation in Virtual Prototyping of Electronic Systems”, IEEE Trans. CPMT, Part A, Vol. 21, No. 4, pp. 611-616.

2. M. Pecht, 1996, “Why the traditional reliability prediction models do not work – is there an alternative?”, ElectronicsCooling, Vol. 2, No. 1, pp. 10-12.

3. D. Das, 1999, “Use of Thermal Analysis Information in Avionics Equipment Development”, ElectronicsCooling, Vol. 5, No. 3, pp. 28-34.

4. M. Osterman, 2001, “We still have a headache with Arrhenius”, ElectronicsCooling, Vol. 7, No. 1, pp. 53-54.

5. P. Lall, M. Pecht, E. Hakim, 1997, “Influence of Temperature on Microelectronics and System Reliability”, CRC Press, New York.

6. http://standards.ieee.org/catalog/reliability.html

7. D. Humphrey, L. Condra, N. Pendsé, D. Das, C. Wilkinson, M. Pecht, 2000, “An Avionics Guide to Uprating of Electronic Parts”, IEEE Trans. CPMT, Part A, Vol. 23, No. 3, pp. 595-599.

8. M. Jackson, P. Lall, D. Das, 1997, “Thermal Derating – A Factor of Safety or Ignorance”, IEEE Trans. CPMT, Part A, Vol. 20, No. 1, pp. 83-85.

9. C. Lasance, 2001, “The Conceivable Accuracy of Experimental and Numerical Thermal Analyses of Electronic Systems”, Proc. 17th SEMI-THERM, pp. 180-198.

10. C. Lasance, 2001, “The European Project PROFIT: Prediction of Temperature Gradients Influencing the Quality of Electronic Products”, Proc. 17th SEMI-THERM, pp. 120-125.