Introduction

The environment of every data center is complex and unique. Within the room, hot and cold air streams collide, cold air exhausts upward from perforated tiles, and hot air exhausts from the tops and rears of servers and storage racks. Not only are these air circulation patterns extremely complex, they can also be transient because of the periodic cycling of the servers and the A/C units. Air flow distribution within a data center has a major effect on the thermal environment of the data processing equipment located within these rooms, and a key requirement of datacom (data processing and communications) equipment is that the inlet temperature and humidity be maintained within the required specifications.

Air flow in a datacom room has a major effect on the cooling of computer rooms. Cooling concepts with a few different air flow directions are illustrated in [1]. A number of papers have focused on whether the air should be delivered from overhead or from underneath via a raised floor plenum [2-4], ceiling height requirements to eliminate “heat traps” or hot air stratification [2,5], raised floor heights [5], and proper distribution of the computer equipment in the data center [3,6] so that hot spots or high temperatures do not occur. Computer room cooling concepts can be classified according to the two main types of room construction: non-raised floor (or standard room) and raised floor. Some of the papers discuss and compare these concepts in general terms [4,7-9].

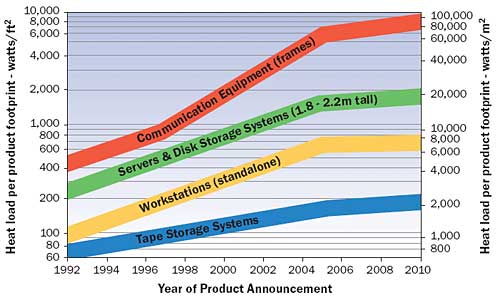

Not only are the air patterns extremely complex, but the power density of the data processing equipment is also increasing at a rapid rate. Due to technology compaction, the Information Technology (IT) industry has seen a large decrease in the floor space required to achieve a constant quantity of computing and storage capability. However, the energy efficiency of the equipment has not improved at the same rate to offset the upward trend. This has resulted in a significant increase in power density and heat dissipation within the footprint of computer and telecommunications hardware. The heat dissipated by these systems is exhausted into the room, and the air must be distributed with greater precision so that the air temperature and humidity at the inlet to the data processing equipment stay within the computer manufacturer’s specifications. With the increased datacom equipment power densities, shown in Figure 1 [10], maintaining acceptable rack inlet conditions for reliable operation of the equipment is becoming a major challenge.

Figure 1. Original datacom equipment power trend chart (The Uptime Institute).

Besides the significant increase in equipment power density, other factors play into data center thermal management. Managers of IT equipment need to deploy equipment quickly in order to get maximum use of a large financial asset. This could mean that minimal time is spent on site preparation, which can lead to thermal issues once the equipment is installed. The construction cost of a data center exceeds $15,000/m² in some metropolitan areas. For these reasons, the IT and facilities managers want to obtain the most out of their data center space and maximize the utilization of their infrastructure. Unfortunately, the current situation in many data centers does not allow time for this optimization. The IT equipment installed in a data center can come from many different manufacturers, each having different environmental specifications. With these multiple sets of requirements, the IT facilities manager must overcool the entire data center to compensate for the equipment with the tightest requirements.

Herein lies the challenge of data center facility operators, especially with increased equipment heat loads as shown in Figure 1. How do operators maintain these environmental requirements for all the racks situated within a data center where the equipment is constantly changing? Unless proper attention is given to designing facilities capable of providing sufficient airflow and rack inlet air temperatures, hot spots can occur within the data center.

Formation of ASHRAE TC9.9

ASHRAE (American Society of Heating, Refrigerating, and Air-Conditioning Engineers) is an international organization with over 50,000 members. Its mission is to provide important unbiased direction to the general population of engineers, equipment manufacturers, and facility owners with a vested interest in HVAC design and operation. In January 2002, the authors approached ASHRAE and proposed creating an independent committee specifically to address high-density electronic equipment heat loads. ASHRAE accepted the proposal, and eventually a technical committee, TC9.9 Mission Critical Facilities, Technology Spaces, & Electronic Equipment, was formed. The objective of this committee is to improve communication between electronic/computer equipment manufacturers and facility operations personnel to ensure proper and fault-tolerant operation of equipment within datacom facilities. The committee includes extensive representation from major electronics/computer manufacturers, as well as facility cooling equipment manufacturers and cooling system contractors.

Thermal Guidelines

The first priority of TC9.9 was to create a Thermal Guidelines document that would give design guidance to equipment manufacturers and help data center facility designers achieve efficient, fault-tolerant operation within the data center. The resulting document, “Thermal Guidelines for Data Processing Environments”, published in January 2004 [11], addresses a variety of key issues, including those described below.

For data centers, the primary thermal management focus is on assuring that the temperature and humidity requirements of the data processing equipment are being met. These requirements can be a challenge, especially when many racks are arranged in close proximity to each other. Unfortunately, each manufacturer also has its own environmental specifications, and data centers that house all types of electronic equipment are faced with a wide variety of environmental specifications. In an effort to standardize, the ASHRAE TC9.9 committee first surveyed the environmental specifications of a number of data processing equipment manufacturers. From this survey, four distinct classes were created that would encompass the majority of the specifications. The guidelines also include a comparison to the NEBS (Network Equipment Building System) specifications for the telecom industry, both to show the differences and to aid in possible convergence of the specifications in the future. The four data processing classes cover the entire environmental range, from the air-conditioned server and storage environments of classes 1 and 2 to the less controlled environments such as class 3 for workstations, PCs, and portables, or class 4 for point-of-sale equipment with virtually no specific environmental control.

The class 1 environment is the most tightly controlled and would be most appropriate for data centers with mission critical operations. The dry bulb allowable temperature range is 15 to 32°C while the recommended is 20 to 25°C. The allowable relative humidity range is 20 to 80% while the recommended is 40 to 55%. Facilities should be designed and operated to target the recommended range while the equipment manufacturers design the equipment to operate in the allowable range. The maximum elevation for the equipment is 3,050 m with the dry bulb de-rated by 1°C/300 m above 900 m. Finally the maximum dewpoint is 17°C and the maximum rate of change is 5°C/hr. These are the product operating specifications. Additional information is given in the guidelines on the non-operating specifications.
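The class 1 limits quoted above lend themselves to a simple automated check of measured rack inlet conditions. The following is a minimal sketch rather than anything taken from the guideline itself: the function and constant names are hypothetical, the altitude de-rating is assumed to apply only to the upper allowable dry bulb limit, and the rate-of-change limit is left out because it needs a time series rather than a single reading.

```python
# Class 1 operating limits as quoted in the text above.
ALLOWABLE_TEMP_C = (15.0, 32.0)
RECOMMENDED_TEMP_C = (20.0, 25.0)
ALLOWABLE_RH_PCT = (20.0, 80.0)
RECOMMENDED_RH_PCT = (40.0, 55.0)
MAX_DEWPOINT_C = 17.0
MAX_ELEVATION_M = 3050.0
DERATE_START_M = 900.0       # de-rating begins above this elevation
DERATE_M_PER_DEG_C = 300.0   # 1 degC of de-rating per 300 m


def max_allowable_dry_bulb_c(elevation_m: float) -> float:
    """Upper allowable dry bulb limit after altitude de-rating (assumed to
    apply to the upper allowable limit only)."""
    excess_m = max(0.0, elevation_m - DERATE_START_M)
    return ALLOWABLE_TEMP_C[1] - excess_m / DERATE_M_PER_DEG_C


def classify_inlet(temp_c: float, rh_pct: float, dewpoint_c: float,
                   elevation_m: float = 0.0) -> str:
    """Return 'recommended', 'allowable', or 'out_of_spec' for one reading."""
    if elevation_m > MAX_ELEVATION_M:
        return "out_of_spec"

    t_max = max_allowable_dry_bulb_c(elevation_m)
    in_allowable = (
        ALLOWABLE_TEMP_C[0] <= temp_c <= t_max
        and ALLOWABLE_RH_PCT[0] <= rh_pct <= ALLOWABLE_RH_PCT[1]
        and dewpoint_c <= MAX_DEWPOINT_C
    )
    if not in_allowable:
        return "out_of_spec"

    in_recommended = (
        RECOMMENDED_TEMP_C[0] <= temp_c <= RECOMMENDED_TEMP_C[1]
        and RECOMMENDED_RH_PCT[0] <= rh_pct <= RECOMMENDED_RH_PCT[1]
    )
    return "recommended" if in_recommended else "allowable"


if __name__ == "__main__":
    # A 30 degC inlet is allowable at sea level but not at 1,800 m,
    # where the de-rated upper limit is 29 degC.
    print(classify_inlet(30.0, 45.0, 10.0))
    print(classify_inlet(30.0, 45.0, 10.0, elevation_m=1800.0))
```

A facility monitoring system could run such a check per rack inlet sensor, flagging readings that fall outside the recommended range before they drift outside the allowable one.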

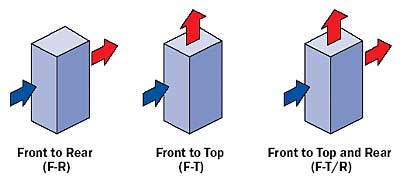

For seamless integration between the server and the data center to occur, certain protocols needed to be developed, especially in the area of airflow. Currently, manufacturers place their equipment air inlets and exhausts wherever it is convenient from an architectural packaging standpoint. As a result, there have been many cases where the inlet of one server is directly next to the exhaust of adjacent equipment, resulting in the ingestion of hot air. This has direct consequences for the reliability of that machine. The guideline attempts to steer manufacturers toward a common airflow scheme that prevents this hot air ingestion by specifying regions for inlets and exhausts. It recommends one of the three airflow configurations shown in Figure 2.

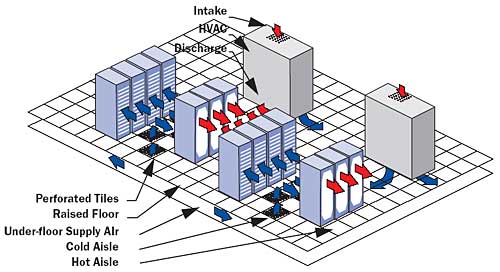

Figure 2. Recommended datacom equipment air flow protocols (ASHRAE).

Once manufacturers start implementing the equipment protocol, it will become easier for facility managers to optimize their layouts to accommodate the maximum possible power density of IT equipment by following the guidelines recommending the hot-aisle/cold-aisle concept as shown in Figure 3. In other words, the front face of all equipment is always facing the cold aisle.

Figure 3. Example of hot and cold aisle with underfloor supply air (ASHRAE).

Another issue that had to be addressed was the accurate reporting of heat load dissipated by the various electronic boxes manufactured by the datacom manufacturers. The ASHRAE guideline defines what information is to be reported by the information technology equipment manufacturer to assist the data center planner in the thermal management of the data center. The equipment heat release value is the key parameter that is reported. In addition, several other pieces of information are required if the heat release values are to be meaningful; for example, total system airflow rate, typical configurations of the system, airflow direction of the system, and the environmental class of the system.
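One reason the airflow rate is needed alongside the heat release is that the two together determine the air temperature rise through the equipment, via the steady-state energy balance ΔT = Q / (ρ · c_p · V̇). The sketch below illustrates this under stated assumptions: the `ThermalReport` record and its field names are hypothetical and do not reproduce the guideline's report format, and the air density and specific heat are typical values for air near 20°C at sea level rather than figures from the guideline.

```python
from dataclasses import dataclass

# Assumed air properties near 20 degC at sea level (not from the guideline).
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_KG_K = 1005.0


@dataclass
class ThermalReport:
    """Hypothetical record of the items the text says a manufacturer reports."""
    configuration: str        # e.g. "typical"
    heat_release_w: float     # equipment heat release, W
    airflow_m3_s: float       # total system airflow rate, m^3/s
    airflow_direction: str    # illustrative label, e.g. "front-to-rear"
    environmental_class: int  # 1 to 4

    def air_temp_rise_c(self) -> float:
        """Inlet-to-exhaust air temperature rise from the energy balance."""
        if self.airflow_m3_s <= 0:
            raise ValueError("airflow must be positive")
        return self.heat_release_w / (
            AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * self.airflow_m3_s
        )


def required_airflow_m3_s(heat_release_w: float, max_rise_c: float) -> float:
    """Airflow needed to keep the air temperature rise at or below max_rise_c."""
    if max_rise_c <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_release_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * max_rise_c)


if __name__ == "__main__":
    # Illustrative numbers only: a 10 kW rack moving 0.8 m^3/s of air.
    rack = ThermalReport("typical", 10_000.0, 0.8, "front-to-rear", 1)
    print(f"temperature rise: {rack.air_temp_rise_c():.1f} degC")
    print(f"airflow for a 12 degC rise: {required_airflow_m3_s(10_000.0, 12.0):.2f} m^3/s")
```

With these illustrative numbers the rise is roughly 10°C, which shows why a heat release figure alone does not tell the planner how much supply air a rack needs.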

Datacom Equipment Power Trends

Datacom equipment technology is advancing at a rapid pace, resulting in relatively short product cycles and more frequent datacom equipment upgrades. Since the datacom facilities that house this equipment, and their associated HVAC infrastructure, are composed of components that typically have long life cycles, any modern datacom facility design must be able to accommodate seamlessly the multiple datacom equipment deployments it will experience during its lifetime.

These changes may result in significant variances in load densities and location. If a facility is rigidly designed without a view to future accommodations, a situation may result in which datacom equipment redeployment is not possible due to the inflexibility of the cooling system or to the requirement for cooling system modifications that are financially unviable. Therefore, any information on the future power trends of datacom equipment represents an invaluable resource in the design of a cooling system for a datacom facility.

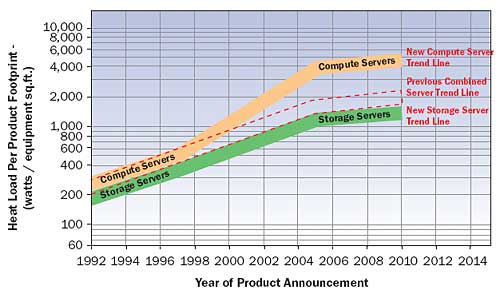

Based on the latest information from all the leading datacom equipment manufacturers, the upcoming ASHRAE special publication “Datacom Equipment Power Trends and Cooling Applications”, authored by the TC9.9 committee, provides new and expanded datacom equipment power trend charts. This information allows the datacom facility designer to predict more accurately the datacom equipment loads that a given facility may expect to accommodate in the future, and also provides ways of applying the trend information to datacom facility designs today.

Many members of the Thermal Management Consortium on Data Center and Telecom Rooms, which created the original power trend charts published by the Uptime Institute [10], also actively participated in the creation of the new power trend charts in the new publication. The new trends are projected out to 2014, and the number of categories has increased from four to seven to better delineate how the trends differ among the types of equipment.

One example of the evolution of the power trends charts is how the previously combined trend for all servers has been split into separate trends for storage servers and compute servers since their power trends have shown some differences over time (Figure 4).

Figure 4. New datacom equipment power trend chart – compute and storage servers split (ASHRAE).

Also included in this publication is an overview of various air and liquid cooling system options that may be considered to handle the future loads, and an invaluable appendix containing a collection of terms and definitions used by the datacom equipment manufacturers, the facilities operation industry, and the cooling design and construction industry.

Future TC9.9 Activities

The TC9.9 committee is now working on a number of projects and activities. There is a lack of current information and guidelines on building cooling systems for facilities housing datacom equipment, and the two publications (“Thermal Guidelines for Data Processing Environments” and “Datacom Equipment Power Trends and Cooling Applications”) represent the beginning of the committee’s efforts to provide the information needed to bridge the gap between the IT and data center facilities industries.

Other ongoing activities include:

- Complete rewrite of Chapter 17 of the ASHRAE Applications Handbook on design criteria and considerations for datacom facilities.

- Update ASHRAE Standard 127 on the method for testing and rating datacom facility cooling equipment such as Computer Room Air-Conditioning (CRAC) units.

- Measurements and modeling initiatives of existing high density datacom facilities.

- Sponsoring a High Density Cooling Issues symposium at the upcoming ASHRAE Winter Meeting in February 2005 and sponsoring two symposia at the ASHRAE Annual Meeting in June 2005.

- Numerous speaking engagements and presentations nationwide, including recent conferences by the Uptime Institute, Storage Networking World, IMAPS, Datacenter Dynamics, Semitherm, Itherm and the 7×24 Exchange.

The contact information and the activities of the TC9.9 committee can be followed from the ASHRAE TC9.9 website: www.tc99.ashraetcs.org

References

1. Obler, H., “Energy Efficient Computer Cooling,” Vol. 54, No. 1, Jan. 1982, pp. 107-111.

2. Levy, H.F., “Computer Room Air Conditioning: How to Prevent a Catastrophe,” Building Systems Design, Vol. 69, No. 11, November 1972, pp. 18-22.

3. Goes, R.W., “Design Electronic Data Processing Installations for Reliability,” Heating, Piping & Air Conditioning, Vol. 31, No. 9, September 1959, pp. 118-120.

4. Di Giacomo, W.A., “Computer Room Environmental Systems,” Heating/Piping/Air Conditioning, Vol. 45, No. 11, October 1973, pp. 76-80.

5. Ayres, J.M., “Air Conditioning Needs of Computers Pose Problems for New Office Building,” Heating, Piping and Air Conditioning, Vol. 34, No. 8, August 1962, pp. 107-112.

6. Grande, F.J., “Application of a New Concept in Computer Room Air Conditioning,” The Western Electric Engineer, Vol. 4, No. 1, January 1960, pp. 32-34.

7. Green, F., “Computer Room Air Distribution,” ASHRAE Journal, Vol. 9, No. 2, February 1967, pp. 63-64.

8. Birken, M.N., “Cooling Computers,” Heating, Piping & Air Conditioning, Vol. 39, No. 6, June 1967, pp. 125-128.

9. Levy, H.F., “Air Distribution Through Computer Room Floors,” Building Systems Design, Vol. 70, No. 7, October/November 1973, p. 16.

10. The Uptime Institute, white paper, “Heat Density Trends in Data Processing, Computer Systems and Telecommunications Equipment,” www.uptimeinstitute.org.

11. ASHRAE, “Thermal Guidelines for Data Processing Environments,” 2004, available from www.tc99.ashraetcs.org.