Introduction

IBM announced its return to water cooling on April 19, 2005, with the introduction of a water cooled heat exchanger mounted to the back cover of a 19 inch rack. This made IBM the first of the major datacom equipment manufacturers to employ water cooling for a rack of CMOS processors, using a cooling distribution unit to supply the water and reject the heat load to building chilled water. After a ten-year absence (IBM produced its last water cooled system in 1995 [1]), it had become clear that some customers were under severe environmental constraints in their data centers, necessitating a shift to a cooling technology other than air. Water cooling offered a solution and, in so doing, would follow a path that was forged when water cooling was first introduced into the data center for cooling computers in 1964.

Historical Perspective

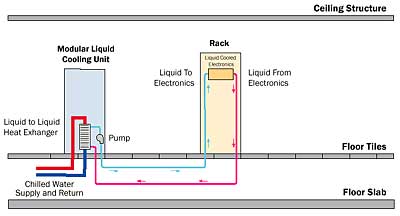

Water cooling began with IBM's announcement of its first water cooled product in 1964. In this product, building chilled water was supplied directly to the system, where it was used to cool interboard heat exchangers that reduced the temperature rise across multiple stacks of boards populated with cards. Then, in 1965, a further step was taken to improve system reliability by controlling the water quality through a cooling distribution unit. This unit provided a buffer between the building chilled water and the secondary water loop that supplied conditioned water to the computer system. A schematic of this arrangement is shown in Figure 1.


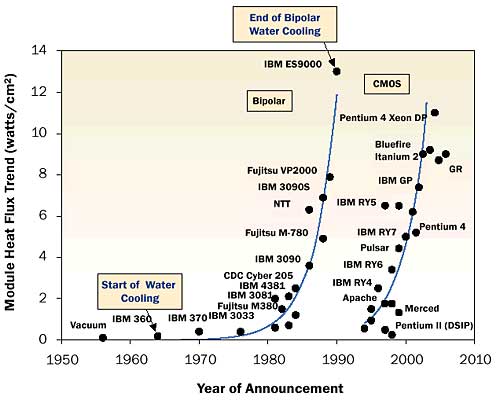

Figure 1. External liquid cooled rack with remote heat rejection (ASHRAE [2]).

Improvements to those first water cooled systems continued over the next 30 years, with many other companies in the mainframe business employing water cooling in their products as well. In 1987, at the height of the mainframe market, the magazine Datamation published a list of mainframe manufacturers showing that 92% of the revenue from mainframes was generated by companies that employed liquid cooling. The manufacturing of these products continued into the early to mid-1990s, at which time the market shifted from bipolar technologies to CMOS, where the power dissipation was an order of magnitude less and air cooling was the most cost effective choice for removing the heat. Thermal management primarily focused on the use of fans, blowers, and heat sinks. As module heat fluxes (Figure 2) continued to rise at an alarming rate, enhanced air cooling technologies that utilized heat pipes, vapor chambers, high performance interface materials, and high performance air moving devices were employed. However, heat fluxes are now reaching the limits of air cooling at the die and module levels as well as at the server, rack, and data center levels almost simultaneously.


Figure 2. Module heat flux trend. Module powers shifted by ~10 years from bipolar to CMOS.

Enhancement of CMOS Performance Using Liquid

Air cooling continues to be the major cooling method adopted for CMOS today. However, some significant challenges are facing the industry. When one reviews the historical trends of module level power density for both bipolar and CMOS over the past 40 years, a real crisis seems to be looming on the horizon. Figure 2 shows that the module level heat flux for CMOS technologies is now at the same level as the water cooled bipolar technology last shipped in the early 1990s. While all the bipolar systems shown on this graph employed some form of liquid cooling, most of the CMOS technologies shipped today continue to push the limits of air cooling, with only marginal improvements achieved in each succeeding generation. In recognition of this marginal improvement, some computer manufacturers are now offering liquid cooling for their CMOS-based computers.

In 1993 IBM began transforming its mainframe computer technology from one based on bipolar to one based on CMOS technology. IBM’s S/390 system shipped in 1997 with 12 processing units all packaged within a single multichip package. This was a unique system in that it was the first IBM system that employed refrigeration cooling [3].

Fujitsu shipped a hybrid liquid cooled system in 2000 [4]. This system employed a refrigeration loop that connected to a loop that circulated water to cold plates attached to processor modules. The Fujitsu Model GS8900 lowered water temperatures to 5°C (41°F) and required careful considerations to eliminate any condensation concerns. The refrigeration system, installed in a half frame, was designed as a fully redundant system.
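The condensation concern with 5°C water can be illustrated with a dew point check. The following is a minimal sketch, not the method used in the Fujitsu design: it uses the Magnus approximation for dew point, and the room conditions (22°C, 50% relative humidity) and the function names are assumptions chosen only for illustration.

```python
import math


def dew_point_c(air_temp_c: float, relative_humidity_pct: float) -> float:
    """Approximate dew point in deg C using the Magnus formula."""
    a, b = 17.62, 243.12  # Magnus coefficients over liquid water (b in deg C)
    gamma = math.log(relative_humidity_pct / 100.0) + a * air_temp_c / (b + air_temp_c)
    return b * gamma / (a - gamma)


def condensation_risk(surface_temp_c: float, air_temp_c: float,
                      relative_humidity_pct: float) -> bool:
    """True if an exposed surface at surface_temp_c is at or below the dew point."""
    return surface_temp_c <= dew_point_c(air_temp_c, relative_humidity_pct)


if __name__ == "__main__":
    # Assumed room conditions (hypothetical, not from the article): 22 C, 50% RH.
    room_temp_c, room_rh_pct = 22.0, 50.0
    print(f"dew point: {dew_point_c(room_temp_c, room_rh_pct):.1f} C")
    # 5 C is the water temperature quoted for the GS8900.
    print("5 C surface at risk:", condensation_risk(5.0, room_temp_c, room_rh_pct))
```

Under these assumed conditions the dew point is well above 5°C, so any uninsulated surface at the water temperature would collect condensation, which is why such a design has to address it explicitly.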

Cray is continuing to ship their Fluorinert™ based systems in their latest 252 processor Cray X1 [5]. This system has an internal spray cooling system for the processors employing Fluorinert with an external heat rejection to the building chilled water system. What is unique about this system is that the entire system is completely enclosed such that the heat generated is exchanged directly to chilled water with the use of air to water heat exchangers and Fluorinert to water heat exchangers.

Apple’s latest introduction of the Power Mac™ G5 (2.5 GHz) employs liquid cooling for its dual processor [6]. This is an internal enhanced liquid cooling system that employs copper cold plates that mate to bare PowerPC™ processor chips.

Removal of Rack Heat Load Using Liquid

Datacom facilities have limitations, and each is unique: some facilities have much lower power density capacities than others. Traditional datacom facility cooling designs use multiple computer room air conditioning (CRAC) units located around the perimeter of the electronic equipment room. The cold supply air from the CRAC units must travel the distance from the unit to the electronic equipment racks and then return to the CRAC units. Some customers of server or storage racks, who do not have the capability to cool clusters of high powered racks, are either spreading the racks out on their data center floor (for some data centers space is at a premium and this is not an option) or partially populating the racks to reduce the rack heat load.

To resolve these environmental issues, manufacturers of building cooling equipment, and now IBM, have begun to offer alternative cooling solutions to aid in datacom facility thermal management. The objective of these new approaches is to extend the liquid media to heat exchangers that are closer to the source of the problem (i.e., the electronic equipment that is producing the heat). These solutions increase the capacity, flexibility, efficiency, and scalability of the cooling solutions.
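One way to see why bringing liquid closer to the heat source increases capacity is the sensible heat balance Q = ρ · V̇ · cp · ΔT, which gives the volumetric flow needed to carry a given heat load. The sketch below is illustrative only: the 20 kW rack load and 10 K coolant temperature rise are assumed values, the fluid properties are approximate room-temperature figures, and the function name is hypothetical.

```python
def volumetric_flow_m3_per_s(heat_load_w: float, density_kg_m3: float,
                             cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """Flow needed to absorb heat_load_w with a coolant temperature rise of delta_t_k."""
    return heat_load_w / (density_kg_m3 * cp_j_per_kg_k * delta_t_k)


# Approximate fluid properties near room temperature.
AIR = {"density_kg_m3": 1.2, "cp_j_per_kg_k": 1005.0}
WATER = {"density_kg_m3": 998.0, "cp_j_per_kg_k": 4180.0}

if __name__ == "__main__":
    rack_load_w = 20_000.0  # assumed rack heat load (hypothetical)
    delta_t_k = 10.0        # assumed coolant temperature rise (hypothetical)

    air_flow = volumetric_flow_m3_per_s(rack_load_w, delta_t_k=delta_t_k, **AIR)
    water_flow = volumetric_flow_m3_per_s(rack_load_w, delta_t_k=delta_t_k, **WATER)

    print(f"air:   {air_flow:.2f} m^3/s")
    print(f"water: {water_flow * 1000:.2f} L/s")
    print(f"air needs about {air_flow / water_flow:.0f} times the volume flow of water")
```

For the same heat load and temperature rise, air requires a few thousand times the volumetric flow of water, which is the basic reason a compact liquid cooled heat exchanger at the rack can handle loads that are difficult to serve with room air alone.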


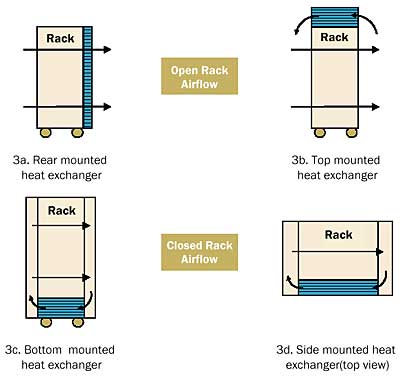

Figure 3. Data center thermal management.

Several viable options based on this strategy have been developed for racks (Figure 3):

- Rear mounted liquid cooled heat exchanger with open rack airflow (Figure 3a)

- Top mounted liquid cooled heat exchanger with open rack airflow (Figure 3b)

- Liquid cooled heat exchangers located internal to a rack or a rack extension (Figures 3c and 3d)

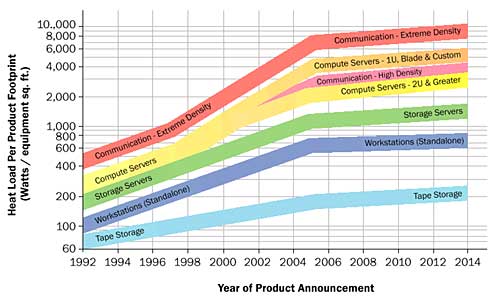

From a facility standpoint, each of the options listed represents a liquid cooled solution adjacent to an air cooled rack, since the liquid delivery system/infrastructure has to be extended to the rack itself instead of to a more remote CRAC unit. The liquid media itself can be water or refrigerant based. These solutions and others will continue to be promoted as shipped power densities increase and as heat loads follow the increases projected by manufacturers of datacom equipment in Figure 4.


Figure 4. New datacom equipment power trends (ASHRAE [2]).

Next Steps

As was the case with the introduction of water into the data center back in the early 1960s, education of the customer will be key to its acceptance and its eventual embrace by the user community. Even though many current customers/facility operators have worked with water, some reeducation will be required, and it will take time for acceptance to occur. As before, once data centers start to deploy water cooling and its reliability is proven, acceptance of water back into the data center will probably follow. Beaty [7] noted that water piping already exists in most datacom facilities in one form or another and that, through an engineered solution to liquid infrastructure design, it is possible to mitigate any perceived risks with techniques such as diversion, containment, monitoring, detection, and isolation. Equally vital is the need to adopt a comprehensive commissioning process to ensure that the design is executed accurately and with a high degree of quality.

There are many challenges in determining the specific piping configuration that should be used to deliver the liquid to local heat exchangers/racks. The manufacturers of liquid cooling equipment need to guide customers toward layout options that will minimize cost, include upgrade options, and offer schemes for detecting and reporting any problems with the liquid systems. Since each data center is unique, these design options should be developed in consultation with end users and facility owners/operators.

Since leaks in a piping system are probably the main concern with returning to liquid cooling, additional emphasis must be placed on designs that allow maintenance and repair without any datacom equipment having to be taken offline. This could be achieved with a combination of piping path diversity and the correct level of isolation. Again, the manufacturer of datacom equipment, working with operators, can help guide the design so that a reliable liquid cooling delivery system is implemented.

Another important consideration involves the ability of the piping configuration to accommodate changes and future deployments of datacom equipment in a seamless manner. The issues include how to add more liquid cooling distribution infrastructure to the existing configuration and also how to avoid any disruption to the facility operation (i.e., new construction in areas that have operational computer equipment). This may require the inclusion of valved branch taps from the piping mains to allow for a plug and play type of system expansion. Another technique could involve the installation of an extended piping mains configuration throughout the facility as a part of the initial construction phase (i.e., extended to areas not initially occupied by operational computer equipment).

Although the thermal management aspects have been discussed herein, the reliability aspects of a liquid cooling system are absolutely essential to the implementation of this technology. The materials used in the liquid loop must be extremely well thought out, with consideration of material compatibility as well as long life, taking into account both corrosion and erosion.

Summary

The industry is at a crossroads. Should it sacrifice performance in the future in order to continue air cooling or switch to a liquid cooling technology that could boost performance and mitigate the thermal management challenges occurring in the data center? The liquid cooling technologies that are generally considered include water cooling, Fluorinert, and refrigerant. Several computer manufacturers have begun offering liquid cooling solutions that enhance processor performance, and several major HVAC equipment manufacturers have offered air/liquid hybrid solutions for improved thermal management in the data center.

The decision to switch to liquid cooling is a critical one for datacom equipment manufacturers and needs to take into account the effect such a move will have on datacom facility infrastructure strategies. The datacom equipment manufacturers recognize the challenge of air cooling, but also must consider the interests and preferences of their customers. The facility cooling infrastructure design team typically is challenged with balancing the datacom equipment requirements (throughout the life cycle of the infrastructure) with the spatial constraints, performance requirements, and preferences of the client (e.g., end user). Since datacom equipment often has a short life cycle (e.g., three to five years) and facility cooling infrastructure has a life cycle that can be four to five times greater than that of the datacom equipment, an important infrastructure attribute is scalability.

One consideration in implementing liquid cooling in the data center is that many air cooled data centers today already employ liquid cooling for the CRAC units. This means that chilled water is already being piped into the datacom equipment room to supply the CRAC units, which acts as a great enabler for future types of cooling, including various liquid cooling methods. The scalable, transitional approach has increased the focus on the location and routing of chilled water piping, valving, and taps that provide the chilled water source for future liquid cooling strategies, including liquid to the rack or to the datacom equipment.

The thermal management issues facing the datacom industry are extremely complex and challenging, but present many opportunities for innovative solutions. If these problems are not solved, then the performance increases that customers have come to expect may not be achievable in the future. We are truly at a crossroads in our industry, one that will require innovative thermal management solutions, including bringing liquid cooling back to the data center.

References

[1] Delia, D., Gilgert, T., Graham, N., Hwang, U., Ing, P., Kan, J., Kemink, R., Maling, G., Martin, R., Moran, K., Reyes, J., Schmidt, R., and Steinbrecher, R., “System Cooling Design for the Water-Cooled IBM Enterprise System/9000 Processors,” IBM Journal of Research and Development, Vol. 36, No. 4, July 1992, pp. 791-803.

[2] Datacom Equipment Power Trends and Cooling Applications, 2005, ASHRAE Special Publication, 112 pages.

[3] Schmidt, R., and Notohardjono, B., “High End Server Low Temperature Cooling,” IBM Journal of Research and Development, Vol. 46, No. 6, Nov. 2002, pp. 739-751.

[4] Fujisaki, A., Suzuki, M., and Yamamoto, H., “Packaging Technology for High Performance CMOS Server Fujitsu GS8900,” IEEE Transactions on Advanced Packaging, Vol. 24, No. 4, Nov. 2001, pp. 464-469.

[5] Pautsch, G., “An Overview on the System Packaging of the CRAY SV2 Supercomputer,” Proceedings of IPACK’01, The Pacific Rim/ASME International Electronics Packaging Technical Conference and Exhibition, 2001, pp. 617-624.

[6] www.apple.com/powermac/design.html

[7] Beaty, D., “Liquid Cooling Friend or Foe,” ASHRAE 2004 Annual Meeting, Technical and Symposium Papers, 2004, pp. 612-620.