The Datacenter Quandary

Two significant challenges facing datacenter operators today are increased power and cooling costs [1]. In a recent survey of datacenter operators, 38% of respondents said they considered both power and cooling as equally important; another 21% indicated that cooling was their major challenge; and 12% cited power consumption as a big concern [2]. Another 2006 study reports datacenter power density as increasing by 15% annually, with power draw per rack having grown eightfold since 1996 [3]. More than 40% of datacenter customers surveyed in that study reported power demand outstripping the supply.


Figure 1. Fully Buffered DIMMs (FBDIMMs) from a leading vendor [8].

What About Memory Cooling?

Studies suggest that nearly 70% of the costs of running a datacenter are related to IT loads [4,5]. This includes infrastructure and maintenance costs for supplying power and removing waste heat. In the past, microprocessor cooling was one of the key contributors to datacenter cooling costs, but advances in memory technology to support the current generations of computers have led to higher storage capacities and faster signaling speeds, resulting in more traffic density and memory power dissipation. As a result, memory cooling budgets can be as high as 35-50% of those for cooling CPUs [6,7]. For smaller systems, such as 4U/6U servers, memory subsystem power is expected to be higher due to more memory usage per server [7].

Types of PC/Server Memory

Various DIMM (Dual Inline Memory Module) cards have been developed for use in computer systems. These come in several form factors depending on the end-use application. For instance, memory in laptop computers conforms to the SODIMM (Small Outline DIMM) specification, while cards meant for desktop PCs and servers conform to the Double-Data-Rate (DDR1/DDR2) DIMM outline. All memory card specifications are driven by the Joint Electron Device Engineering Council (JEDEC).

Much of the memory in servers today is in the unbuffered DDR1/DDR2 format. Industrywide, there is increasing adoption of the Fully Buffered DIMM (FBDIMM) format (Figure 1). FBDIMMs feature an additional device called the Advanced Memory Buffer (AMB), which handles the communication between the DDR2 cells and the rest of the computer. They come with various numbers of DDR2 devices on the card depending on the capacity: JEDEC Raw Card A (9 devices), B and C (18 devices) or Raw Card D (36 devices).

Thermal Requirements and Power

Memory vendors specify the maximum case temperature for the DDR2 packages at 85°C, or at 95°C if the system architecture design supports double refresh capability. For AMBs, vendors often specify that junction temperature be less than 110°C.


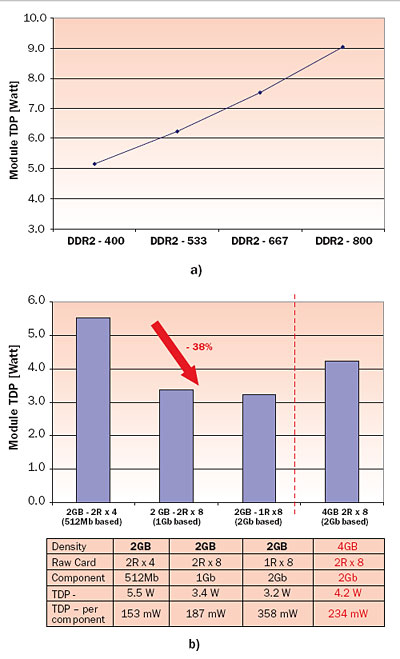

Figure 2. (a) DDR2-667 power dissipation trends [7]. (b) Storage capacity and power levels [7].

Power dissipated by a memory card varies, ranging from ~5 W for DDR2-400 MHz technology to about 9 W for DDR2-800 MHz (Figure 2a). Intuitively, memory power would appear to be driven by storage capacity. However, this is not always the case, as Figure 2b shows. Scanning from left to right, the storage capacity increases from 512 Mb to 2 Gb for the first three cards, yet total power drops from 5.5 to 3.3 W. These cards have 36, 18 and 9 DDR2 packages respectively, with a thermal design power (TDP) of 153, 187 and 358 mW per package. The rightmost column shows an increase in power even though storage capacity is the same as in column 3; this is for an 18 DDR2 package design with a TDP of 234 mW per package and a total power of 4.2 W.
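The card totals quoted for Figure 2b appear to follow from multiplying the package count by the per-package TDP. The short Python sketch below reproduces that arithmetic; it assumes every package dissipates exactly its TDP, and the function name `card_power_w` is introduced here purely for illustration.

```python
# (DDR2 packages per card, TDP per package in mW), as quoted in the text for Figure 2b
CARDS = [
    (36, 153),
    (18, 187),
    (9, 358),
    (18, 234),
]


def card_power_w(packages: int, tdp_mw: float) -> float:
    """Total DDR2 power for one card in watts, assuming each package runs at its TDP."""
    return packages * tdp_mw / 1000.0


for packages, tdp_mw in CARDS:
    total = card_power_w(packages, tdp_mw)
    print(f"{packages:2d} packages x {tdp_mw} mW = {total:.2f} W")
```

The results land close to the totals quoted above, which shows why a card with fewer, denser packages can dissipate less than a lower-capacity card built from many packages.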

For FBDIMMs, the total power is that from the DDR2 packages plus the AMB wattage. In most applications the AMB is expected to consume between 3 and 5 W, meaning FBDIMM power could be as high as 14 W.

Cooling Memory

Thermal engineers must often work with banks of cards set at pitches of 10-12 mm. This is driven by the electrical line distance from the CPU/memory controller to the farthest DRAM cells on the memory.

System height constraints require mechanical designers to place memory cards upright or inclined; while the inclined mode is less common, it imposes stronger constraints on cooling.

Conventional memory cooling designs use forced air convection through the ‘channels’ formed by adjacent cards in an enclosure. In high density designs, thermal engineers specify the use of ducting to force the air through the memory channels. The airflow across the memory banks experiences a pressure drop due to skin friction and miscellaneous losses, which increases roughly parabolically with the airflow. For a given airflow the pressure loss increases with tighter pitch.
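To make the roughly parabolic relationship concrete, the sketch below uses a simple quadratic loss model, pressure drop = k × airflow². The lumped coefficient `K` is a hypothetical placeholder in arbitrary units; a real value depends on card pitch, channel length and ducting, and would come from measurement or simulation.

```python
def pressure_drop(airflow: float, loss_coeff: float) -> float:
    """Quadratic loss model: dP = k * Q**2, with k a lumped (hypothetical) coefficient."""
    return loss_coeff * airflow ** 2


K = 1.0  # hypothetical loss coefficient, arbitrary units

base = pressure_drop(1.0, K)
for multiple in (1, 2, 4):
    ratio = pressure_drop(float(multiple), K) / base
    print(f"{multiple}x airflow -> {ratio:.0f}x pressure drop")
```

Under this model, doubling the airflow quadruples the pressure the fans must overcome. A tighter pitch would correspond to a larger `K`.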

From first principles, the effectiveness of convective heat transfer is directly proportional to the surface area. The airflow past these devices in a given memory channel experiences segmented heat generating areas, which can be inefficient. To alleviate this situation, memory vendors provide a heatspreader spanning all the DDR2/AMB device(s). The heatspreader distributes the thermal energy from the various devices in the flow path and effectively creates a single large source of convective heat transfer.
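The area dependence can be illustrated with Newton's law of cooling, q = h·A·ΔT, rearranged for the surface-to-air temperature rise. In the sketch below, the heat transfer coefficient, power and areas are all hypothetical values chosen only to show the trend, and the coefficient is assumed not to change when the heatspreader is added.

```python
def surface_temp_rise(power_w: float, h_w_per_m2k: float, area_m2: float) -> float:
    """Surface-to-air temperature rise in kelvin from Newton's law of cooling."""
    return power_w / (h_w_per_m2k * area_m2)


H = 50.0                   # hypothetical heat transfer coefficient, W/(m^2 K)
POWER_W = 5.0              # hypothetical card power, W
BARE_AREA_M2 = 0.002       # hypothetical exposed package area, m^2
SPREADER_AREA_M2 = 0.004   # hypothetical heatspreader area, m^2

bare = surface_temp_rise(POWER_W, H, BARE_AREA_M2)
spread = surface_temp_rise(POWER_W, H, SPREADER_AREA_M2)
print(f"Bare packages:    {bare:.1f} K above air")
print(f"With heatspreader: {spread:.1f} K above air")
```

For the same power and the same coefficient, doubling the convecting area halves the temperature rise, which is the effect the heatspreader is meant to exploit.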


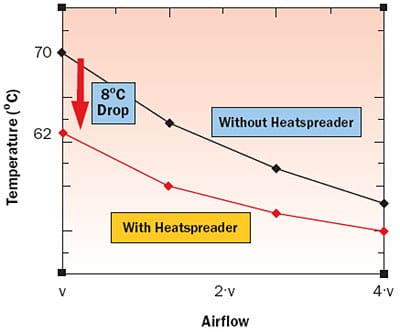

Figure 3. DDR2 case temperatures in FBDIMM cards with and without heatspreaders (v = a reference flow rate).

As Figure 3 shows, the DDR2 temperatures on a fully buffered DIMM bank with heatspreaders at 10 mm pitch can be reduced by ~8°C, although the gains diminish by the time the airflow rate has quadrupled. Physically, this is akin to the asymptotic behavior of a heatsink: beyond a certain airflow, the gains from convective heat transfer diminish, so that the effectiveness of the cooling solution reaches a stable maximum. Comparable reductions are seen for the AMB devices.
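The diminishing return can be sketched with a two-part thermal resistance: a fixed part that airflow cannot change (for example, conduction inside the package) plus a convective part that shrinks as flow increases. Every number below is a hypothetical placeholder, and the power-law exponent of 0.8 is an assumption borrowed from common turbulent-flow correlations, not a value taken from Figure 3.

```python
def temp_rise(power_w: float, flow_multiple: float,
              r_fixed: float = 2.0, r_conv_ref: float = 4.0,
              exponent: float = 0.8) -> float:
    """Temperature rise (K) with a fixed resistance plus a flow-dependent one.

    r_fixed and r_conv_ref are hypothetical resistances in K/W at the
    reference flow rate v; the convective part scales as flow**(-exponent).
    """
    r_conv = r_conv_ref / flow_multiple ** exponent
    return power_w * (r_fixed + r_conv)


POWER_W = 5.0  # hypothetical device power, W
flows = [1, 2, 4, 8]
rises = [temp_rise(POWER_W, f) for f in flows]

for prev_f, f, prev_r, r in zip(flows, flows[1:], rises, rises[1:]):
    print(f"{prev_f}v -> {f}v: temperature drops by {prev_r - r:.1f} K")
```

Each doubling of airflow buys a smaller temperature reduction than the last, and the rise can never fall below the floor set by the fixed resistance.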

Thermal ‘Control’

Typical memory subsystems using DDR1/DDR2 have no in-built thermal diodes for external temperature regulation. FBDIMMs, however, can be regulated indirectly. AMBs on the FBDIMMs can be programmed to respond to preset temperatures. When the AMB diode registers higher temperatures than the presets, it sends alert frames to a memory controller, which in turn regulates the read/write traffic to the memory bank and indirectly controls the power dissipated.
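The regulation loop described above can be sketched as follows. This is only an illustration of the idea: the class and function names, the 100°C preset, the throttle step and the recovery behavior are all hypothetical and do not reflect any vendor's actual AMB or memory controller interface.

```python
from dataclasses import dataclass


@dataclass
class MemoryController:
    """Hypothetical controller that scales allowed read/write traffic (0.0 to 1.0)."""
    traffic_fraction: float = 1.0
    step: float = 0.25          # hypothetical throttle step
    min_fraction: float = 0.25  # hypothetical floor on traffic

    def on_alert(self) -> None:
        """Reduce traffic when the AMB reports an over-temperature alert."""
        self.traffic_fraction = max(self.min_fraction, self.traffic_fraction - self.step)

    def on_clear(self) -> None:
        """Restore traffic gradually once the AMB is back under its preset."""
        self.traffic_fraction = min(1.0, self.traffic_fraction + self.step)


def regulate(amb_temps_c, preset_c, controller):
    """Feed a series of AMB temperature readings through the controller."""
    history = []
    for temp in amb_temps_c:
        if temp > preset_c:   # the AMB would send an alert frame here
            controller.on_alert()
        else:
            controller.on_clear()
        history.append(controller.traffic_fraction)
    return history


print(regulate([95, 105, 108, 102, 98, 96], preset_c=100, controller=MemoryController()))
# prints [1.0, 0.75, 0.5, 0.25, 0.5, 0.75]
```

Because less traffic means less power dissipated, the temperature is controlled only indirectly, at the cost of memory bandwidth while throttling is active.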

Which is Harder: Cooling CPUs or Cooling Memory?

Typical server systems today have multiple isolated CPUs, each dissipating up to 150 W. Considering that each memory card can dissipate up to 9-15 W, and recognizing that server systems typically contain between 8 and 64 memory cards, it is easy to see that memory power can quickly add up to a significant quantity from a cooling design standpoint.
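A quick calculation shows how fast this adds up. The sketch below reads the 9-15 W figure as a per-card maximum (an interpretation, not something stated explicitly) and multiplies it across the quoted range of card counts.

```python
CPU_POWER_W = 150        # per-CPU figure quoted in the text
CARD_POWER_W = (9, 15)   # per-card range, read as watts per memory card
CARD_COUNT = (8, 64)     # range of memory cards per server


def memory_power_range(card_power, card_count):
    """Return (lowest, highest) total memory power in watts."""
    return card_power[0] * card_count[0], card_power[1] * card_count[1]


low, high = memory_power_range(CARD_POWER_W, CARD_COUNT)
print(f"Memory subsystem: {low} W to {high} W")
print(f"Equivalent to {low / CPU_POWER_W:.1f} to {high / CPU_POWER_W:.1f} "
      f"CPUs at {CPU_POWER_W} W each")
```

At the upper end, a fully populated system carries a memory heat load equivalent to several CPUs.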

Memory subsystems often contain cards at tight pitches, making air delivery a tougher challenge. Additionally, in most server designs the CPUs receive ‘fresh’ air from the fans, while the memory subsystems usually receive preheated air.

Unless special design tradeoffs are made early enough, cooling memories can prove to be more challenging than cooling CPU(s).

What’s Ahead?

The past decade has seen an overwhelming adoption of DDR1/DDR2 memory. The industry is now ramping up adoption of FBDIMMs.

While these trends are ongoing, some companies are moving away from FBDIMMs. AMD, for example, has taken FBDIMMs off their roadmap. At the 2007 IDF, it was revealed that major memory manufacturers have no plans to extend FB-DIMM to support DDR3 SDRAM. Instead, only Registered DIMM (RDIMM) for DDR3 SDRAM had been demonstrated [9].

If the RDIMM is adopted, then memory subsystems will start looking like earlier generations of DDR1/DDR2 modules from a mechanical/cooling standpoint. They will also feature a BOB (buffer on board) located on the system motherboard, which is the equivalent of the AMB on FBDIMMs.

Conclusions

Performance needs of current and next-generation server systems call for increased collaboration between the system electrical and thermal/cooling designers. Gone are the days when electrical engineers could design the system and ‘throw it over the fence’ to thermal teams for cooling design. Partnership between these multidisciplinary teams can aid the development of elegant and cost-effective electrical/thermal solutions that meet the compelling demands of today’s end-user environment.

References

[1] Belady, C., “In the Datacenter, Power and Cooling Costs More Than the IT Equipment It Supports,” ElectronicsCooling, Vol. 13, No. 1, Feb. 2007, pp. 24-27.

[2] Anon., “Power Consumption and Cooling in the Datacenter: A Survey,” AMD, 2006.

[3] Humphreys, J., and Scaramella, J., “The Impact of Power and Cooling on Datacenter Infrastructure,” IDC Report, Doc. 201722, 2006.

[4] Anon., “Power and Cooling in the Datacenter,” White Paper, AMD, June 2006.

[5] Rasmussen, N., “Calculating Total Cooling Requirements for Datacenters,” White Paper #25/Rev 1, APC, 2003.

[6] Anon., “Solving Power and Cooling Challenges for High Performance Computing,” White Paper, Intel Corporation, June 2006.

[7] Honeck, M., “The Ring of Fire: DRAM Power Consumption in Infrastructure Applications,” Proc. MEMCON, San Jose, Calif., Sept. 12-14, 2006.

[8] Samsung Electronics Corporation, Korea.

[9] Intel Developer Forum, Santa Clara, Calif., 2007.