Introduction: Evolution of Air and Water Cooling for Electronic Systems

Since the development of the first electronic digital computers in the 1940s, efficient heat removal has played a key role in ensuring the reliable operation of successive generations of computers. In many instances, the trend toward higher circuit packaging density to reduce circuit delay time (i.e., to increase speed) has been accompanied by increased power dissipation.

An example of the effect these trends have had on module heat flux in high-end computers is shown in Figure 1. Heat flux associated with bipolar circuit technologies increased steadily from the outset and rose sharply in the 1980s. By the mid-1960s it had become necessary to introduce hybrid air-to-water cooling in otherwise air-cooled machines to control cooling air temperatures within a board column and thereby manage chip junction temperatures. With the precipitous rise in both chip and module powers throughout the 1980s, IBM determined that the most effective way to manage chip temperatures in multichip modules was indirect water cooling.

The decision to switch from bipolar to Complementary Metal Oxide Semiconductor (CMOS) based circuit technology in the early 1990s led to a significant reduction in power dissipation and a return to totally air-cooled machines. However, as may be seen in Figure 1, this was but a brief respite as power and packaging density rapidly increased, first matching then exceeding the performance of the earlier bipolar machines. These increases in packaging density and power levels have resulted in unprecedented cooling demands at the package, system and data center levels, requiring a return to water cooling.

Indirect Water Cooling for a New Supercomputer: Configuration and Operation

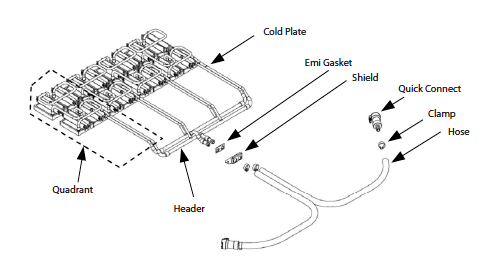

IBM returned to indirect (cold plate) water cooling in 2008 with the introduction of the Power 575 Supercomputing Node [1]. The node, packaged in a super-dense 2U (88.9 mm) form factor, contains 16 dual-core processor modules, and an assembly of 16 cold plates was developed to cool them. The assembly consists of the cold plates (one per processor module); copper tubing that connects the cold plates in series in four groups of four; copper tubing that connects each group of four cold plates, or quadrant, to a common set of supply and return headers; and two flexible synthetic rubber hoses that connect the headers to system level manifolds in the rack housing the nodes (a rack can contain up to 14 nodes). Non-spill poppeted quick connects join the cold plate assembly to the system level manifolds.
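The plumbing topology described above (four quadrants in parallel between the node headers, four cold plates in series within each quadrant) can be captured in a small data model. The sketch below is illustrative only: the names `ColdPlate`, `Quadrant`, `build_node_assembly` and `quadrant_flow` are hypothetical, the 8 L/min node flow is a made-up value, and the even flow split assumes every quadrant presents the same hydraulic resistance, which the text does not state.

```python
from dataclasses import dataclass, field


@dataclass
class ColdPlate:
    module_id: int  # one cold plate per processor module


@dataclass
class Quadrant:
    # Cold plates plumbed in series; water passes through them in list order.
    plates: list[ColdPlate] = field(default_factory=list)


def build_node_assembly(quadrants: int = 4, plates_per_quadrant: int = 4) -> list[Quadrant]:
    """Quadrants sit in parallel between the node supply and return headers."""
    return [
        Quadrant(plates=[ColdPlate(module_id=q * plates_per_quadrant + i)
                         for i in range(plates_per_quadrant)])
        for q in range(quadrants)
    ]


def quadrant_flow(node_flow_lpm: float, assembly: list[Quadrant]) -> float:
    """Flow through each quadrant, and so through every plate in it (they are in series).

    Assumption: identical hydraulic resistance per quadrant, so node flow splits evenly.
    """
    return node_flow_lpm / len(assembly)


assembly = build_node_assembly()
print(sum(len(q.plates) for q in assembly))  # -> 16
print(quadrant_flow(8.0, assembly))  # hypothetical 8 L/min node flow -> 2.0
```

Because the plates within a quadrant are in series, each one carries the full quadrant flow rather than a quarter of it.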

Figure 2. Power 575 supercomputing system.

Figure 2 shows several Power 575 Supercomputer node racks, with one node drawer disengaged from the rack and the power supply/cover propped open so that the node planar can be seen. In the racks shown, the upper section contains the air-cooled bulk power assembly, several compute nodes sit below it, and the bottom of the rack holds two modular water conditioning units (WCUs).

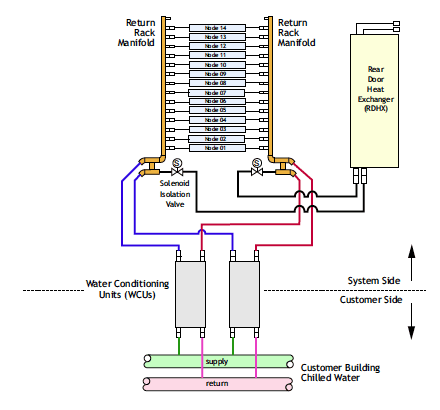

The liquid cooling system utilized by the Power 575 high performance water cooled supercomputer [2] is illustrated schematically in Figure 3. The computing nodes incorporate microprocessors, memory, an input/output assembly, and a power supply such that each node is a modular computer (within the rack’s water cooling and power distributing infrastructure). Any number from one to 14 nodes can be accommodated in a rack, each coupled, yet separable from the rack’s bulk power and liquid cooling infrastructures. The ability to remove a node from the liquid cooling system without adversely affecting the operation of the remaining system is provided by quick connects: fluid couplers that can be uncoupled quickly and easily with virtually no liquid leakage.

Figure 3. Schematic of the Power 575 water cooling system.

The nodes employ a hybrid cooling system comprising both water cooling and air cooling: the microprocessor modules are cooled by water flowing through cold plates, while other components, including power supplies, memory modules, and other supporting electronics, are cooled by forced air flow. The water is supplied to each node in parallel by a rack level supply manifold and is collected downstream of the nodes in a rack level return manifold. In parallel with the nodes, the rack level supply manifold also supplies water to a rear door heat exchanger (RDHX), an air-to-liquid heat exchanger on the air exhaust side of the rack that cools the air exiting the rack to reduce the load on the computer room air conditioning systems. Coolant leaving the RDHX is collected by the rack level return manifold.
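Because the node branches and the RDHX branch run in parallel between the supply and return manifolds, the temperature of the water returned to the WCUs is set by mixing those streams. A minimal energy-balance sketch, assuming constant specific heat and no heat exchange between the manifold and the room, gives the mixed return temperature as the flow-weighted average of the branch outlet temperatures. The function name and the flows and temperatures in the example are hypothetical, not Power 575 data.

```python
def mixed_return_temperature(branches: list[tuple[float, float]]) -> float:
    """Bulk temperature after the branch streams mix in the return manifold.

    Each branch is (mass_flow_kg_s, outlet_temp_c). Assumes constant specific heat
    and an adiabatic manifold, so the result is a flow-weighted average.
    """
    total_flow = sum(m for m, _ in branches)
    if total_flow <= 0:
        raise ValueError("total flow must be positive")
    return sum(m * t for m, t in branches) / total_flow


# Hypothetical: two node branches leaving at 30 °C and an RDHX branch leaving at 24 °C.
print(mixed_return_temperature([(0.25, 30.0), (0.25, 30.0), (0.5, 24.0)]))  # -> 27.0
```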

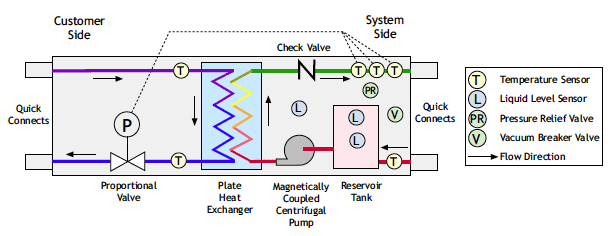

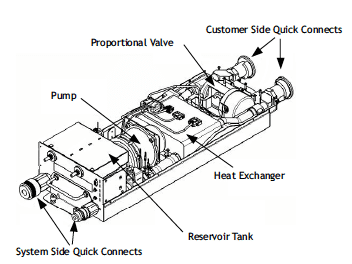

Two modular water conditioning units (WCUs) provide the system side water flow through the rack level manifolds, RDHX, and nodes; the WCUs are connected in a parallel flow arrangement to the rack level supply and return manifolds. Details of the WCU are shown in Figure 4a in schematic form, and in Figure 4b in a partially disassembled isometric drawing. The function of the WCU is to provide and control the flow of cooling water to the system at a specified temperature, pressure and flow rate, using the facility’s chilled water as the ultimate sink for the system’s rejected heat. During normal operation, both WCUs are required to provide the necessary flow and heat transfer capacity to cool the maximum configuration of 14 nodes and the RDHX. If, however, a WCU were to fail (e.g., lose the capability to provide flow or to control coolant temperature), the failed WCU would be shut down, and a solenoid control valve located in the flow path between the rack level manifolds and the RDHX would be closed, thus isolating the RDHX from the water cooling system. The remaining WCU is sized to accommodate the cooling requirements of up to 14 nodes without the RDHX.
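The fault response described above amounts to a small piece of control logic. The sketch below encodes only the two actions the text names: shutting down the failed WCU and closing the solenoid valve that isolates the RDHX. The class and function names are hypothetical, and the double-fault branch is an assumption, since the text does not say what happens if both WCUs fail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackCoolingState:
    wcu_running: tuple[bool, ...]  # one flag per WCU; the Power 575 rack has two
    rdhx_valve_open: bool          # solenoid valve between the rack manifolds and the RDHX


def handle_wcu_fault(state: RackCoolingState, failed: int) -> RackCoolingState:
    """Return the new state after WCU `failed` loses flow or temperature control."""
    running = list(state.wcu_running)
    running[failed] = False  # shut down the failed WCU
    if not any(running):
        # Assumption: the text does not describe a double-fault response.
        raise RuntimeError("no WCU left to cool the nodes")
    # Close the valve so the surviving WCU serves only the nodes, not the RDHX.
    return RackCoolingState(wcu_running=tuple(running), rdhx_valve_open=False)


normal = RackCoolingState(wcu_running=(True, True), rdhx_valve_open=True)
degraded = handle_wcu_fault(normal, failed=0)
print(degraded)
```

Returning a new immutable state rather than mutating the old one keeps the before and after configurations easy to compare in a simulation or test.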

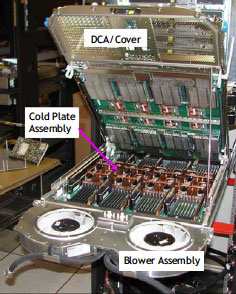

A close-up picture of a Power 575 liquid cooled node with the cover opened is shown in Figure 5. Starting at the front of the rack, the coolant supply and return hoses, each terminated by a quick connect fitting (bottom of Figure 5), are routed along the front of the node such that an operator standing at the front of the rack facing the node would see a supply hose to the left for connection to the rack level supply manifold, also on the left, and similarly a return hose and manifold to the right. The coolant hoses pass through the center of the blower assembly, which provides airflow for the air cooled components within the node. The supply and return coolant hoses are attached to the node level supply and return manifolds, respectively. The coolant is supplied to the 16 modules in four parallel paths (see Figure 6), each consisting of four cold plates in series, with each cold plate coupled to a single microprocessor module by conduction through a thermal interface material [3].
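Because the four cold plates in each path are in series, each plate receives water already warmed by the plates upstream of it. A simple steady-state energy balance, ΔT = P/(ṁ c_p) per plate, shows the effect. This sketch assumes all module power goes into the water, a constant specific heat of about 4180 J/(kg·K), and hypothetical module powers, flow rate, and supply temperature; none of these values are Power 575 specifications.

```python
def series_inlet_temperatures(
    supply_temp_c: float,
    module_powers_w: list[float],
    flow_kg_s: float,
    cp_j_per_kg_k: float = 4180.0,  # approximate specific heat of liquid water
) -> tuple[list[float], float]:
    """Inlet temperature of each cold plate in one series path, plus the path outlet temperature."""
    if flow_kg_s <= 0:
        raise ValueError("flow must be positive")
    inlet_temps = []
    t = supply_temp_c
    for power_w in module_powers_w:
        inlet_temps.append(t)
        # Assumes all module heat is absorbed by the water in this cold plate.
        t += power_w / (flow_kg_s * cp_j_per_kg_k)
    return inlet_temps, t


# Hypothetical path: four 418 W modules, 0.1 kg/s of water supplied at 18 °C.
inlets, outlet = series_inlet_temperatures(18.0, [418.0] * 4, 0.1)
print(inlets, outlet)  # -> [18.0, 19.0, 20.0, 21.0] 22.0
```

The last module in each path sees the warmest inlet water, which is one reason series paths are kept short.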

Figure 6 illustrates an exploded view of a cold plate and manifold assembly for the Power 575 system. The 16 individual cold plates are connected by copper tubing formed so that each cold plate is compliant with respect to the remainder of the assembly, accommodating height and angle mismatches among module lids. The manifolds terminate at hose connections to the supply and return side quick connect fittings; at the interface between the manifold and hose, the assembly crosses the node package boundary, so electromagnetic interference (EMI) shielding and a gasket are provided there.

Figure 4a. Schematic representation of the water conditioning unit (WCU).

Figure 4b. Partially disassembled isometric drawing of the WCU.

Reasons and Advantages for Reintroducing Water Cooling

Although water cooling carries significant cost implications, there are several reasons for, and advantages to, reintroducing it [3]. First, a 34% increase in processor frequency yields roughly a 33% increase in system performance over an equivalent air cooled node (one useful metric for supercomputing nodes is floating point operations per second per watt, or FLOPs/W); this performance increase cannot be achieved in a 2U form factor with traditional air cooling. Second, the processor chips run at least 20 °C cooler when water cooled, which decreases gate current leakage and hence improves power performance; at these cooler junction temperatures the processors can run at a higher frequency and a lower voltage than would be possible at the higher junction temperatures that result from air cooling.
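The frequency and performance figures quoted above imply that nearly all of the clock-speed gain shows up as system performance. The short calculation below makes that explicit and shows how the FLOPs/W metric is formed. Only the 34% and 33% figures come from the text; the helper names and the sustained-FLOPs and power values in the example are hypothetical, and holding node power constant is an assumption made purely for illustration.

```python
def relative_gain(new: float, old: float) -> float:
    """Fractional improvement of `new` over `old` (0.33 means 33% better)."""
    return new / old - 1.0


def flops_per_watt(sustained_flops: float, power_w: float) -> float:
    """Efficiency metric named in the text: sustained floating point operations per second per watt."""
    if power_w <= 0:
        raise ValueError("power must be positive")
    return sustained_flops / power_w


# Figures from the text: a 34% frequency increase yields roughly a 33% performance increase.
FREQUENCY_GAIN = 0.34
PERFORMANCE_GAIN = 0.33

# Fraction of the clock-speed gain realized as system performance (about 0.97).
scaling_efficiency = PERFORMANCE_GAIN / FREQUENCY_GAIN

# Hypothetical nodes at the same power: FLOPs/W then rises by the same 33%.
air_node = flops_per_watt(1.00e12, 1000.0)
water_node = flops_per_watt(1.33e12, 1000.0)
print(round(scaling_efficiency, 3), round(relative_gain(water_node, air_node), 3))
```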

Figure 5. View of the Power 575 supercomputing node with the DCA/cover up.

Finally, energy consumption within the data center is reduced because computer room air conditioners (CRACs) are replaced by more efficient modular water conditioning units (WCUs). For example, Figure 7 presents the results of a case study showing how much less power is required to transfer heat from the IT equipment to the outside air for a water cooled system than for a comparable air cooled system, where both systems deliver equivalent performance (that is, they produce equivalent sustained FLOPs when running an industry-standard test code) [4]. In this example, the water cooled system cluster requires nearly 45% less power than the air cooled system cluster.
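The roughly 45% figure is a relative reduction in the power spent moving heat from the IT equipment to the outside air. A minimal sketch of that comparison follows; the function name is hypothetical, and the kilowatt values in the example are placeholders chosen to reproduce a 45% reduction, not the case-study data behind Figure 7 [4].

```python
def percent_reduction(air_cooled_kw: float, water_cooled_kw: float) -> float:
    """Percentage by which the water cooled power undercuts the air cooled power."""
    if air_cooled_kw <= 0:
        raise ValueError("air cooled power must be positive")
    return 100.0 * (air_cooled_kw - water_cooled_kw) / air_cooled_kw


# Hypothetical cooling-chain powers, not the Figure 7 data.
print(percent_reduction(200.0, 110.0))  # -> 45.0
```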

Figure 6. Exploded view of a cold plate and manifold assembly.

In the case of the Power 575, the increased cost and complexity of a water cooling system were justified by a significant increase in computing performance, both in computing throughput and in computing throughput per unit of electrical energy.

Figure 7. Comparison in the data center power consumption for an air cooled versus water cooled system [4].

References

1. “IBM Power Systems Announce Overview,” April 8, 2008, http://www-03.ibm.com/systems/resources/systems_power_news_announcement_20080408_annc.pdf

2. Ellsworth, Jr., M.J., Campbell, L.A., Simons, R.E., Iyengar, M.K., Schmidt, R.R., and Chu, R.C., “The Evolution of Water Cooling for IBM Large Server Systems: Back to the Future,” Proceedings of the 2008 ITherm Conference, Orlando, FL, USA, May 28-31, 2008.

3. Campbell, L.A., Ellsworth, Jr., M.J., and Sinha, A., “Analysis and Design of the IBM Power 575 Supercomputing Node Cold Plate Assembly,” Proceedings of the 2009 InterPACK Conference, San Francisco, CA, USA, July 19-23, 2009.

4. Ellsworth, Jr., M.J., and Iyengar, M.K., “Energy Efficiency Analyses and Comparison of Air and Water Cooled High Performance Servers,” Proceedings of the 2009 InterPACK Conference, San Francisco, CA, USA, July 19-23, 2009.

![Figure 7. Comparison in the data center power consumption for an air cooled versus water cooled system [4].](https://electronics-cooling.com/wp-content/uploads/2009/08/graph.png)