The International Technology Roadmap for Semiconductors [1] predicts that high-performance processor power density will more than double by the year 2024, while over the same period the allowable junction temperature will decrease from 90°C to 70°C, reducing the junction-to-ambient temperature difference to nearly half. This combined challenge to cooling will require the total thermal resistance to decrease by almost a factor of four. Even if these changes are only half as great, significant improvements in cooling will be required.
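
The factor of four follows directly from the definition of thermal resistance on a per-area basis. As a rough check, assuming power density exactly doubles and the junction-to-ambient difference exactly halves (the symbols $R''$, $q''$ and $\Delta T$ are introduced here for illustration and are not the roadmap's notation):

$$R'' = \frac{\Delta T}{q''}, \qquad \frac{R''_{\text{future}}}{R''_{\text{present}}} = \frac{\Delta T_{\text{future}} / \Delta T_{\text{present}}}{q''_{\text{future}} / q''_{\text{present}}} \approx \frac{1/2}{2} = \frac{1}{4}$$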

Yet independent of increases in power density, advantages to improved cooling can be demonstrated. Leakage current has become an increasing fraction of processor power with each technology node. As leakage current is strongly temperature dependent, processor power dissipation can be reduced through improved cooling. Achievable processor frequency depends on both temperature and voltage. The voltage dependence is approximately proportional, while the temperature dependence of performance has weakened with each technology node. In the near future, the temperature dependence of performance may almost disappear.

Through improved cooling, temperature can be reduced while voltage and frequency are increased, resulting in higher system performance. For a given cooling configuration, a combination of voltage and temperature exists which maximizes system performance per watt. At higher powers, additional performance is achieved at the expense of energy. Such increases may be limited by electromigration and other failure mechanisms, which are functions of both temperature and voltage. The temperature dependence of the leakage current has been increasing through recent technology nodes. Significant decreases in energy consumption and/or increases in processor frequency can be achieved through improved cooling.

Leakage Current Effects

Leakage current is current which bypasses the transistor gate, which is always present regardless of whether the gate is active, and which increases with both voltage and temperature. It can be considered as wasted energy, unlike energy used to perform computation.

Leakage current effects have increased as semiconductor lithography has progressed well into the subcontinuum range [2]. In some ASICs, leakage current can be over half of the total power. As the industry moves from 45nm to 32nm to 22nm and beyond, designs are intended to limit leakage current to about one-third of total power dissipation. Some of this will be achieved by lower junction temperatures. The dependence of leakage current on junction temperature is also becoming stronger. At 65nm, leakage current would change by a factor of two over a temperature range of about 45°C; at 32nm, the range may shrink to 22°C [3]. So the energy savings due to a given temperature reduction will increase with technology improvements. From the viewpoint of effectiveness of improved cooling, a relevant metric is the percent reduction in total power versus temperature. Recent observations of processors on the market show this to range from about 0.5%/°C up to almost 2%/°C [4].
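
The doubling intervals and the one-third leakage target quoted above can be combined into a rough estimate of this metric. The sketch below assumes that only leakage power varies with temperature and that it follows a simple exponential; the function name and this simplification are illustrative assumptions, not taken from the cited references.

```python
import math

def total_power_sensitivity(leakage_fraction, doubling_interval_c):
    """Fractional change in total power per degC, assuming only leakage
    varies with temperature and doubles every `doubling_interval_c` degC."""
    k = math.log(2) / doubling_interval_c  # exponential coefficient, 1/degC
    return leakage_fraction * k

# Leakage at one-third of total power, doubling every 45 degC and every 22 degC
for interval in (45.0, 22.0):
    s = total_power_sensitivity(1.0 / 3.0, interval)
    print(f"doubling every {interval:.0f} degC: {100 * s:.2f} %/degC")
```

Under these assumptions the estimate comes out at about 0.5%/°C for the 45°C interval and about 1%/°C for the 22°C interval, which sits inside the observed range.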

Improved Cooling

Ellsworth and Simons [5] discussed a variety of package cooling improvements which could be used to cool up to several hundred W/cm². These began with traditional air cooling of a lidded package as shown in Figure 1. As the thermal path through the package substrate offers a high resistance, nearly all the heat flows upwards and outwards while spreading through the package lid and heatsink base, then finally to the heatsink fins and into the air stream. The heat flow path is through the following components:

- Silicon die

- Thermal interface material 1 (TIM1)

- Package lid

- Thermal interface material 2 (TIM2)

- Heatsink base

- Heatsink fins

- Coolant
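
To first order, this path can be treated as a chain of thermal resistances in series. The sketch below is a one-dimensional illustration only: the per-layer resistance values and the 30°C coolant temperature are hypothetical placeholders, not the values of Figure 2, and heat spreading and power nonuniformity are ignored.

```python
# Hypothetical per-layer thermal resistances in degC/W (placeholders only,
# NOT the values from Figure 2).
stack = [
    ("silicon die", 0.02),
    ("TIM1", 0.05),
    ("package lid", 0.03),
    ("TIM2", 0.04),
    ("heatsink base", 0.03),
    ("heatsink fins to coolant", 0.10),
]

def junction_temperature(coolant_temp_c, power_w, layers):
    """One-dimensional series model: Tj = T_coolant + P * sum(R_i)."""
    total_r = sum(r for _, r in layers)
    return coolant_temp_c + power_w * total_r

# 240 W into a hypothetical 30 degC coolant stream
print(round(junction_temperature(30.0, 240.0, stack), 1))
```

Removing or improving a layer (for example, replacing an organic TIM1 with a metallic one) lowers the sum and therefore the junction temperature at a given power.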

Nonuniformity of power dissipation within the die increases package thermal resistance above that of a package with a uniformly powered die by a significant factor, typically two or more [6, 7]. For a given nonuniform power distribution, this factor will increase as the thermal path is improved, moving asymptotically toward the ratio of the highest local power density to the average power density.

Recent developments in packaging and cooling largely consist of replacing traditional materials with those having higher thermal conductivity. In the package, organic TIM1 with metallic or ceramic fillers can be replaced by a completely metallic material, typically indium or indium alloy. Copper package lids can be replaced by composite materials, such as aluminum-graphite, copper-graphite, aluminum-diamond or silicon carbide-diamond. External to the package, heatsinks feature embedded heatpipes or vapor chambers, and in some cases the vapor chamber shares a continuous vapor/liquid space with heatpipes extending into the fins. A cold plate can replace the heatsink or become an integral part of the package, acting as the lid. Ultimately, coolant can contact the silicon with no interfaces between. At the system level, liquid can be used to transfer heat to air through a liquid-to-air heat exchanger, to another liquid through an interface or liquid-to-liquid heat exchanger, or the liquid can flow across the system boundary to transfer heat to the data center and beyond. Such technologies are described in detail by Kang and Miller [8].

Performance Modeling

The processor has a nominal power dissipation of 240 W, operating at nominal conditions of 0.85 V and 95°C.

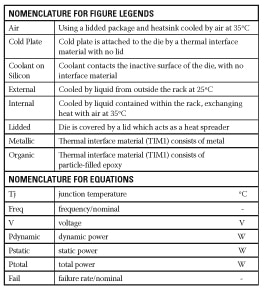

Thermal resistance components of various combinations of packaging and cooling are shown in Figure 2. Values shown are typical for a die approximately one square inch (645 mm²) in modern flip-chip packaging. Design variations consist of the following:

Package thermal interface material (TIM1) – The TIM1 can be organic or metallic in a traditional lidded package.

Coolant on silicon removes the TIM1 and lid or cold plate, simplifying package construction.

Package types can be lidded, which requires a second thermal interface material (TIM2), or can use a cold plate as the lid, which eliminates TIM2.

The system cooling configuration can be one of three types:

Internal liquid, in which liquid is used to transfer heat to the air stream.

External liquid, in which heat is transferred to liquid without air as an intermediary. In this case, the external liquid temperature is fixed at 25°C, slightly above the allowable maximum dewpoint of a typical data center.

Electrical performance is based on a generic technology representative of recent and upcoming process nodes, and is modeled by a few simple assumptions:

In the region of interest on gate-dominated paths, achievable frequency is assumed to be proportional to voltage:

$$\mathrm{Freq} \propto V \tag{1}$$

Dynamic power is assumed to be proportional to frequency times voltage squared:

$$P_d \propto \mathrm{Freq} \cdot V^2 \tag{2}$$

So dynamic power becomes proportional to voltage cubed:

$$P_d \propto V^3 \tag{3}$$

Static power (leakage current) is also proportional to voltage cubed.

At nominal conditions, static power is fixed at 35% of total power:

$$\frac{P_s}{P_t} = \frac{P_s}{P_s + P_d} = 0.35 \tag{4}$$

Static power is assumed to change by a factor of two every 22°C, expressed by:

$$P_s \propto \exp[0.0315\,(T_j - 95)] \tag{5}$$

At nominal frequency (and voltage) the temperatures of various packaging components are shown in Figure 3. While most of the temperature reduction is due to improved cooling, some is due to the reduction in static (leakage) power. This positive feedback converges toward a lower operating temperature.
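
The feedback between temperature and leakage can be illustrated with a short fixed-point iteration. This is a minimal sketch under stated assumptions, not the study's actual model: the exponential temperature factor (doubling every 22°C) is applied to the static term only, the coolant is taken at the 25°C external liquid temperature, and the reference thermal resistance `R_NOM` is a hypothetical value chosen so that the nominal case closes at 95°C and 240 W.

```python
import math

P_NOM = 240.0     # W, nominal total processor power (from the text)
V_NOM = 0.85      # V, nominal voltage
T_NOM = 95.0      # degC, nominal junction temperature
LEAK_FRAC = 0.35  # static share of total power at nominal conditions
K_T = 0.0315      # 1/degC, static power doubles every 22 degC

def processor_power(v, tj):
    """Total power: both terms scale with V^3; only static power varies with Tj."""
    scale = (v / V_NOM) ** 3
    dynamic = (1.0 - LEAK_FRAC) * P_NOM * scale
    static = LEAK_FRAC * P_NOM * scale * math.exp(K_T * (tj - T_NOM))
    return dynamic + static

def solve_junction_temp(v, r_total, t_coolant, tol=1e-6, max_iter=200):
    """Fixed-point iteration on Tj = T_coolant + R * P(V, Tj)."""
    tj = T_NOM
    for _ in range(max_iter):
        tj_new = t_coolant + r_total * processor_power(v, tj)
        if abs(tj_new - tj) < tol:
            return tj_new
        tj = tj_new
    raise RuntimeError("no convergence (possible thermal runaway)")

# Hypothetical reference case: 25 degC coolant, resistance set so nominal closes.
T_COOLANT = 25.0
R_NOM = (T_NOM - T_COOLANT) / P_NOM

# Halve the thermal resistance at nominal voltage and re-solve.
tj_improved = solve_junction_temp(V_NOM, 0.5 * R_NOM, T_COOLANT)
p_improved = processor_power(V_NOM, tj_improved)
print(f"Tj = {tj_improved:.1f} degC, power = {p_improved:.0f} W")
```

With dynamic power held constant, halving the resistance drops the junction temperature by more than half of the original 70°C rise, because the lower temperature also removes leakage power.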

Reliability Concerns

One practical limit to increasing power is reliability, which is typically dominated by gate oxide failure. The failure rate is assumed to change by a factor of two every 10°C (typical of modern technology nodes, and coincidentally equal to an often misapplied rule of thumb which has been around for decades) and/or every 15 mV in the present study, resulting in:

$$\mathrm{Fail} \propto \exp[0.0693\,(T_j - 95) + 46.4\,(V - 0.85)] \tag{6}$$
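
Holding this failure expression at its nominal value gives a direct trade between junction temperature and voltage. The sketch below simply solves the exponent for zero; the function names are illustrative, and the 75°C operating point is a hypothetical example rather than a result from the study.

```python
import math

V_NOM, T_NOM = 0.85, 95.0  # nominal voltage (V) and junction temperature (degC)
K_T = 0.0693               # 1/degC, failure rate doubles every 10 degC
K_V = 46.4                 # 1/V, failure rate doubles every 15 mV

def relative_failure_rate(v, tj):
    """Gate oxide failure rate relative to the nominal operating point."""
    return math.exp(K_T * (tj - T_NOM) + K_V * (v - V_NOM))

def max_voltage(tj):
    """Highest voltage that keeps the failure rate at or below nominal."""
    return V_NOM - K_T * (tj - T_NOM) / K_V

v75 = max_voltage(75.0)  # hypothetical junction temperature of 75 degC
print(f"V_max = {v75:.4f} V, frequency gain = {100 * (v75 / V_NOM - 1):.1f} %")
```

Each 10°C of junction temperature reduction buys roughly 15 mV of voltage at equal reliability, or a little under 2% in frequency if frequency is proportional to voltage.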

As the cooling performance is increased and thermal resistance is reduced, the reliability limit is reached at decreasing temperatures, higher voltages and slightly higher power than nominal. Ultimately leakage current is reduced to only one-seventh of its nominal value.

Frequency Improvements

Permitting the processor to operate at the highest combination of temperature and voltage, while maintaining gate oxide reliability equal to or better than that of the nominal case, results in frequency improvements as high as 19%, as shown in Figure 4. The slopes of the lines from the origin to the operating point are proportional to computational efficiency, defined as frequency divided by power. In this case, as voltage is lowered, efficiency improves monotonically, with packaging and cooling technology making very little difference at the lowest values.

System Performance

If the processor provided all or nearly all of the power dissipation in a system, very low voltage (and frequency) operation would be most efficient. But processors consume, in all but a few cases, less power than memory. Volume systems typically feature fewer dual inline memory modules (DIMMs) per socket than high-end systems. High-end systems have been described as large boxes of memory with other hardware to drive it, and this is a reasonable description in terms of power dissipation, volume occupied and component cost.

Adding a constant memory power load changes power per socket and system performance to that of Figure 5. In this case memory power is equal to twice that of nominal processor power, typical of a modern high-end system. System performance is assumed to scale with the square root of processor frequency. This exponent relating processor frequency and system performance is at the low end of the range seen, which approaches a value of one at the high end and is strongly dependent on the program being exercised.

Unlike the processor-only example, an optimum operating point, at which the slope of performance versus power measured from the origin is maximized, falls close to the midpoint of the range evaluated. This is slightly different for each packaging and cooling configuration. Dividing performance by power provides computational efficiency, which is shown compared to the nominal configuration in Figure 6. Here the optimum operating condition shifts to lower power as cooling is improved.
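
The existence of an interior optimum once a constant memory load is added can be reproduced with a deliberately simplified sweep. The sketch below assumes processor power scales purely with voltage cubed (temperature feedback on leakage is ignored), frequency is proportional to voltage, and the swept range of 0.50 to 1.20 times nominal voltage is arbitrary; none of these choices are taken from Figures 5 or 6.

```python
P_PROC_NOM = 240.0        # W, nominal processor power
P_MEM = 2.0 * P_PROC_NOM  # W, constant memory load (twice nominal processor power)
PERF_EXPONENT = 0.5       # system performance ~ frequency ** 0.5

def proc_power(v_ratio):
    """Processor power versus V/V_nominal; V^3 scaling only (simplification)."""
    return P_PROC_NOM * v_ratio ** 3

def system_efficiency(v_ratio):
    """System performance per watt, normalized to the nominal operating point."""
    perf = v_ratio ** PERF_EXPONENT  # frequency taken as proportional to voltage
    power = (proc_power(v_ratio) + P_MEM) / (P_PROC_NOM + P_MEM)
    return perf / power

# Sweep V/V_nominal from 0.50 to 1.20 in steps of 0.01
ratios = [0.50 + 0.01 * i for i in range(71)]
best = max(ratios, key=system_efficiency)
print(f"best V/V_nom = {best:.2f}, efficiency = {system_efficiency(best):.3f}")
```

Under these simplifications the optimum lands near 74% of nominal voltage. With `P_MEM` set to zero the efficiency instead rises monotonically as voltage falls, matching the processor-only behavior. The study's own curves include temperature-dependent leakage and reliability limits, so the numbers will differ.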

Total Cost of Ownership

While maximization of computational efficiency in terms of energy has been demonstrated, the total cost of ownership should be considered. Until just a few years ago, capital expenditure on computing equipment dominated energy cost, and the two expenses were the concern of different organizations with little communication. Recently higher energy costs, much higher energy usage and environmental concerns have resulted in a more holistic view of data center cost and efficiency.

In the present study, computing equipment cost is fixed to be equal to energy cost. At 11.4 cents per kilowatt-hour, a continuous watt of electricity costs almost exactly one dollar per year. This is close to an average data center’s electricity cost, so as a rough approximation the lifetime energy cost of a server in dollars is equal to its average power consumption in watts times its operational lifetime in years. Equal capital and energy costs could thus correspond, for example, to a server with a five-year lifetime and a capital cost in dollars equal to five times its power consumption in watts.
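
The dollar-per-watt-year rule of thumb is easy to check. The sketch below assumes an 8760-hour (365-day) year; the function names and the 720 W example server are illustrative.

```python
HOURS_PER_YEAR = 8760  # 365-day year (assumption)

def annual_cost_per_watt(price_per_kwh):
    """Dollars per year for one continuous watt at the given price per kWh."""
    return price_per_kwh * HOURS_PER_YEAR / 1000.0

def lifetime_energy_cost(avg_power_w, years, price_per_kwh=0.114):
    """Lifetime energy cost in dollars for a constant average power draw."""
    return avg_power_w * years * annual_cost_per_watt(price_per_kwh)

print(f"{annual_cost_per_watt(0.114):.4f} dollars per watt-year")
print(f"{lifetime_energy_cost(720.0, 5):.0f} dollars over five years at 720 W")
```

At 11.4 cents per kilowatt-hour the result is within about 0.2% of one dollar per watt-year, so a 720 W server running for five years costs roughly 3600 dollars in electricity.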

Figure 7 plots normalized total cost of ownership (TCO) versus economic computational efficiency, a term invented for this study and defined as system performance divided by TCO. All optimum values feature TCO below the nominal value, and improvement in economic computational efficiency can be as high as 7%. As this is a simple model, some factors are neglected, which will alter the results if included. The cost of floorspace may be an additional factor, and tax incentives and deductions will alter TCO calculations. Additional equipment external to the computing hardware may need to be added or removed.
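
Economic computational efficiency can be sketched in the same normalized terms. The code below assumes the capital cost is held fixed at the nominal lifetime energy cost, so that normalized TCO is the average of one and the power ratio. This is one reading of the equal-cost assumption, and the 657 W operating point is a hypothetical example, not a value from Figure 7.

```python
def normalized_tco(system_power_w, nominal_system_power_w):
    """TCO relative to nominal, with capital cost fixed at the nominal
    lifetime energy cost (assumption)."""
    capital = 1.0                                      # in units of nominal energy cost
    energy = system_power_w / nominal_system_power_w   # scales with power draw
    return (capital + energy) / 2.0

def economic_efficiency(perf_ratio, system_power_w, nominal_system_power_w):
    """System performance divided by TCO, both normalized to nominal."""
    return perf_ratio / normalized_tco(system_power_w, nominal_system_power_w)

# Hypothetical case: same performance, system power reduced from 720 W to 657 W
print(f"{economic_efficiency(1.0, 657.0, 720.0):.3f}")
```

Because only the energy half of TCO responds to power, a given power saving improves economic efficiency by about half as much as it improves energy efficiency, consistent with the smaller gains reported for TCO.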

Conclusions

A case study showing energy reduction and/or performance maximization through improved cooling of a typical modern high-end server has been performed. In both energy efficiency and economic computational efficiency or TCO minimization, an optimum frequency (and voltage) exists for each packaging and cooling configuration. As cooling is improved, the advantage becomes more favorable. Energy computational efficiency can be increased to 15% above nominal, and economic computational efficiency can be increased to 7% above nominal. More detailed models will shift the values but should follow the same general trend. Lower temperature operation of processors is an available design option with immediate rewards.

Acknowledgments

This article is based on the author’s keynote presentation of the same title at “The Heat Is On: Performance and Cost Improvements through Thermal Management Design,” 21st March, 2011 [9]. The author thanks his colleagues Vadim Gektin (now at Huawei), who provided Figure 1, and Bruce Guenin, who invited him to write this article.

References

[1] International Technology Roadmap for Semiconductors, 2010, http://www.itrs.net/Links/2010ITRS/Home2010.htm

[2] N. S. Kim, T. Austin, D. Blaauw, T. Mudge, K. Flautner, J. S. Hu, M. J. Irwin, M. Kandemir and V. Narayanan, 2003, “Leakage Current: Moore’s Law Meets Static Power,” IEEE Computer, Vol. 36, No. 12, pp. 68-75

[3] S. Mukhopadhyay, A. Raychowdhury and K. Roy, 2003, “Accurate Estimation of Total Leakage Current in Scaled CMOS Logic Circuits Based on Compact Current Modeling,” Proceedings of the Design Automation Conference, pp. 169-174

[4] J. Wei, 2007, “Challenges in Package Cooling of High Performance Servers,” Proceedings of the 2007 International Electronic Packaging Technical Conference and Exhibition, IPACK2007-33637, ASME

[5] M. J. Ellsworth and R. E. Simons, 2005, “High Powered Chip Cooling – Air and Beyond,” ElectronicsCooling, Vol. 11, No. 3, https://electronics-cooling.com/2005/08/high-powered-chip-cooling-air-and-beyond/

[6] J. Deeney, 2002, “Thermal Modeling and Measurement of Large High Power Silicon Devices with Asymmetric Power Distribution,” Proceedings of the 35th International Symposium on Microelectronics, IMAPS

[7] D. Copeland, 2005, “64-bit Server Cooling Requirements,” Proceedings of the Twenty-First Annual IEEE Semiconductor Thermal Measurement and Management Symposium, pp. 94-98

[8] S. Kang and D. Miller, 2011, “Thermal Management Architectures for Rack Based Electronics Systems,” Proceedings of The Heat Is On: Performance and Cost Improvements through Thermal Management Design, MicroElectronics Packaging and Test Engineering Council (MEPTEC)

[9] D. Copeland, 2011, “Energy Reduction and Performance Maximization through Improved Cooling,” Proceedings of The Heat Is On: Performance and Cost Improvements through Thermal Management Design, MicroElectronics Packaging and Test Engineering Council (MEPTEC)