by MP Divakar, PhD, Stack Design Automation

Technical Editor, Electronics Cooling Online

By now the tech community, especially the readers of Electronics Cooling, is familiar with Google’s DeepMind blog, which announced a true breakthrough: up to 40% savings in the energy used for data center cooling. The savings come at a time of ever-increasing demand for compute and storage services from connected consumers and things. Google’s metrics show that, for the same amount of energy, its data centers now deliver over 350% more compute power than they did five years ago.

The primary metric used to quantify the energy savings is Power Usage Effectiveness (PUE), a measure (first defined by The Green Grid in 2007) of how efficiently a data center uses energy. It is defined as the ratio of total facility energy to IT equipment energy:

$$\mathrm{PUE} = \frac{\text{Total Facility Energy}}{\text{IT Equipment Energy}}$$

The Green Grid defined the inverse of PUE as DCiE (Data Center Infrastructure Efficiency): the IT equipment energy divided by the total facility energy. But the industry seems to prefer PUE over DCiE, which led The Green Grid to update the document in 2012, making PUE the main focus of its data center energy metrics.
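For readers who want to check the arithmetic, here is a minimal Python sketch of the two definitions, plus the non-IT overhead they imply. The function names and the annual energy figures are hypothetical and chosen only for illustration; real values would come from facility-level and IT-level metering over the same period.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0 or total_facility_kwh < it_equipment_kwh:
        raise ValueError("need IT energy > 0 and total facility energy >= IT energy")
    return total_facility_kwh / it_equipment_kwh

def dcie(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """DCiE: IT equipment energy / total facility energy, i.e. the inverse of PUE."""
    return 1.0 / pue(total_facility_kwh, it_equipment_kwh)

def overhead_per_it_kwh(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Non-IT energy (cooling, power conversion, lighting, ...) spent per kWh of IT energy."""
    return pue(total_facility_kwh, it_equipment_kwh) - 1.0

# Hypothetical annual meter readings, for illustration only.
total_kwh = 12_000_000.0
it_kwh = 10_000_000.0
print(f"PUE      = {pue(total_kwh, it_kwh):.2f}")       # PUE      = 1.20
print(f"DCiE     = {dcie(total_kwh, it_kwh):.1%}")      # DCiE     = 83.3%
print(f"overhead = {overhead_per_it_kwh(total_kwh, it_kwh):.2f} kWh per IT kWh")  # overhead = 0.20 kWh per IT kWh
```

Because the overhead is simply PUE minus 1, a PUE change that looks small, such as 1.20 to 1.12, removes 40% of the non-IT energy spent per kWh of IT energy.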

However, PUE is far from perfect: it measures only the efficiency of the building infrastructure housing the data center and provides no granularity about the efficiency of the IT equipment itself. Advances in power monitoring enabled by smart cabinets, enclosures, and PDUs (Power Distribution Units, or power strips) can now provide more granular data on the energy consumption of the IT equipment. Furthermore, chassis- and processor-level power monitoring, enabled by digital power management architectures in data center network appliances, provides even more detailed information on power consumption. In short, it is now possible to trace power consumption in a data center from the building distribution point down to an individual processor on the motherboard of a network server or switch. This is accomplished by DCIM (Data Center Infrastructure Management) software, which can provide more accurate metrics to quantify infrastructure power consumption and thus makes a more accurate computation of PUE possible.

The deficiencies of PUE and DCiE, together with advances in DCIM, have led to the definition of more specific energy metrics: Server Compute Efficiency (ScE), partial PUE (pPUE), Data Center Compute Efficiency (DCcE), and a few others dealing with water usage, carbon effectiveness, etc.
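As an illustration of how granular metering feeds these finer-grained metrics, the sketch below computes a partial PUE for one metered boundary, assuming the commonly used form pPUE = (IT energy + infrastructure energy inside the boundary) / IT energy inside the boundary. The `Zone` class, its fields, and the per-rack PDU readings are hypothetical; in practice a DCIM system would supply the readings.

```python
from dataclasses import dataclass

@dataclass
class Zone:
    """Hypothetical metered boundary, e.g. one data hall or one cooling loop."""
    name: str
    rack_pdu_kwh: list[float]   # per-rack IT energy reported by metered PDUs
    infrastructure_kwh: float   # cooling and power-distribution energy inside the boundary

    def it_kwh(self) -> float:
        # IT energy for the zone is the sum of its rack-level PDU readings.
        return sum(self.rack_pdu_kwh)

def partial_pue(zone: Zone) -> float:
    """pPUE = (IT energy + infrastructure energy within the boundary) / IT energy."""
    it = zone.it_kwh()
    if it <= 0:
        raise ValueError(f"zone {zone.name!r} has no metered IT energy")
    return (it + zone.infrastructure_kwh) / it

hall_a = Zone("hall-A", rack_pdu_kwh=[410.0, 395.0, 420.0, 375.0], infrastructure_kwh=240.0)
print(f"{hall_a.name}: pPUE = {partial_pue(hall_a):.2f}")  # hall-A: pPUE = 1.15
```

Computing pPUE per zone in this way lets an operator see which hall or cooling loop contributes most to the facility-wide overhead, which a single PUE number cannot show.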

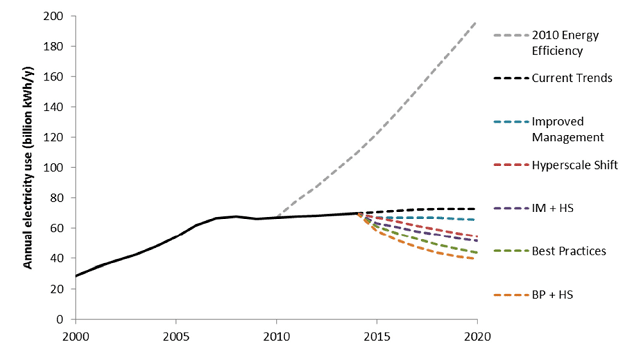

It is worth noting how we arrived at the era of mega data centers, also known as hyperscale data centers. On one hand, the convenience of accessing one’s data anywhere in the world through the cloud drew billions of users; on the other, the better energy metrics of large data centers drove companies to abandon enterprise and small data center networks and move to the cloud hosted in larger, mega data centers. A study published by Lawrence Berkeley National Laboratory (LBNL) confirmed that moving to cloud computing reduces the energy consumed by factors such as usage habits and wasteful or redundant computer operations.

LBNL efficiency scenarios: Improved Management (IM), Best Practices (BP), and Hyperscale Shift (HS)

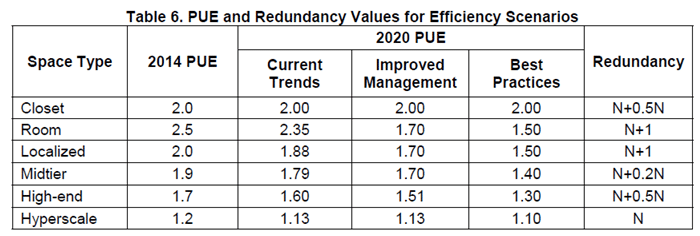

The LBNL study also published PUE and network redundancy values for the efficiency scenarios, shown in the table below. The formulas in the “Redundancy” column give the total number of servers needed for a data center containing “N” functional servers. Redundancy of “N+1” means that there is one redundant server in each data center, while redundancy of “N+0.1N” means that there is one redundant server for every 10 functional servers. As the table shows, hyperscale data centers operate with the lowest PUE and redundancy numbers. Achieving even lower PUE numbers by going beyond best practices (those known to date or disclosed publicly) seems to have been the motivation of hyperscale data center operators like Google, Facebook, Amazon, and others.
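The redundancy formulas are simple arithmetic, but a quick sketch shows how differently they scale with data center size. The helper names are hypothetical, and rounding the N+0.1N spare count up when N is not a multiple of 10 is an assumption of this sketch, not something stated in the LBNL table.

```python
def servers_n_plus_1(n: int) -> int:
    """Total servers under N+1: a single redundant server for the data center."""
    return n + 1

def servers_n_plus_tenth(n: int) -> int:
    """Total servers under N+0.1N: one redundant server per 10 functional servers.

    Rounding the spare count up (integer ceiling division) is an assumption.
    """
    spares = (n + 9) // 10  # ceiling of n / 10 without floating point
    return n + spares

for n in (10, 1000):
    print(f"N={n}: N+1 -> {servers_n_plus_1(n)}, N+0.1N -> {servers_n_plus_tenth(n)}")
# N=10: N+1 -> 11, N+0.1N -> 11
# N=1000: N+1 -> 1001, N+0.1N -> 1100
```

For 10 functional servers both schemes add a single spare, but for 1,000 servers N+1 adds 0.1% extra hardware while N+0.1N adds 10%.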

Data center power and cooling are two of the biggest challenges facing IT organizations today. To accommodate continued growth and future expansion, cooling system components such as chillers, cooling towers, computer room air handling units (CRAHs), computer room air conditioning units (CRACs), etc., need to be monitored, controlled, and optimized for minimum energy consumption.

Google’s DeepMind blog does not provide any information on how the data center’s cooling components were managed, tracked, and controlled, i.e., on its DCIM. Information on cooling techniques (for example, forced-air vs. immersion cooling, the latter having the lowest PUE among cooling techniques) is also lacking.

However, Google does publish some information on how it achieves data center efficiency, including an average PUE across its data centers that has steadily decreased from 1.20 in 2008 to 1.12 in 2016. The following points are of particular interest to electronics cooling engineers:

- Google has published some, if partial, information on how its servers were designed for optimal power consumption and hence minimal thermal dissipation. Very efficient power converters and the elimination of two AC/DC converters led to servers and switches that consume less energy (backup batteries are placed directly on the server racks!). More on Google’s server design can be found in its publication Energy-Proportional Computing.

- Google prefers to run the server fans at the minimum RPM needed to maintain a given temperature.

- The cold aisle temperature is set to 80°F (no longer the chilly 64°F), resulting in average hot aisle temperatures of 115°F. Note that Facebook prefers an even higher cold aisle temperature of 85°F!

- Google uses ducting for hot air containment and even uses plastic curtains (like the ones you see in grocery store cold aisles!) to prevent hot and cold air from mixing.

- Thermal modeling takes a front seat! The locations of CRAHs, CRACs, chillers, etc. are ‘floor-planned’ using CFD tools in the design phase, and their performance is further optimized during the data center’s operation phase.
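Two of the practices above, a large cold-to-hot aisle temperature rise and slow-running fans, are linked by basic heat transfer. The sketch below estimates the airflow needed to remove 1 kW at the quoted 80°F/115°F aisle temperatures using the sensible-heat relation Q = ṁ·cp·ΔT, and applies the idealized fan affinity law (fan power proportional to speed cubed). The air density, specific heat, and the 80% speed example are approximate assumptions; this is an order-of-magnitude illustration, not a description of Google’s control logic.

```python
# Approximate air properties; real values vary with temperature, altitude, and humidity.
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_PER_KG_K = 1005.0
M3_PER_S_TO_CFM = 2118.88  # 1 m^3/s is about 2118.88 cubic feet per minute

def f_to_c(temp_f: float) -> float:
    """Convert an absolute temperature from Fahrenheit to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0

def airflow_cfm_per_kw(cold_aisle_f: float, hot_aisle_f: float) -> float:
    """Airflow that carries 1 kW of heat across the cold-to-hot aisle temperature rise."""
    delta_t_k = (hot_aisle_f - cold_aisle_f) * 5.0 / 9.0  # a temperature difference, so no 32 offset
    if delta_t_k <= 0:
        raise ValueError("hot aisle must be warmer than cold aisle")
    mass_flow_kg_s = 1000.0 / (AIR_CP_J_PER_KG_K * delta_t_k)  # from Q = m_dot * cp * dT
    return mass_flow_kg_s / AIR_DENSITY_KG_M3 * M3_PER_S_TO_CFM

def fan_power_ratio(speed_ratio: float) -> float:
    """Idealized fan affinity law: fan power scales with the cube of fan speed."""
    return speed_ratio ** 3

print(f"Cold aisle {f_to_c(80):.1f} C, hot aisle {f_to_c(115):.1f} C")
print(f"Airflow per kW: {airflow_cfm_per_kw(80, 115):.0f} CFM")
print(f"Fan power at 80% speed: {fan_power_ratio(0.8):.0%} of full-speed power")
```

Under these assumptions the script reports roughly 90 CFM per kW and about 51% of full fan power at 80% speed. For a fixed heat load, the required airflow is inversely proportional to ΔT, so a wider aisle temperature rise lets the fans slow down, and the cubic law turns a modest speed reduction into a large fan-power saving.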

The thermal management practices above are good for all data centers to adopt; they are the necessary ingredients of a modern data center of any scale.

But they are not sufficient! What the DeepMind blog is telling us is that even with an ideal DCIM and its underlying sensor controls, each data center has behavioral idiosyncrasies that are best understood, managed, and controlled using neural networks. The operating configurations of hyperscale data centers and the interdependencies among their pods (of servers and switches) are too complex for deterministic approaches to achieve the lowest possible PUE. Therefore, machine-learning-based heuristics dictate the front end of a PUE-efficient data center.
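To make the idea concrete, here is a deliberately tiny sketch of the kind of model involved: a feedforward neural network with one hidden layer, trained by stochastic gradient descent to predict PUE from operating conditions. Everything specific here is a hypothetical assumption: the two normalized inputs (IT load and outdoor temperature), the `synthetic_pue` function standing in for historical telemetry, the network size, and the learning rate. Production models use many more sensor inputs and real operating history; this is not Google’s model.

```python
import math
import random

def synthetic_pue(it_load: float, outdoor_temp: float) -> float:
    """Hypothetical ground truth standing in for telemetry; inputs normalized to [0, 1]."""
    return 1.10 + 0.08 * outdoor_temp ** 2 + 0.05 * (1.0 - it_load)

def make_dataset(n: int, rng: random.Random) -> list:
    samples = []
    for _ in range(n):
        x = (rng.random(), rng.random())
        samples.append((x, synthetic_pue(*x)))
    return samples

class TinyMLP:
    """One tanh hidden layer and a linear output, trained with SGD on squared error."""

    def __init__(self, n_in: int, n_hidden: int, rng: random.Random):
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x) -> float:
        h = self._hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def train_step(self, x, y: float, lr: float) -> None:
        h = self._hidden(x)
        # dL/dy_hat for L = 0.5 * (y_hat - y)**2
        err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - y
        for j, hj in enumerate(h):
            # Backpropagate through the output weight and tanh'(z) = 1 - tanh(z)**2,
            # using w2[j] before it is updated.
            grad_z = err * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * err * hj
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * grad_z * xi
            self.b1[j] -= lr * grad_z
        self.b2 -= lr * err

def mse(model: TinyMLP, data) -> float:
    return sum((model.predict(x) - y) ** 2 for x, y in data) / len(data)

rng = random.Random(0)
train = make_dataset(200, rng)
model = TinyMLP(n_in=2, n_hidden=8, rng=rng)
initial_mse = mse(model, train)
for epoch in range(100):
    rng.shuffle(train)
    for x, y in train:
        model.train_step(x, y, lr=0.05)
final_mse = mse(model, train)
print(f"training MSE: {initial_mse:.4f} -> {final_mse:.6f}")
print(f"predicted PUE at 70% load, mild weather: {model.predict((0.7, 0.3)):.3f}")
```

A trained predictor of this kind can then be queried to compare candidate operating points before any change is made to the plant; that step, and the safety constraints a real deployment would need, are not shown here.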

Google is expected to reveal more about how it achieves the 40% savings in the near future. Some of this information can be found in Googler Jim Gao’s paper on Google’s website.

Google is not alone in chasing lower PUE numbers. Many hyperscale data center operators are well into this exercise. Facebook has begun yet another Open Compute project, this time focused on data centers, with PUE at the forefront. Expect Amazon, NEC, Microsoft, and others to reveal more about how their data centers are working toward lower PUE numbers.

We at Electronics Cooling will keep you updated.