Since the introduction of CUDA (Compute Unified Device Architecture, an expansion of the acronym that Nvidia no longer uses) in 2007, hundreds of millions of computers with CUDA-capable GPUs (graphics processing units) have been shipped. If you own a gaming desktop or laptop with an Nvidia GeForce GTX 1080 graphics card, or an Nvidia Shield, you are most likely familiar with the superb graphics and application performance these GPUs enable. GPU computing is now well entrenched, with many applications reporting speedups of 10X to 100X or more.

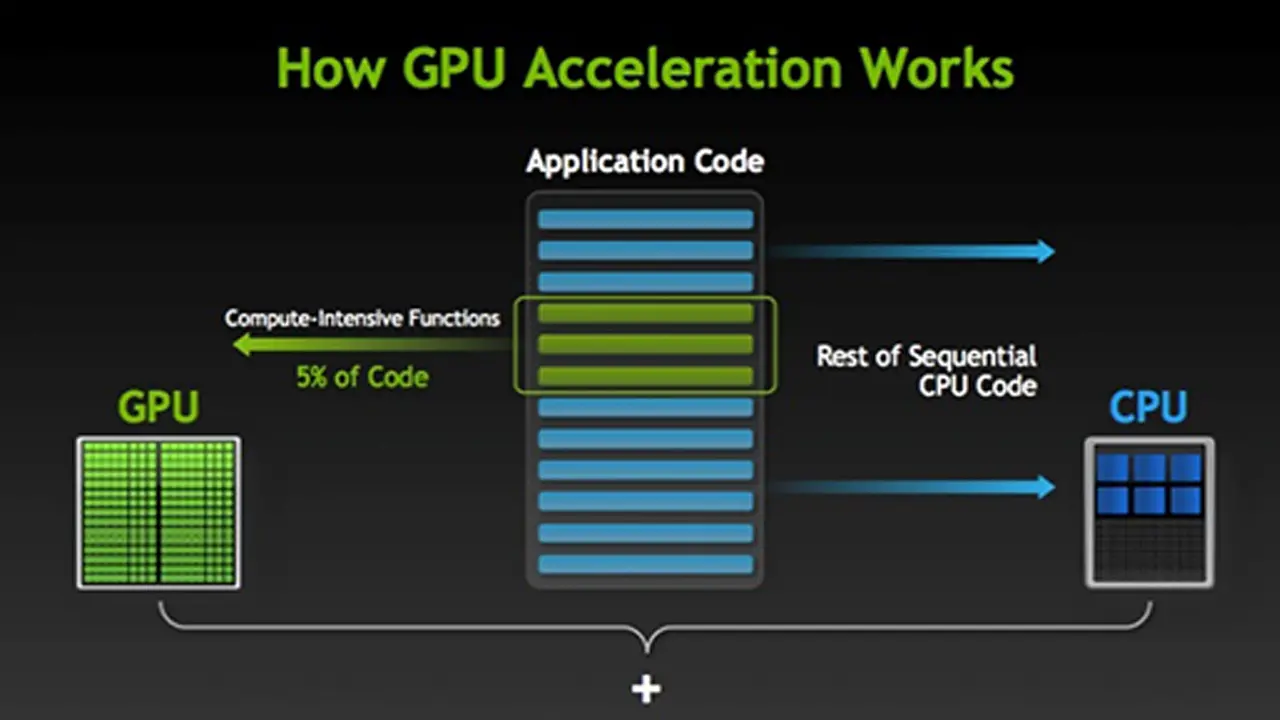

GPU-accelerated computing refers to the use of a GPU together with a CPU to accelerate deep learning, analytics, and engineering applications. It offloads the compute-intensive portions of an application to the GPU, while the remainder of the code still runs on the CPU (see Figure 1 below). From a user’s perspective, applications simply run much faster. Nvidia claims that for a typical data center application with a throughput of 1000 jobs per day, the total cost of acquisition of a GPU-accelerated computing model is 56% of that of a CPU-only model. Combining the GPU compute model with an appropriate cooling technology has the potential to yield the most cost-effective and energy-efficient way to operate data centers. This blog provides some background on this topic for readers of Electronics Cooling. Liquid immersion cooling is not addressed here; it will be covered in a future blog.

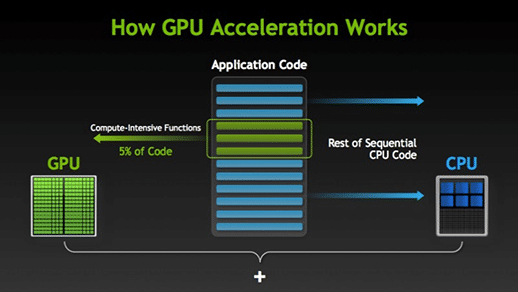

One of the practical issues users face with multi-GPU workstations is the extraordinary power these systems consume and the heat they produce. For example, in an eight-GPU workstation built with GTX 295 cards, the maximum GPU temperature during simulations was 85°C, while GTX 480 GPUs (based on Nvidia’s Fermi architecture) exceeded 90°C; the electrical power drawn by each computer was just below one kilowatt at full load (see Figure 2).

Most GPUs have thermal throttle points set at or near 80°C. Nvidia provides management tools such as nvidia-smi (the System Management Interface program), which outputs continuous health-monitoring data, including GPU temperatures and chassis inlet/outlet temperatures, as shown below:

```
Temperature
    GPU Current Temp : 34 C
    GPU Shutdown Temp : 93 C
    GPU Slowdown Temp : 88 C
```
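If you want to log these values or alert on them, the readout can be checked programmatically. The sketch below is a minimal example, assuming text in the same layout as the sample above (for instance, captured from `nvidia-smi -q -d TEMPERATURE`); real output contains more fields and may vary by driver version. The function names `parse_temperatures` and `thermal_headroom` are hypothetical and not part of any Nvidia tool.

```python
import re

# Sample text in the layout shown above (values copied from that readout).
SAMPLE = """\
Temperature
    GPU Current Temp : 34 C
    GPU Shutdown Temp : 93 C
    GPU Slowdown Temp : 88 C
"""


def parse_temperatures(text: str) -> dict[str, int]:
    """Hypothetical helper: extract 'GPU <label> Temp : NN C' lines into {label: degC}."""
    temps = {}
    for match in re.finditer(r"GPU (\w+) Temp\s*:\s*(\d+)\s*C", text):
        temps[match.group(1).lower()] = int(match.group(2))
    return temps


def thermal_headroom(temps: dict[str, int]) -> dict[str, int]:
    """Hypothetical helper: degrees C left before slowdown (throttling) and shutdown."""
    current = temps["current"]
    return {
        "to_slowdown": temps["slowdown"] - current,
        "to_shutdown": temps["shutdown"] - current,
    }


if __name__ == "__main__":
    temps = parse_temperatures(SAMPLE)
    print(temps)                    # {'current': 34, 'shutdown': 93, 'slowdown': 88}
    print(thermal_headroom(temps))  # {'to_slowdown': 54, 'to_shutdown': 59}
```

With the sample values, the GPU has 54°C of margin before it starts to throttle, which is the number worth watching under sustained load.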

Electronics Cooling presented some examples of cooling GPU-enabled gaming desktops in an earlier blog, Thermal Management – Enabled Products at CES 2017. An example of liquid cooling brackets for GPU cooling is described here.

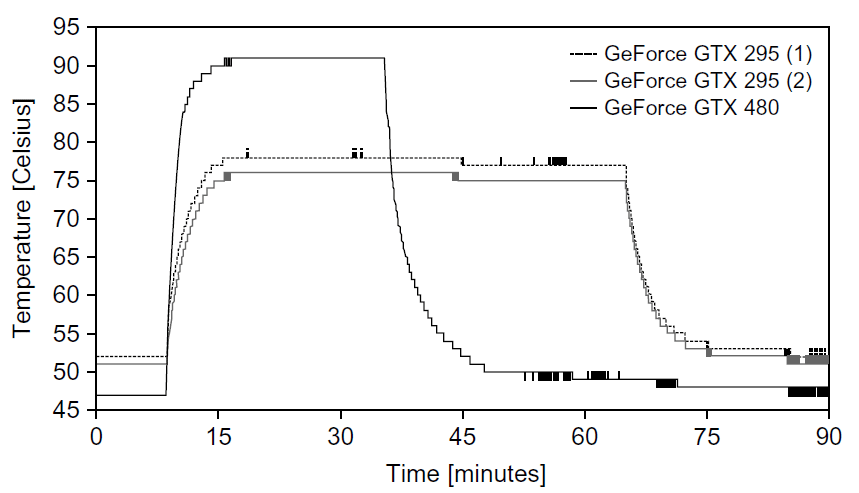

Liquid cooling of GPU-enabled servers is more complex than that of desktops and laptops, because one has to know the end application and deployment environment of the servers. If a GPU-enabled server is to be deployed as a standalone unit, completely self-contained, sealed liquid cooling loops, as shown in Figure 3, are a cost-effective solution. Where budget limitations are the primary driver, or in a transitional data center (a mix of low-density air-cooled and high-wattage liquid-cooled racks), server-level sealed loops appear to be the optimum choice. Another advantage of this cooling methodology is that it requires no change to the existing data center infrastructure.

For liquid-cooled data centers, a thermal management approach that integrates server cooling with a cabinet that has integrated liquid cooling appears to be more energy efficient. Sealed cooling loops that exhaust hot air into a data center cooled by existing CRACs (computer room air conditioning units) and chillers are ideal for the thermal management of cabinets.

Alternatively, sealed cooling loops can be interfaced with an all-liquid-cooled data center, realizing a direct-to-chip liquid cooling system for servers. The goal of cooling data center servers for “free” in any climate, eliminating the need for expensive and energy-demanding mechanical chillers, can be achieved with warm-water direct-to-chip liquid cooling.

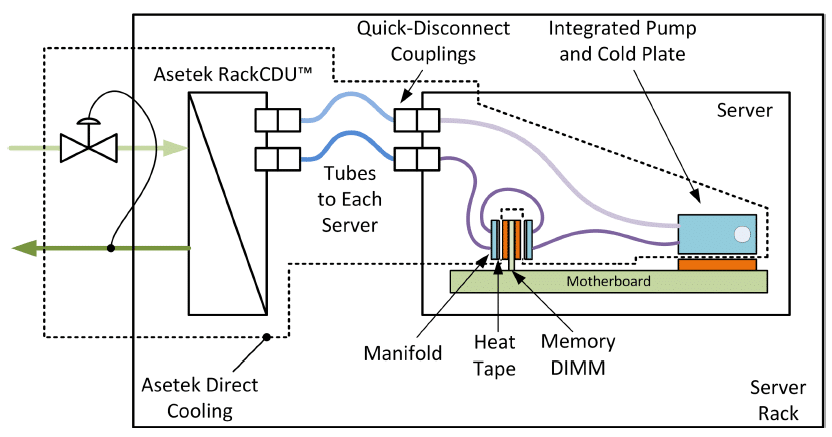

The report Direct Liquid Cooling for Electronic Equipment outlines a warm-water approach to cooling data center equipment in which the commonly used facility chilled-water loops are eliminated and return water temperatures are much higher, while components are still adequately cooled. This saves both capital and operating expenses. Moreover, the hotter return water can serve the facility’s hot-water needs, yielding further savings. The LBL report documents energy savings estimates ranging from 17 percent to 23 percent relative to a base case using chilled-water supply. A schematic of the direct cooling system is shown in Figure 4.

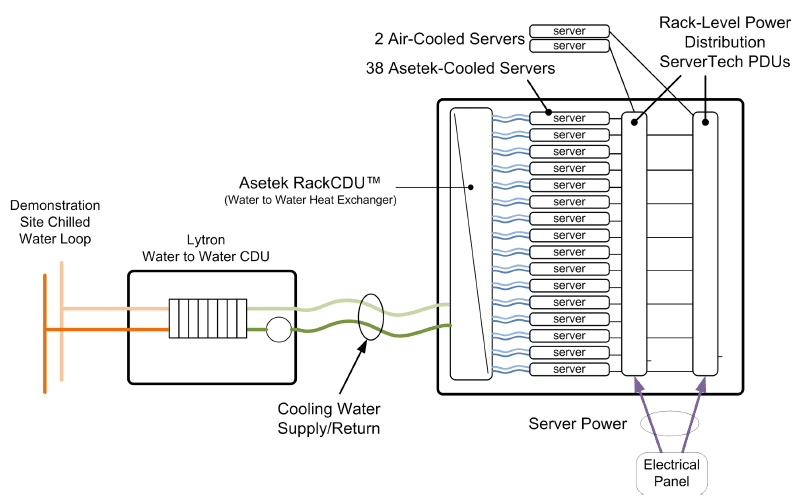

Figure 5 shows a schematic diagram of the demonstration equipment layout: 38 Cisco servers retrofitted with the warm-water liquid cooling technology investigated in the above-mentioned report.

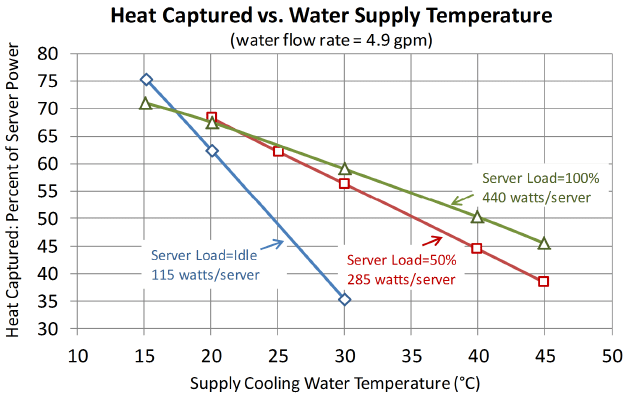

One important finding of the warm-water liquid cooling study is that, for all server power levels, the fraction of heat captured increases as the supply water temperature is lowered. At higher supply water temperatures, the fraction of heat captured differs distinctly among the three power levels (see Figure 6); at lower supply water temperatures, the differences are smaller.
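The fraction of heat captured can be estimated from a simple energy balance on the water loop: the heat carried by the water is Q = m_dot * c_p * (T_return - T_supply), and dividing by the server’s electrical power gives the captured fraction, assuming essentially all electrical power ends up as heat. The sketch below assumes constant water properties and uses a hypothetical operating point; it is not the measurement procedure of the LBL study, and the function names are illustrative only.

```python
# Assumed constant properties of liquid water near room temperature.
WATER_DENSITY_KG_PER_L = 1.0   # kg/L
WATER_CP_J_PER_KG_K = 4180.0   # specific heat, J/(kg*K)


def heat_to_water_w(flow_l_per_min: float, t_supply_c: float, t_return_c: float) -> float:
    """Heat carried off by the water loop: Q = m_dot * cp * (T_return - T_supply), in W."""
    mass_flow_kg_per_s = flow_l_per_min * WATER_DENSITY_KG_PER_L / 60.0
    return mass_flow_kg_per_s * WATER_CP_J_PER_KG_K * (t_return_c - t_supply_c)


def fraction_captured(server_power_w: float, flow_l_per_min: float,
                      t_supply_c: float, t_return_c: float) -> float:
    """Share of server power leaving via the water rather than the room air."""
    return heat_to_water_w(flow_l_per_min, t_supply_c, t_return_c) / server_power_w


if __name__ == "__main__":
    # Hypothetical operating point, not data from the LBL study.
    frac = fraction_captured(server_power_w=400.0, flow_l_per_min=0.5,
                             t_supply_c=25.0, t_return_c=33.0)
    print(f"fraction of heat captured: {frac:.2f}")  # fraction of heat captured: 0.70
```

Whatever the water does not carry away (here roughly 30% of 400 W) is rejected to the room air, which is why the captured fraction matters for facility cooling load.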

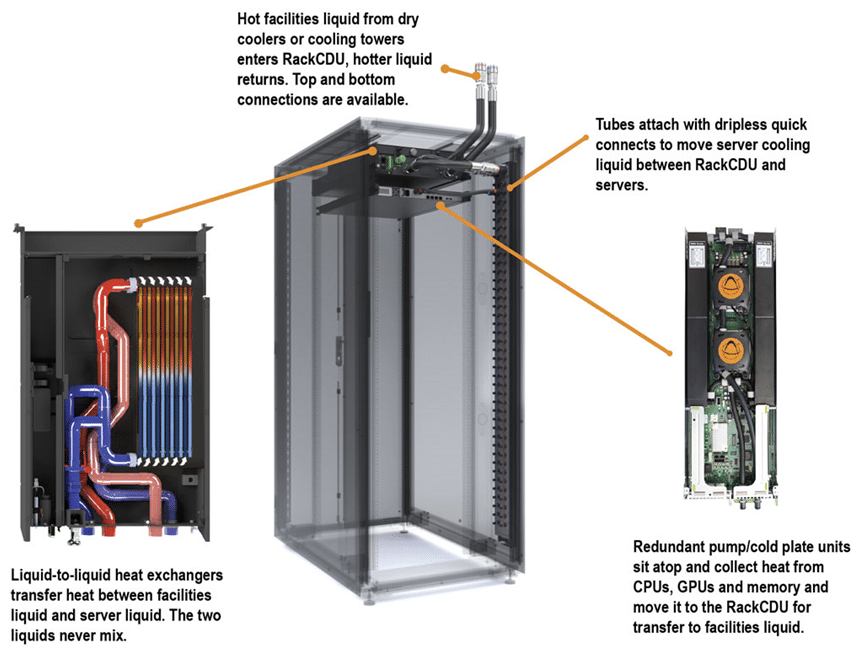

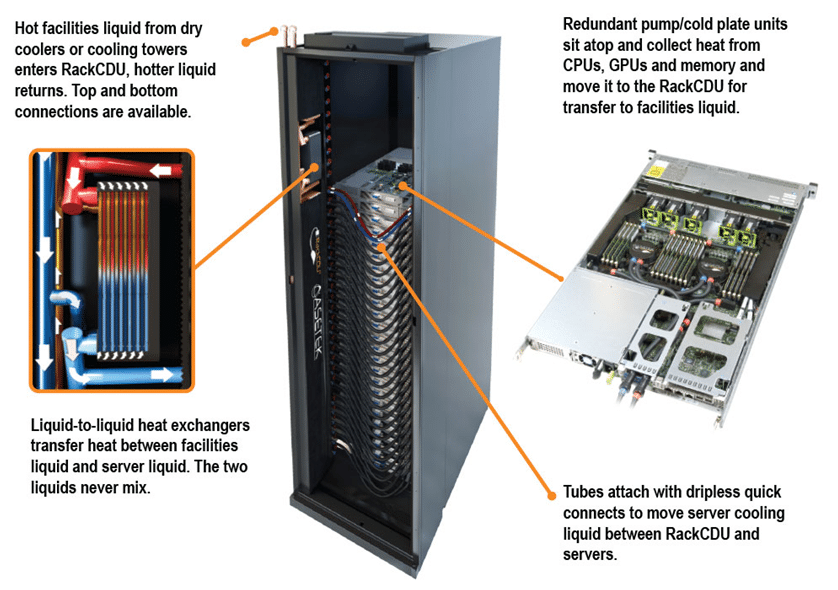

There are multiple choices available today for deploying liquid-cooled data center cabinets. Figures 7 and 8 show one vendor’s Direct-to-Chip (D2C™) cabinet cooling options.

In the first option, shown in Figure 7, the InRackCDU™ D2C is mounted in the server rack, in either a top or bottom configuration within the cabinet.

In the second option, shown in Figure 8, the VerticalRackCDU™ D2C liquid cooling solution consists of a zero-U, rack-level CDU (coolant distribution unit) mounted in a 10.5-inch rack extension with space for three additional PDUs (power distribution units), plus direct-to-chip server coolers that are drop-in replacements for standard server air heat sinks.

When deployed appropriately, warm-water direct-to-chip liquid cooling can capture between 60% and 80% of the server heat, reducing data center cooling costs by over 50% and allowing 2.5X to 5X increases in data center server density. These cooling technologies are ideally suited to data center servers with GPU acceleration.
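The practical effect of the 60% to 80% capture range shows up in the heat that remains for the room air-handling equipment. A minimal sketch, assuming a hypothetical 30 kW GPU rack and a capture fraction applied uniformly to the whole rack load (the helper name `heat_split_kw` is illustrative):

```python
def heat_split_kw(rack_power_kw: float, capture_fraction: float) -> tuple[float, float]:
    """Return (heat removed by the liquid loop, heat left for room air), both in kW."""
    if not 0.0 <= capture_fraction <= 1.0:
        raise ValueError("capture_fraction must be between 0 and 1")
    to_liquid = rack_power_kw * capture_fraction
    return to_liquid, rack_power_kw - to_liquid


if __name__ == "__main__":
    rack_kw = 30.0  # hypothetical rack load, not a figure from the source
    for frac in (0.60, 0.80):  # capture range quoted above
        liquid, air = heat_split_kw(rack_kw, frac)
        print(f"{frac:.0%} captured: {liquid:.1f} kW to liquid, {air:.1f} kW to air")
```

For this assumed rack, the air-side load drops from 30 kW to 12 kW at 60% capture and to 6 kW at 80%, which illustrates how the same room air-handling capacity can support denser racks.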

Your comments are welcome.