A noteworthy trend in data centers is to reduce the power consumption of cooling facilities by raising the temperature of the coolant supplied to the IT equipment, so that the cooling facility needs less work to reach the target temperature. This can lower overall power consumption and improve the effectiveness of the data center. However, a higher inlet water temperature to the servers raises the operating temperature of the CPUs, which can increase the power consumption of the servers and degrade the operational performance of the CPUs.

This blog post presents a critical evaluation of the performance of hybrid (liquid/air) cooled racks under a range of operating temperatures within the ASHRAE W4 envelope for data center liquid cooling. It examines the power consumed for cooling on the facility side, the total power consumption of the rack, and the computational performance of the servers.

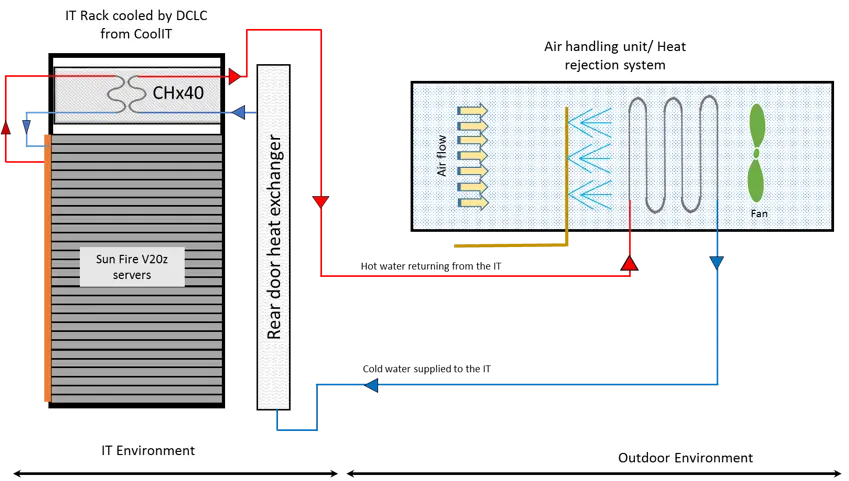

The basis of our data is a study conducted at the University of Leeds, where a full-scale chiller-less direct contact liquid cooled (DCLC) rack was designed and built. The design has two sections, the Information Technology (IT) environment and the outdoor environment:

- The IT environment consists of thirty Sun Fire V20z servers arranged in a standard data center rack. These servers have their CPUs directly liquid cooled and the remaining components are air cooled. The heat generated by the CPUs of all the servers is collected through a coolant loop and directly transferred to an external loop through a liquid/liquid heat exchanger. The heat generated by the air-cooled components of the servers is transferred to the external coolant loop using a passive rear door heat exchanger.

- The outdoor environment involves a chiller-less heat rejection system which is called an Air Handling Unit (AHU), representing the final point at which the heat is transferred to the environment. The AHU was designed to utilize spray evaporation to increase the performance of the heat exchanger. The external loop is responsible for carrying the heat away from the IT environment to be transferred to the outdoor environment through the AHU.

Figure 1 Experimental setup of the hybrid (liquid/air) cooled rack.

Thermal, power, and performance data were collected for various inlet water temperatures to the rack, all within the ASHRAE W4 envelope for liquid cooling, which ranges from 2 to 45°C. The rack was loaded computationally at various CPU stress levels using a Stress Linux script.

The most commonly used metric to describe energy effectiveness and usage in data centers is Power Usage Effectiveness (PUE). This metric was first proposed by Malone and Belady in 2006 and further popularized in 2007 by the Green Grid group. PUE is defined as the ratio between the total power required to operate the data center and the power required to operate the IT equipment, so a lower value is better and 1.0 is the ideal. In this study, the PUE was calculated for each temperature set point.
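The PUE definition above can be sketched in a few lines of Python. This is a minimal illustration, not the study's analysis code: the function name `pue`, the `other_overhead_w` parameter, and the power readings are all hypothetical, and the sketch assumes the AHU is the only cooling load on the facility side.

```python
def pue(it_power_w: float, cooling_power_w: float, other_overhead_w: float = 0.0) -> float:
    """Return Power Usage Effectiveness: total facility power / IT power."""
    if it_power_w <= 0:
        raise ValueError("IT power must be positive")
    total_power_w = it_power_w + cooling_power_w + other_overhead_w
    return total_power_w / it_power_w


# Hypothetical readings (NOT measurements from the study), one per set point:
# inlet temperature in °C -> (rack power in W, AHU power in W)
readings = {
    20: (4000.0, 1000.0),
    30: (4000.0, 600.0),
}

# One PUE value per temperature set point.
pue_by_set_point = {
    temp_c: pue(rack_w, ahu_w) for temp_c, (rack_w, ahu_w) in readings.items()
}
```

A value closer to 1.0 means that a smaller share of the total power goes to anything other than the IT equipment.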

The most prominent metric used to assess the computing efficiency of a server is FLOPS/W. This metric, which is used to rank energy-efficient HPC systems in the Green500 list, is also called the Performance/W metric. It is the ratio between the server's floating point operations per second and its power consumption, so the more FLOPS/W a system delivers, the more efficient it is. The performance of the CPUs was measured using SPECpower_ssj2008.
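As a rough sketch of how this ratio and its change between two set points could be computed, consider the following Python snippet. The function names and the sample values are hypothetical and are not taken from the study.

```python
def flops_per_watt(flops: float, power_w: float) -> float:
    """Return performance per watt: sustained FLOPS divided by power draw."""
    if power_w <= 0:
        raise ValueError("power must be positive")
    return flops / power_w


def percent_change(before: float, after: float) -> float:
    """Return the relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# Hypothetical example (NOT measured data): the same sustained throughput
# delivered at two different rack power levels.
cool = flops_per_watt(1.0e12, 5000.0)
warm = flops_per_watt(1.0e12, 5200.0)
efficiency_change_pct = percent_change(cool, warm)  # negative: efficiency fell
```

In this sketch the throughput is held constant, so the drop in FLOPS/W comes entirely from the higher power draw. Any loss of throughput at higher temperature would reduce the ratio further.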

The CPU temperature is strongly linked to the inlet coolant temperature to the rack, and the strength of this link depends on the computational load level. Some servers shut down at the high workload level when the inlet coolant temperature to the rack was high.

Increasing the inlet coolant temperature to the rack substantially reduces the power consumption of the AHU (the cooling side of the PUE equation). On the other hand, the power consumption of the rack (the IT side of the PUE equation) increases because of higher current losses and higher fan speeds inside the servers at higher CPU temperatures. Because the AHU power falls while the IT power rises, the PUE improves as the rack inlet temperature increases. At idle, when the servers are not running any computational process, the rack power consumption rose only marginally, by less than 0.5%, when the rack inlet temperature was increased from 20 to 38°C. The same increase in inlet water temperature produced a large reduction in AHU power consumption, which improved the PUE by about 25%. Under computational load, the rack power consumption rose by a more pronounced 4% when the inlet temperature was increased from 24°C to 42°C, while the PUE decreased considerably (by about 18%) because the power consumed on the cooling side fell sharply.
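The interplay between the two sides of the PUE equation can be made concrete with a small Python sketch. The wattages below are hypothetical and chosen only to show the mechanism (they are not the measured values behind the percentages above), and the helper names `pue`, `percent_change`, and `compare_set_points` are illustrative.

```python
def pue(it_w: float, cooling_w: float) -> float:
    """PUE with cooling as the only non-IT load (an assumption of this sketch)."""
    return (it_w + cooling_w) / it_w


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


def compare_set_points(it_before, cool_before, it_after, cool_after):
    """Compare a cool and a warm set point on IT power, total power, and PUE."""
    return {
        "it_power_change_pct": percent_change(it_before, it_after),
        "total_power_change_pct": percent_change(
            it_before + cool_before, it_after + cool_after
        ),
        "pue_change_pct": percent_change(
            pue(it_before, cool_before), pue(it_after, cool_after)
        ),
    }


# Hypothetical wattages (NOT from the study): IT power rises 4%,
# while the AHU power drops sharply at the warmer set point.
result = compare_set_points(
    it_before=5000.0, cool_before=1500.0,
    it_after=5200.0, cool_after=500.0,
)

if __name__ == "__main__":
    for name, value in result.items():
        print(f"{name}: {value:+.1f}%")
```

With these illustrative inputs the PUE falls by a larger percentage than the total power does, because the higher IT power also enlarges the denominator of the ratio. This is one reason a PUE improvement on its own can overstate the actual energy saving.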

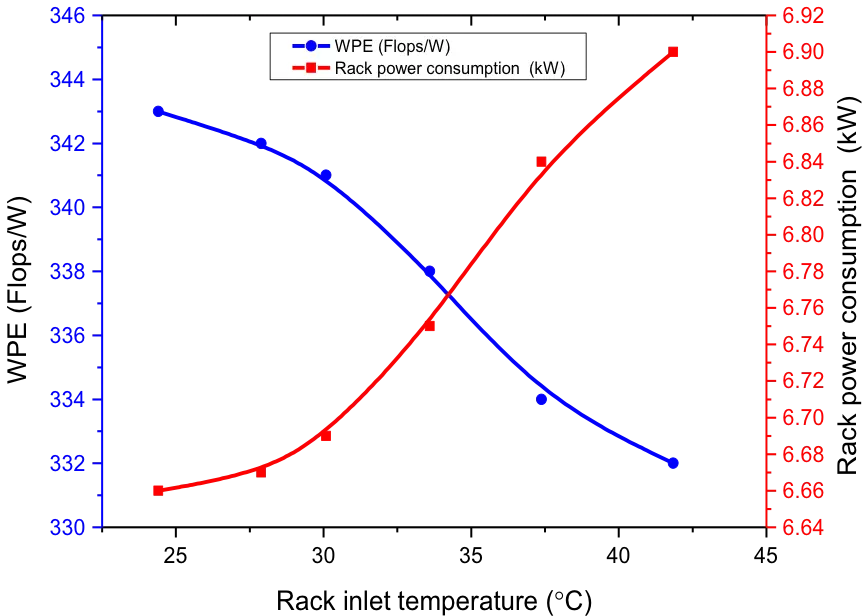

Depending on the IT load level, the computational efficiency of the servers was found to be adversely affected by increasing coolant inlet temperature. For example, at 75% utilization, increasing the water inlet temperature from 22°C to 44°C resulted in a 4% reduction in the FLOPS/W of the rack, while at 100% utilization, the FLOPS/W fell by 3% when the rack inlet temperature was increased from 24°C to 42°C.

Supplying high temperatures to the IT equipment should be analyzed critically: the energy saved by doing less work on cooling should be weighed against the drawbacks of higher rack power consumption, lower computational efficiency, and detrimental effects on server operation such as shutdowns. The work also highlighted an important point: high effectiveness of the data center does not necessarily mean high efficiency, because the PUE can improve when less power is supplied to the cooling system even while the servers’ performance deteriorates.

Figure 2 Computation efficiency (Work Load Power Effectiveness (WPE) in FLOPS/W) and rack power consumption as a function of the rack inlet temperature at 100% load.