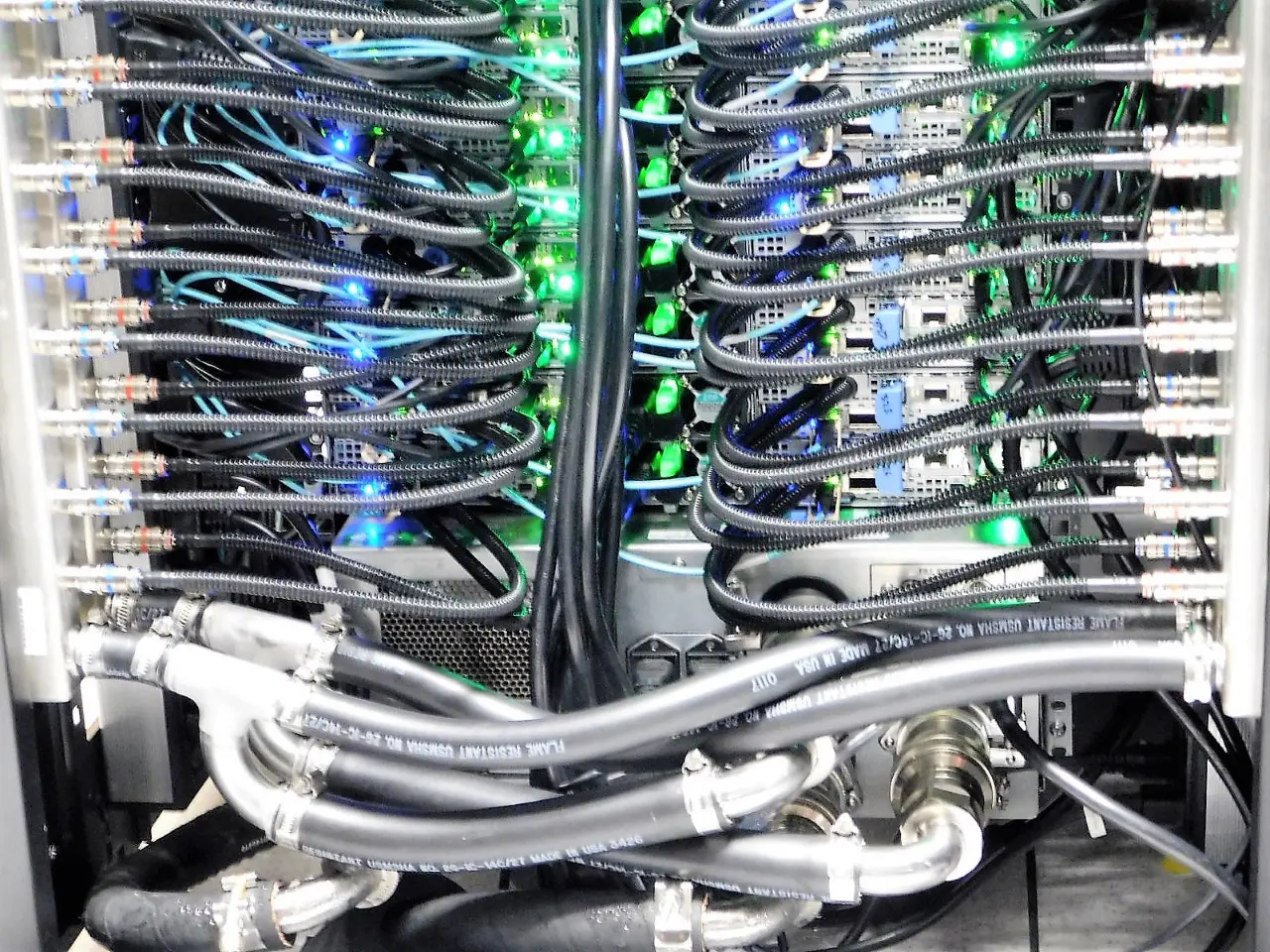

Figure 1: Liquid-cooled 68-node rack at Dell EMC HPC. (Source: CoolIT Systems)

Hyperion Research, a company that provides research, analysis, and recommendations for technologies, applications, and markets in high-performance computing and emerging technology areas, forecasts that the worldwide market for high-performance computing (HPC) server systems will grow at a healthy 9.8% CAGR to reach $19.6 billion in 2022. Accompanying this growth, in the company’s view, is a strong trend toward the use of liquid cooling to manage the fast-rising heat levels generated by increasingly large and more densely packed HPC servers.

HPC data centers have often had to rely on liquid cooling systems borrowed from other industries, and this mismatch may have exacerbated “data center hydrophobia,” the fear of leaks damaging expensive electronic equipment. Fortunately, current growth in spending for HPC liquid cooling systems has begun to attract purpose-built solutions designed to handle the extreme cooling demands of HPC systems more effectively.

This article briefly reviews the rise of liquid cooling in the global HPC market, the technical challenges associated with this rise, and how liquid cooling vendors are addressing these challenges.

The Liquid Cooling Trend in the Global HPC Market

Since the start of the supercomputer era in the 1960s, the most powerful HPC systems have needed liquid cooling to keep their many tightly packed ICs from overheating and damaging expensive electronic components. (Cooling with water or other liquids can be 3-4 times more efficient than air cooling.) In recent years, average processor counts and densities in the HPC market have skyrocketed. Between April 2000 and June 2018, the average peak performance of systems on the Top500 list of the world’s most powerful supercomputers jumped from 154GF (giga, or one billion, floating point operations per second) to 2.4PF (peta, or one quadrillion, floating point operations per second), a factor of 15,844. Today, HPC systems pack significantly more heat-generating components into much tighter confines. In addition, cooling can account for up to half of the energy costs for an HPC system.
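As a quick check on the growth figure, the ratio can be recomputed from the two rounded values quoted above. This is a minimal sketch; the variable names are illustrative only. Note that the rounded inputs give a factor of roughly 15,600, slightly below the quoted 15,844, which presumably reflects unrounded list averages.

```python
# Peak-performance figures as quoted in the text (both are rounded values).
GIGA = 1e9    # one billion
PETA = 1e15   # one quadrillion

avg_peak_2000 = 154 * GIGA   # 154 GF, April 2000
avg_peak_2018 = 2.4 * PETA   # 2.4 PF, June 2018

# Ratio of the two averages, i.e. the growth factor over the period.
growth_factor = avg_peak_2018 / avg_peak_2000
print(f"Growth factor from rounded figures: {growth_factor:,.0f}")
```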

As a result, even many midrange HPC systems and data centers have been making the move to liquid cooling. In a recent Hyperion Research worldwide study, entitled “Power and Cooling Practices and Planning at HPC Sites,” nearly all of the 100-plus surveyed sites employing air cooling said they were exploring liquid cooling alternatives to meet their future needs. Today’s liquid options include immersion cooling, cold plate cooling, indoor liquid cooling, and direct-to-chip cooling, using water at varying temperatures or more esoteric liquids.

Liquid cooling options deployed in HPC centers have often been designed for other industrial uses. Even with these make-do solutions, coolant leaks at HPC data centers are uncommon, but when they occur they can cause extensive damage to computer systems that may cost millions, sometimes tens of millions, of dollars each. Cooling efficiency can also suffer when components cannot meet the flow rate, temperature, pressure, or chemical compatibility needs of larger systems. But the growth in spending for liquid-cooled HPC systems and data centers has motivated some pioneering vendors to design products purpose-built to handle the demanding requirements of HPC liquid cooling deployments in on-premises and cloud environments.

Meeting Evolving Cooling Needs

As liquid cooling steadily advances in use and sophistication, system suppliers are increasingly focusing on purpose-built liquid cooling products and components for the worldwide HPC market.

One of the most commonly used critical components in data centers today is the metal quick disconnect (QD). As key fluid management components, QDs affect flow rates, allow hot swapping of equipment in liquid-cooled racks if they have integrated stop-flow capabilities, and serve as a point of reliability or vulnerability in a comprehensive liquid cooling system. If QDs work as designed, thermal engineers and data center operators do not typically give them much thought. If they fail, they suddenly become the most scrutinized components in the system.

QDs are now being expressly designed to handle the demanding flow rates and related pressures associated with liquid cooling deployments in HPC and other large data centers. Flow rates are typically low at the server (e.g., 0.5 liters/minute) but considerably higher at the coolant distribution unit (up to 70 liters/minute), and flow rates that exceed the connector’s maximum capacity can produce seal failure or accelerate the erosion of parts. As a result, choosing the best QD for the application is a crucial step for reliability and performance.
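The selection step described above amounts to comparing the expected flow at each point in the loop with the connector's rated maximum. The sketch below illustrates that check under stated assumptions: the `QuickDisconnect` class, the rated values, and the 20% safety margin are all hypothetical and are not taken from any vendor datasheet. Only the 0.5 and 70 liters/minute flow figures come from the text.

```python
from dataclasses import dataclass


@dataclass
class QuickDisconnect:
    name: str
    max_flow_lpm: float  # hypothetical rated maximum flow, liters/minute


def flow_within_rating(qd: QuickDisconnect, expected_flow_lpm: float,
                       margin: float = 0.2) -> bool:
    """Return True if the expected flow stays at least `margin` (a fraction)
    below the connector's rated maximum. The margin is an assumed policy."""
    return expected_flow_lpm <= qd.max_flow_lpm * (1.0 - margin)


# Hypothetical connectors; the ratings are made up for illustration.
server_qd = QuickDisconnect("server-side QD", max_flow_lpm=2.0)
cdu_qd = QuickDisconnect("CDU-side QD", max_flow_lpm=60.0)

# Flow rates quoted in the text: about 0.5 L/min at the server,
# up to 70 L/min at the coolant distribution unit.
print(flow_within_rating(server_qd, 0.5))   # within rating
print(flow_within_rating(cdu_qd, 70.0))     # exceeds rating; pick a larger QD
```

In practice the rated flow, pressure, and temperature limits would come from the connector's datasheet, and the acceptable margin is a design decision for the thermal engineer.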

For a growing number of data centers, new liquid cooling-specific QDs serve as alternatives to bulkier, harder-to-handle ball-and-sleeve hydraulic connectors. These newer products have been optimized for the temperature, pressure, and coolant needs of HPC systems and are made of durable metal and engineered polymers designed for dripless operation. Companies like CPC (Colder Products Company) in St. Paul, Minn., work with HPC manufacturers and data centers to deliver QDs specifically for their applications. Cray, for example, is among the HPC manufacturers that use CPC QDs, one version of which optimizes flow rates in a compact format suited to tight spaces. To ease installation, these liquid cooling-specific QDs feature swivel joints, elbows, and low connection force. An integrated thumb latch allows one-handed installation, simplifying use even further. These seemingly small features take on added significance when you multiply installation, operational, and maintenance efforts across hundreds of racks and thousands of servers.

New to the liquid cooling market are thermoplastic QDs built specifically for liquid cooling use. The reported advantages of these couplers are their lightweight design, chemical compatibility with most conventional and specialty fluids, and significant creep and corrosion resistance. Other reported attributes of thermoplastic QDs made of polyphenylsulphone (PPSU) include:

- Flame retardant in accordance with UL 94 V-0 rating

- Low water absorption

- Thermal insulator: No external condensation; not hot to the touch

- Leak tested to 10,000 cycles

- Broad operating temperature range: 0° to 240°F (-17° to 115°C)
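The Fahrenheit and Celsius limits in the last item can be cross-checked with the standard conversion formula. This is a small sketch with an illustrative function name; it shows that the Celsius figures quoted above appear to be truncated rather than rounded.

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


# Operating range quoted in the list above, in degrees Fahrenheit.
low_f, high_f = 0.0, 240.0

low_c = fahrenheit_to_celsius(low_f)    # about -17.8 degrees C
high_c = fahrenheit_to_celsius(high_f)  # about 115.6 degrees C
print(f"{low_f:.0f}F = {low_c:.1f}C, {high_f:.0f}F = {high_c:.1f}C")
```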

Future Outlook

Hyperion Research sees spending for liquid cooling deployments as an escalating, enduring trend as average HPC system sizes and densities continue to increase. This spending growth will motivate more vendors to design products that specifically meet the demanding liquid cooling needs of advanced HPC systems and large data centers.