As the title indicates, since the middle of the last decade there have been very pessimistic projections of the growth in total annual energy use by data centers in the U.S. One of them suggested that by 2030, energy consumption by the U.S. IT sector would be roughly 60% of that of the entire U.S. industrial sector. [1,2,3]

What was the origin of this dire prediction? How is it holding up in light of more recent data?

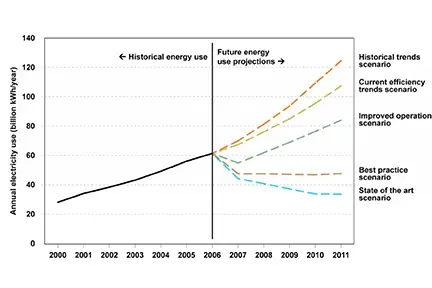

The first early warning of this risk came from a very comprehensive U.S. Environmental Protection Agency (EPA) energy report, released in 2007.[2] It chronicled the very rapid increase in annual energy use by data centers in the U.S. In fact, the reported annual rate of increase was 15%. At a steady compound rate, this translates into a doubling of energy consumption in roughly 5 years and a tripling in roughly 8 years. In addition to documenting energy use from 2000 to 2006, the report also made predictions about what the future would hold, subject to different technical assumptions. Figure 1 provides a graph from the report.

Figure 1. Comparison of Projected Electricity Use, All Scenarios, 2007 to 2011. [Fig. ES-1, Ref.2]
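To put a 15% annual growth rate in perspective, the following minimal Python sketch computes how long a quantity takes to double or triple. It assumes steady compound growth at a fixed rate, which is a simplification, since actual year-to-year rates vary. The function name `years_to_multiply` is purely illustrative and does not come from the EPA report.

```python
import math


def years_to_multiply(factor: float, annual_rate: float) -> float:
    """Years needed for a quantity to grow by `factor` at a steady
    compound annual growth rate (e.g. 0.15 for 15% per year)."""
    if factor <= 0 or annual_rate <= 0:
        raise ValueError("factor and annual_rate must be positive")
    return math.log(factor) / math.log(1.0 + annual_rate)


rate = 0.15  # annual rate of increase cited in the text
doubling = years_to_multiply(2.0, rate)
tripling = years_to_multiply(3.0, rate)
print(f"doubling: {doubling:.1f} years, tripling: {tripling:.1f} years")
```

Under this assumption, the doubling time comes out close to five years and the tripling time close to eight.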

The predictions reflect a very nuanced analysis of what the future might hold. The trajectory of highest energy growth assumes that energy consumption would continue to grow at the very high rate seen in the recent past. The implication is that there would be no improvement in efficiency in any aspect of running a data center. To accommodate the expected continued growth in data centers, companies would simply deploy more of the same kinds of servers and infrastructure they had been using. Of course, on a moment’s reflection, one sees that this would be an absurd assumption, but it was provided nonetheless, since it represents a worst-case situation and therefore an upper bound on energy consumption. Then, as the possibility of efficiency improvements in various technical areas was accounted for, the trend line became less steep and, in the most optimistic case, actually showed a decreasing trend.

Not too surprisingly, it was the worst-case scenario that was picked up by the media and by various analysts.[4] This became the trend line most commonly used for forecasting energy trends.

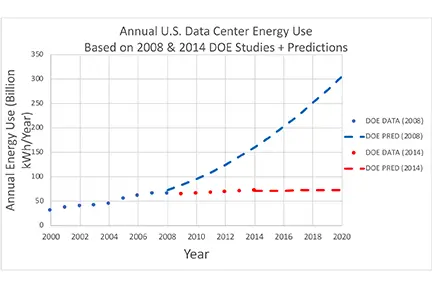

To analyze energy use since 2006, we make use of data extracted from a recent monumental study, conducted by the U.S. Department of Energy, that looked at power consumption while considering all aspects of data center IT configurations, equipment, and cooling practices over the full range of data center sizes.[5] This report provided actual energy consumption values for data centers up to the year 2014. It also made predictions out to 2020 for various scenarios, representing a spectrum of assumptions regarding increases in energy efficiency, both in the servers themselves and in all aspects of the infrastructure. It was the first report to quantify data center energy use over the entire U.S.

Figure 2. Comparison of Actual and Projected Energy Use in U.S. Data Centers, 2000 to 2020. [Based on data in Refs. 2, 5]

The graph in Figure 2 plots actual energy usage data from 2000 to 2014 extracted from the report. This shows a much more benign behavior than the worst-case projection plotted in Figure 1, which is represented by the dashed blue line in this graph. The projection of energy use from 2014 to 2020 is represented by the dashed red line that appears as a horizontal continuation of the recent data.

This report shows that, indeed, the many doomsayers in the media who took a worst-case scenario and institutionalized it were completely wrong. Rather than a steep, exponential increase in energy use, the data show a nearly linear trend with a slight upward slope, increasing by 1.6% per year.

It makes one wonder how so many people could have been so wrong. Fortunately, the 2014 DOE report provides an exhaustive analysis of the many factors affecting energy consumption by data centers. We’ll look at those shortly.

One benefit of the projected exponential growth in energy consumption was that it created a lot of concern in many sectors of our society. Naturally enough, engineers shared this concern as well.

Faced with this alarming trend, engineers began to worry not only about the power consumed by the computing and networking electronics, but also about the power used to operate the fans, air conditioners, and other cooling equipment. The term PUE (Power Usage Effectiveness), a measure of data center cooling efficiency, was introduced in 2006. It is defined as:

PUE = Total Facility Energy Consumed / Energy Powering the IT Equipment

For example, PUE = 1 if all the energy powers the IT equipment, and PUE = 2 if the amount of energy devoted to cooling equals the energy powering the IT equipment.
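The definition and the two examples above can be expressed in a few lines of Python. This is only an illustrative sketch: the function name `pue` and the energy figures in kWh are hypothetical values chosen to reproduce the two cases, not measurements from any real facility.

```python
def pue(total_facility_energy_kwh: float, it_energy_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by the
    energy delivered to the IT equipment over the same period."""
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    if total_facility_energy_kwh < it_energy_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_energy_kwh / it_energy_kwh


it_energy = 1000.0  # hypothetical IT load energy, kWh

# Case 1: all of the facility energy powers the IT equipment.
pue_ideal = pue(it_energy, it_energy)

# Case 2: cooling consumes as much energy as the IT equipment itself.
cooling_energy = 1000.0  # hypothetical, kWh
pue_typical = pue(it_energy + cooling_energy, it_energy)

print(pue_ideal, pue_typical)
```

The two calls return 1.0 and 2.0 respectively, matching the limiting cases described in the text.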

PUE analyses immediately began to be broadly applied to data centers. The ratings were, in general, initially closer to 2 than 1. Quantifying the problem prompted a great deal of analysis, and subsequent action, on how to reduce energy consumption.

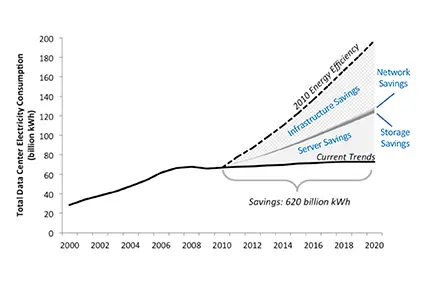

Figure 3, from the 2014 report, shows past and projected total U.S. data center energy use from 2000 to 2020, represented by the solid black line. Two inflection points are of note. First, around 2008, the energy consumption trend line bent so that the 15%/year growth rate fell to only 4%/year. This was mainly due to a smaller installed base of servers resulting from the economic recession, together with efficiency improvements driven by pressure to reduce energy and equipment costs. Second, from 2010 onward, even as data center capacity began to increase again, the energy growth rate was less than 2% per year. The dotted line ascending from the actual trend line in 2010 represents the predicted growth in energy consumption if there were no efficiency improvements in IT hardware and infrastructure after 2010.

Figure 3. Data Center Electricity Consumption in Current Trends and 2010 Energy Efficiency Scenarios [Ref. 5, Fig. 24.]

This graph also quantifies the effect of improvements in energy efficiency in four major categories of data center equipment: infrastructure, servers, network, and storage. They are discussed below, in order of significance:

- Infrastructure – this deals mainly with cooling, power conversion, and backup power, with cooling accounting for most of the energy usage. Readers of this publication will know of many ways in which the process of cooling IT equipment can be made more energy efficient. Better management of the incoming cool and outgoing warm air streams alone can account for significant savings. Also, introducing liquid cooling in as many sections of the heat flow path as is practical, from the computing hardware to the outdoor environment, can greatly enhance efficiency.

- Servers – energy savings are provided automatically by Moore’s Law scaling, as the energy required to execute a specific computational workload decreases with time. There are also advances in server architectures that enable virtualization, which facilitates the routing of computational work to a specified server so that it operates at a high utilization rate. This not only reduces the total number of servers needed, it also improves the power efficiency. The remaining servers that operate at a low utilization rate are designed to significantly reduce their power consumption during those periods.

- Network – there are two factors responsible for reducing the energy required to transmit a given quantity of data over IT networks: 1) Increasing bandwidth. For example, in a given generation of silicon, increasing bandwidth from 10 to 40 Gb/s only increases the power by a factor of 1.7. 2) At a given bandwidth, for example 40 Gb/s, Moore’s Law scaling provides roughly a 13% power reduction per year, which, over 6 years, reduces the power by a little more than half.

- Storage – the biggest contributor to the reduction in energy consumption by storage devices is the fact that the capacity of an individual device has been increasing at a rapid rate. This allows fewer drives to meet a given storage requirement than in the past.
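The two network factors in the list above can be checked with some simple arithmetic. The Python sketch below assumes that energy per bit scales as power divided by bandwidth, and that the 13% annual reduction compounds steadily from year to year. Both are simplifying assumptions, and the function names are illustrative only.

```python
def energy_per_bit_ratio(bandwidth_ratio: float, power_ratio: float) -> float:
    """Relative energy per transmitted bit after an upgrade, assuming
    energy per bit is proportional to power / bandwidth."""
    if bandwidth_ratio <= 0 or power_ratio <= 0:
        raise ValueError("ratios must be positive")
    return power_ratio / bandwidth_ratio


def remaining_power_fraction(annual_reduction: float, years: int) -> float:
    """Fraction of the original power remaining after `years` of a
    steady, compounding annual reduction (e.g. 0.13 for 13% per year)."""
    if not 0.0 <= annual_reduction < 1.0 or years < 0:
        raise ValueError("invalid reduction rate or number of years")
    return (1.0 - annual_reduction) ** years


# Factor 1: 10 -> 40 Gb/s (4x bandwidth) at 1.7x the power.
ratio = energy_per_bit_ratio(40 / 10, 1.7)

# Factor 2: roughly 13% power reduction per year over 6 years.
remaining = remaining_power_fraction(0.13, 6)

print(f"energy per bit after upgrade: {ratio:.1%} of original")
print(f"power remaining after 6 years: {remaining:.1%} of original")
```

Under these assumptions, the bandwidth upgrade cuts the energy per bit to well under half of its original value, and six years of compounding leaves somewhat less than half of the original power at a fixed bandwidth.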

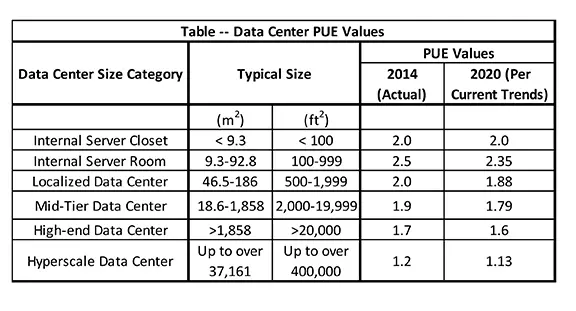

Another dynamic has helped reduce energy use by data centers since 2010, namely the trend in which the larger data centers continue to account for a growing share of the total number of servers in the U.S. The accompanying table divides the data centers in the U.S. into 6 categories, ranked by the floor area of each center.[6]

They range from the classification of an “Internal Server Closet,” whose size is under 9.3 m², to a Hyperscale Data Center, whose size ranges from 1,900 to over 37,000 m². The Internal Server Closet would be found at a small company to satisfy its internal IT needs. The hyperscale data centers would include those of the cloud computing and internet giants such as Google, Facebook, Amazon, and Microsoft.

The smaller the data center, the higher its PUE tends to be. It is noteworthy that only the hyperscale data centers have PUEs near 1. The high-end data centers have a value of 1.7. All of the smaller ones have values in the range of 1.9 to 2.5. Furthermore, one expects only a small improvement in their PUEs by 2020.

The hyperscale data centers have been designed from the ground up to have maximum cooling and power conversion efficiency and often incorporate selective liquid cooling.

The smaller data centers lack the economies of scale of their hyperscale cousins. They typically are entirely air cooled and often have suboptimal air flow conditions in which there is poor separation between hot and cold air streams, leading to hot spots. Lacking the resources to better manage air flow, the common practice is to deal with the hot spots by overcooling the data center. With the emphasis on avoiding downtime rather than minimizing the power consumption devoted to cooling, these data centers tend to have higher PUEs.

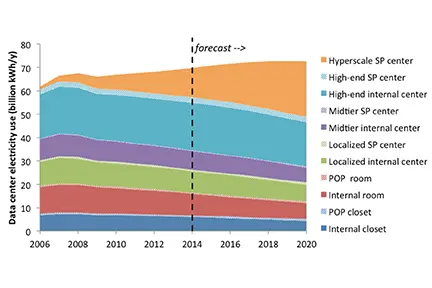

This macrotrend involving the migration of data center energy consumption from the smaller data centers to the large ones is captured by Figure 4. Of course, from an energy utilization perspective, this is a positive trend since the largest data centers get considerably more computing work per watt than do the smaller ones.

In spite of this trend, the report estimates that in 2020, 40% of the total energy will be consumed by data centers of mid-tier size and smaller, with their lackluster PUEs of roughly 2. Clearly, improving the energy efficiency of these small data centers represents an opportunity for the thermal management community. It should be mentioned that, in the future, an increasing number of small data centers will migrate to the cloud. As the preceding analysis indicates, if implemented at a cloud computing provider, their computational workload should be executed in a more energy efficient way than was possible in the original data center.
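A rough sense of what such a migration could save follows from the PUE definition alone. The Python sketch below assumes the IT energy of the workload is unchanged by the move, which is conservative because it ignores any gain from higher server utilization. The hyperscale PUE of 1.2 is an assumed value standing in for "near 1", and the 100,000 kWh workload is hypothetical.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT energy load and a PUE."""
    if it_energy_kwh < 0 or pue < 1.0:
        raise ValueError("IT energy must be non-negative and PUE >= 1")
    return it_energy_kwh * pue


def migration_savings_fraction(pue_before: float, pue_after: float) -> float:
    """Fractional reduction in facility energy when the same IT load
    moves from a site with `pue_before` to one with `pue_after`."""
    if pue_before < 1.0 or pue_after < 1.0:
        raise ValueError("PUE values must be >= 1")
    return 1.0 - pue_after / pue_before


small_site_pue = 2.0   # typical of the smaller data centers in the text
hyperscale_pue = 1.2   # assumed value for illustration only
it_load_kwh = 100_000  # hypothetical annual IT energy of the workload

before = facility_energy_kwh(it_load_kwh, small_site_pue)
after = facility_energy_kwh(it_load_kwh, hyperscale_pue)
savings = migration_savings_fraction(small_site_pue, hyperscale_pue)

print(f"before: {before:,.0f} kWh, after: {after:,.0f} kWh, saved: {savings:.0%}")
```

With these assumed figures, the same workload draws about 40% less facility energy after migration. The actual benefit depends on the PUE values of the specific sites involved.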

Figure 4. Data Center Annual Electricity Consumption Differentiated by Size Category [Ref. 5, Fig. 22]

CONCLUSIONS

This analysis provides some comfort in that we can expect that, in the near future, total energy consumption by U.S. data centers should be very manageable. However, we can expect that as the data center sector in the U.S. scales up to accommodate the computational and data management requirements of the Internet of Things, we will once again be challenged by the sheer scale of this enterprise and its immense energy needs.

REFERENCES

1. Report by the U.S. Energy Information Administration, Report #: DOE/EIA-0383(2008), June 2008.

2. Report to Congress on Server and Data Center Energy Efficiency Public Law 109-431 U.S. Environmental Protection Agency ENERGY STAR Program, August 2, 2007.

3. Madhusudan Iyengar and Roger Schmidt, “Energy Consumption of Information Technology Data Centers,” ElectronicsCooling, Vol. 16, No. 4, December, 2010.

4. Patrick Thibodeau, “Data centers are the new polluters,” Computerworld, August 26, 2014.

5. Arman Shehabi, et al., “United States Data Center Energy Usage Report,” Lawrence Berkeley National Laboratory, Document Number LBNL-1005775.

6. Bruce Guenin, “Data Center Power Trends – Where Do We Go from Here?,” Editorial, ElectronicsCooling, June, 2018.