Nomenclature

| Term | Definition |
| --- | --- |
| A1, A2, A3, A4 | ASHRAE allowable thermal envelopes as defined in Thermal Guidelines for Data Processing Environments that represent where IT manufacturers test equipment to ensure functionality |
| ASHRAE | American Society of Heating, Refrigerating and Air-Conditioning Engineers |
| CAGR | Compound Annual Growth Rate |
| CITE | ASHRAE Compliance for IT Equipment |
| Datacom | Data processing and communication facilities, that include rooms or closets used for communication, computers, or electronic equipment. |
| DCIM | Data Center Infrastructure Management |
| ELC | Electrical Loss Component per ANSI/ASHRAE Standard 90.4-2016. The design ELC is the combined losses of three segments of the electrical chain: incoming electrical service segment, UPS segment, and ITE distribution segment. |
| ESD | Electro-static discharge |
| HVAC | Heating, ventilation and air conditioning |
| IT | Information Technology |
| MLC | Mechanical load component per ANSI/ASHRAE Standard 90.4-2016 is calculated by the sum of all cooling, fan, pump, and heat rejection annual energy use divided by the data center ITE energy (annualized MLC) or the sum of all cooling, fan, pump, and heat rejection design power divided by the data center ITE design power (design MLC). |
| TC | Technical Committee |
| W1 – W5 | ASHRAE liquid cooling classes for liquid-cooled IT equipment |

History of ASHRAE TC 9.9

In 1999, a group of thermal engineers from different IT manufacturing companies formed a thermal management consortium. This consortium evolved into ASHRAE Technical Committee 9.9 (TC 9.9). This history was documented in articles by Roger Schmidt (2012) [1] and Don Beaty (2005) [2]. This article serves as a continuation of those articles in documenting the activities of TC 9.9 since then.

TC 9.9 was formed in response to the lack of effective information transfer between the building, HVAC, and IT industries. Its mission is to be recognized by all areas of the datacom industry as the unbiased engineering leader in HVAC and an effective provider of technical datacom information. Since its formation, TC 9.9 has grown into one of the most active ASHRAE technical committees and, with roughly 400 members, the largest. Its membership spans a wide range of disciplines within the datacom industry: producers of datacom equipment (i.e., computing hardware, software, and services), producers of facility equipment (i.e., HVAC, DCIM, rack, and power solutions), users of datacom equipment (i.e., facility owners, operators, and managers), and general-interest members (i.e., government, utilities, consultants, academia, and laboratories).

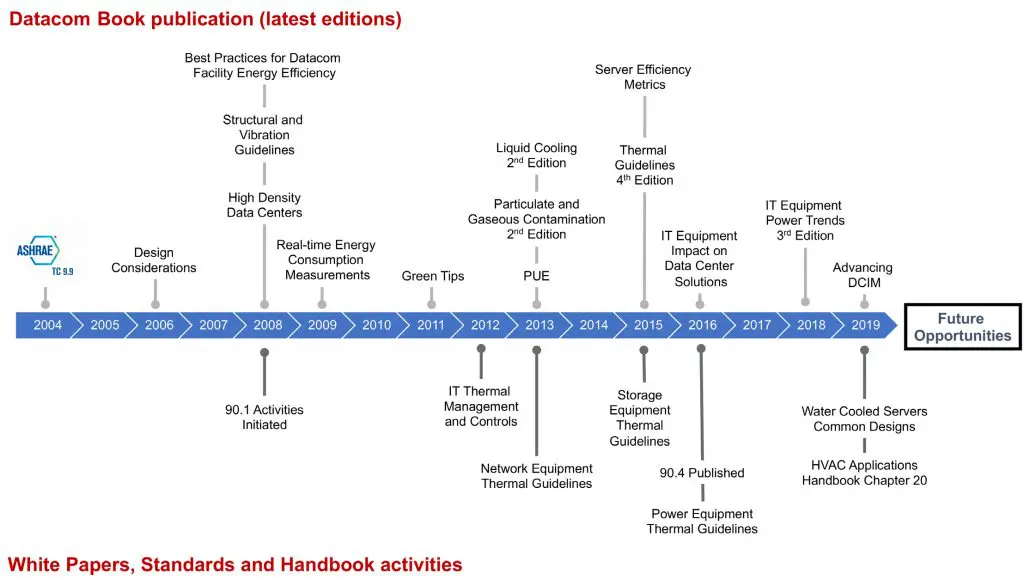

The activities of TC 9.9 have spanned all aspects of data center design and operation. Figure 1 provides a historical perspective on the activities of the committee, dating back to its formation in 2004. One of the cornerstone contributions of TC 9.9 has been the Datacom Series books. The series started with the publication of the Thermal Guidelines for Data Processing Environments and has been expanded to include a diverse range of topics, including data center structural and vibration guidelines, server performance characterization, and particulate and gaseous contamination, to name a few.

As of the publication of this article, 14 books have been released with several having multiple editions. These books serve as essential training for anybody with an interest in the datacom industry and have become global resources, with several having been translated into Mandarin and Spanish, and others being incorporated into regulation to support data center energy efficiency initiatives. In addition to the book series, TC 9.9 has played a role in the development of other significant resources.

Historically, TC 9.9 has initiated a new topic through the publication of a white paper, which is made freely available to the industry. This allows the material to be published in a timely fashion as either demand is expressed by the industry or a significant gap is seen in the industry by the committee. Subsequently, in many cases, this material is further developed and expanded into a Datacom Series book.

To supplement these activities, TC 9.9 also engages in industry/academia research programs. Furthermore, the material in these publications is used to develop other resources available through ASHRAE, including a new chapter in the ASHRAE HVAC Applications Handbook [3] and ANSI/ASHRAE Standard 90.4-2016 Energy Standard for Data Centers [4].

The remaining sections of this paper will highlight a few of the most recent activities of the committee that currently have broad interest amongst data center professionals globally.

Thermal Guidelines for Data Processing Environments

Now in its fourth edition, Thermal Guidelines for Data Processing Environments [5] remains the foundation of the Datacom Series. When first published, the thermal guidelines represented the first comprehensive set of temperature and humidity conditions, established by the IT manufacturers, that linked the design of datacom equipment (e.g., servers and storage) to the design of the data center. They gave data centers guidance on operating the datacom equipment for optimal performance, highest reliability, and lowest power consumption.

This publication has become the de facto standard for the environmental design and operation of electronic equipment installed in a datacom facility. Furthermore, in 2018, the European Commission approved an Ecodesign Regulation for servers and data storage products [6]. As part of this regulation, IT manufacturers will be required to declare the environmental class of their product according to the ASHRAE environmental classes defined in the Thermal Guidelines for Data Processing Environments publication.

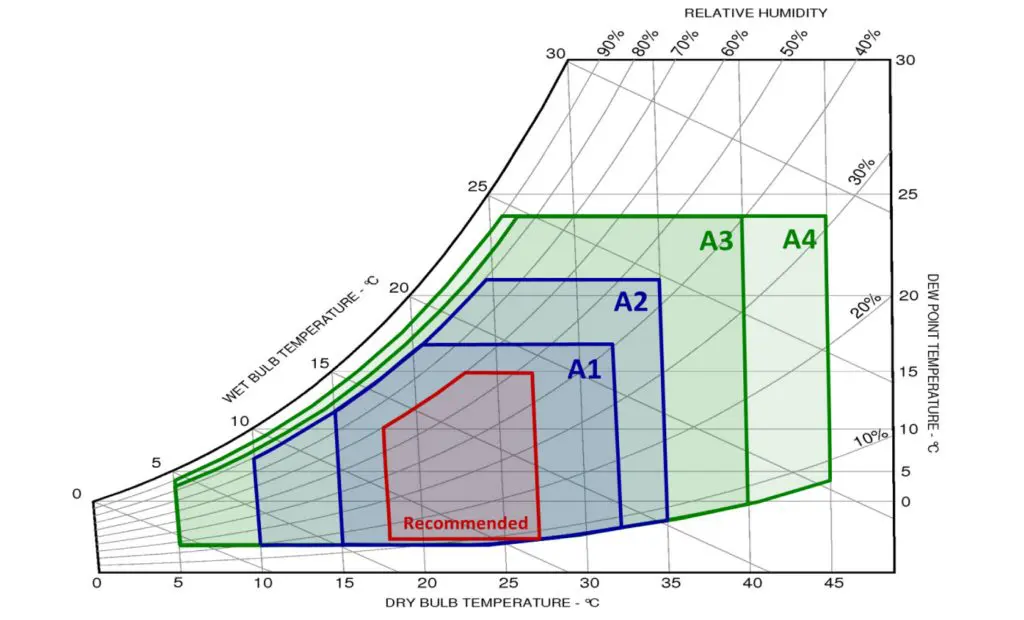

Before the first edition was published in 2004, there was no single source in the data center industry for ITE temperature and humidity requirements. In the second edition of the Thermal Guidelines for Data Processing Environments, the recommended envelope was expanded to give data center operators guidance on maintaining high reliability while also operating their data centers in the most energy-efficient manner.

This envelope was created for general use across all types of businesses and conditions. The second edition also introduced new allowable envelopes (A1 and A2) that expanded the maximum allowable dry-bulb temperature to 32°C and 35°C, respectively, with a maximum relative humidity limit of 80% and a minimum relative humidity limit of 20%. These envelopes also differed in the maximum allowable dew point temperature. The allowable envelopes gave data center operators the flexibility to choose an operating envelope that matched their business needs and to weigh the additional cooling-system energy savings against the deleterious effects on total cost of ownership that may result from operating outside the recommended range.
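As a rough illustration of how the limits quoted above might be used in monitoring, the following Python sketch checks a measured inlet condition against the A1 and A2 dry-bulb and relative humidity limits stated in this article. It is a minimal sketch, not the full class definition: it deliberately ignores the dew point and minimum-temperature limits, which are not given here, and later editions changed the humidity limits. The names `AllowableEnvelope` and `within_quoted_limits` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AllowableEnvelope:
    """Subset of an allowable envelope: only the limits quoted in the article."""
    name: str
    max_dry_bulb_c: float   # maximum allowable dry-bulb temperature, deg C
    min_rh_pct: float       # minimum relative humidity, percent
    max_rh_pct: float       # maximum relative humidity, percent


# Limits as quoted in the text for the second edition; dew point limits
# and minimum temperatures are intentionally omitted (not given here).
ENVELOPES = {
    "A1": AllowableEnvelope("A1", 32.0, 20.0, 80.0),
    "A2": AllowableEnvelope("A2", 35.0, 20.0, 80.0),
}


def within_quoted_limits(envelope: AllowableEnvelope,
                         dry_bulb_c: float, rh_pct: float) -> bool:
    """Return True if the condition satisfies the quoted upper temperature
    limit and the quoted relative humidity range."""
    return (dry_bulb_c <= envelope.max_dry_bulb_c
            and envelope.min_rh_pct <= rh_pct <= envelope.max_rh_pct)


if __name__ == "__main__":
    # Hypothetical inlet measurement: 33 deg C at 50% relative humidity.
    for name, env in ENVELOPES.items():
        print(name, within_quoted_limits(env, 33.0, 50.0))
```

A real check would also need the dew point and lower temperature limits from the current edition of the guidelines.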

With the further pursuit of energy efficiency, the third edition added two expanded allowable envelopes (A3 and A4) to the A1 and A2 envelopes already documented in the second edition. These new classes enabled near full-time use of free cooling techniques in the vast majority of the world’s climates. However, using these envelopes added complexity and trade-offs in terms of energy, reliability, and resiliency that require more careful evaluation by the data center owner because of the potential impact on the IT equipment to be supported. The third edition also introduced, for the first time, environmental classes for liquid-cooled IT equipment (W1 – W5).

As the datacom industry looked to further improve data center energy efficiency, from 2011 to 2014, ASHRAE funded research [7] to investigate the risk of electrostatic discharge (ESD) related events in data centers that operate at lower humidity. The results from this study showed that data centers could be operated with relative humidity levels as low as 8% without any noticeable impact on equipment reliability.

As a result of this study, the ASHRAE environmental classes were further expanded for data centers that implement a standard set of ESD-mitigation procedures. The expansion increases the energy saved in data centers by not requiring humidification at low moisture levels. These expanded envelopes are shown in Figure 2.

As has been the case since the second edition, the recommended environmental envelope provides guidance on where facilities should be designed to provide long-term reliability and energy efficiency of the IT equipment. The allowable envelopes (A1 – A4) are where IT manufacturers test their equipment in order to verify that it will function but ultimately are meant for short-term operation. The allowable classes may enable facilities in many geographical locations to operate year-round without the use of mechanical refrigeration, which can provide significant savings in capital and operating expenses in the form of energy use.

IT Equipment Power Trends

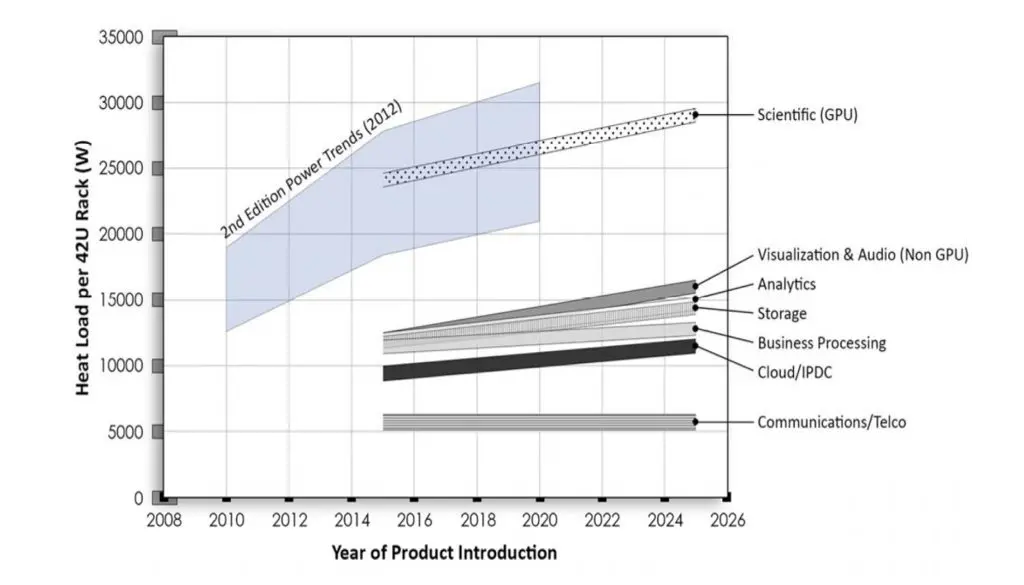

One of the first tasks of the thermal management consortium, referenced above, was the publication of power trends for IT equipment through the Uptime Institute. About every five years, ASHRAE TC 9.9 has released updated power trends. Now in its third edition, IT Equipment Power Trends [8] (formerly Datacom Equipment Power Trends and Cooling Applications) contains the best-known hardware power trends through 2025. For the first time in the series, the power trends have been segregated by workload type. This is a significant change from previous editions, which focused only on the power trends for servers of a given form-factor (e.g., 1U vs. 2U).

Delineating the power trends by workload type gives the user a more customized methodology to assess the power for a given configuration and/or workload. Of most practical use in the third edition is the inclusion of the compound annual growth rate (CAGR) of power for each workload and server form-factor. Data center owners can apply the CAGR to measurements of their current system power to obtain realistic projections of future power needs for their specific deployment.
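The projection described above is ordinary compound growth: future power equals current power multiplied by (1 + CAGR) raised to the number of years. The Python sketch below illustrates the arithmetic. The function name `project_power` and the numbers in the demo are hypothetical; actual CAGR values should be taken from the book for the relevant workload and form-factor.

```python
def project_power(current_watts: float, cagr: float, years: int) -> float:
    """Project power after `years` of compound growth.

    cagr is the compound annual growth rate as a fraction (0.05 means 5%).
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    return current_watts * (1.0 + cagr) ** years


if __name__ == "__main__":
    measured_watts = 450.0   # hypothetical measured power of one server
    assumed_cagr = 0.05      # hypothetical CAGR, not a value from the book
    for year in range(6):
        projected = project_power(measured_watts, assumed_cagr, year)
        print(f"year +{year}: {projected:.0f} W")
```

Starting from a measured value rather than a nameplate rating keeps the projection tied to the actual deployment, which is the use the text describes.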

The third edition of IT Equipment Power Trends breaks down the workload types into eight general categories: Scientific, Analytics, Business Processing, Cloud / Internet Portal Data Center, Visualization & Audio, Communications / Telco, Storage, and Networking. The power trends by workload type reflect the change in the IT industry to support users’ needs in terms of server configurations, with targeted types of workloads to maximize IT equipment performance and efficiency.

The impact the workload has on the overall power trends is evident from Figure 3, which provides a historical perspective of the power trends for 2U, 2-processor servers. It is clear that a similar server, configured differently to meet a specific workload, can have widely different power dissipation. Ultimately, the move to workload-based power trends should go a long way toward helping data center operators design a more efficient data center that supports many generations of IT equipment.

Advancing DCIM with IT Equipment Integration

The newest book in the ASHRAE Datacom Series, Advancing DCIM with IT Equipment Integration [9], aims to demystify and extend the implementation of data center infrastructure management (DCIM) tools. With the large number of available data sources within the data center, DCIM has the potential to be the next step in further improving data center energy efficiency and increasing data center resiliency. To enable a more holistic view of the data center, DCIM requires the integration of many disparate data sources: facility equipment, IT equipment, Internet of Things devices, etc. This level of integration has failed to garner widespread adoption because of the sheer effort required to program and implement the large number of proprietary protocols, nomenclatures, and implementations on the market.

ASHRAE DCIM Compliance for IT Equipment (CITE), for the first time, establishes an alignment on a common set of power and cooling telemetry and metrics within the IT industry for servers. ASHRAE TC 9.9 engaged with the industry standards body the Distributed Management Task Force (DMTF) to promote industry adoption of CITE. The DMTF Redfish implementation of CITE provides the proper schema mapping, physical context, and reading properties and has been adopted and accepted into the DMTF Redfish API Schema, release 2018.3.
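To give a sense of what consuming this kind of telemetry might look like, the Python sketch below groups temperature readings from a Redfish-style Thermal payload by physical context. The property names (`Temperatures`, `PhysicalContext`, `ReadingCelsius`) are assumed to follow the Redfish Thermal resource; the sample payload and its values are invented for illustration, and the specific set of sensors that CITE requires is not listed in this article. The helper `readings_by_context` is hypothetical.

```python
import json
from typing import Dict, List

# Invented sample payload shaped like a Redfish Thermal resource.
SAMPLE_THERMAL = """
{
  "@odata.id": "/redfish/v1/Chassis/1/Thermal",
  "Temperatures": [
    {"Name": "Inlet Temp", "PhysicalContext": "Intake", "ReadingCelsius": 24.0},
    {"Name": "CPU1 Temp", "PhysicalContext": "CPU", "ReadingCelsius": 61.0},
    {"Name": "Exhaust Temp", "PhysicalContext": "Exhaust", "ReadingCelsius": 38.5}
  ]
}
"""


def readings_by_context(thermal: dict) -> Dict[str, List[float]]:
    """Group temperature readings (deg C) by their PhysicalContext value."""
    grouped: Dict[str, List[float]] = {}
    for sensor in thermal.get("Temperatures", []):
        reading = sensor.get("ReadingCelsius")
        if reading is None:
            continue  # skip sensors that are not reporting a value
        context = sensor.get("PhysicalContext", "Unknown")
        grouped.setdefault(context, []).append(reading)
    return grouped


if __name__ == "__main__":
    payload = json.loads(SAMPLE_THERMAL)
    for context, values in readings_by_context(payload).items():
        print(context, values)
```

Because every compliant server would report the same property names with the same physical context, a DCIM tool could aggregate inlet and exhaust temperatures across vendors without per-vendor parsing, which is the integration burden the text describes.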

Advancing DCIM with IT Equipment Integration [9] provides many more details on how an organization can leverage DCIM systems to help normalize and organize the large quantity of data that can be collected and how this data can be used to calculate key metrics to improve the data center’s operation. It also highlights key use cases that are already being practiced in many DCIM deployments.

ANSI/ASHRAE Standard 90.4-2016 Energy Standard for Data Centers

In 2010, in an effort to promote data center energy efficiency, Standard 90.1 Energy Standard for Buildings Except Low-Rise Residential Buildings [10] incorporated data centers. Prior to this, data centers were exempt due to their mission critical nature. However, data centers have markedly different load profiles and rate of technology innovation compared to the general commercial building industry, making the prescriptive nature of 90.1 relatively unattractive to the data center industry. An ASHRAE Standard 90.4 committee was formed, with participation from ASHRAE TC 9.9.

In 2016, ANSI/ASHRAE Standard 90.4-2016 Energy Standard for Data Centers [4] was released. This standard establishes the minimum energy efficiency requirements of data centers for design and construction, guidelines for creating a plan for operation and maintenance, and recommendations for utilizing on-site or off-site renewable energy resources. Standard 90.4 is a performance-based approach that provides flexibility for data center designers to innovate in the design, construction, and operations of their facility.

Standard 90.4 introduced two new design metrics: the mechanical load component (MLC) and the electrical loss component (ELC). Calculations of the MLC and ELC are made and then compared to the maximum allowable values provided in the standard. The MLC can be calculated on either an annualized or design basis and must be evaluated based on the data center’s climate zone. The design ELC is the combined losses of three segments of the electrical chain: incoming electrical service segment, UPS segment, and ITE distribution segment.
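The MLC definition above reduces to a simple ratio, which the following Python sketch illustrates. The same function serves for the design MLC (inputs in kW of design power) and the annualized MLC (inputs in kWh of annual energy), provided all inputs share one unit. The function names, the sample loads, and the maximum value used in the demo are hypothetical; real maximum values depend on climate zone and must be taken from the standard.

```python
def mechanical_load_component(cooling: float, fan: float, pump: float,
                              heat_rejection: float, ite: float) -> float:
    """Sum of cooling, fan, pump, and heat rejection divided by ITE.

    Use design power for the design MLC or annual energy for the
    annualized MLC; all arguments must be in the same unit.
    """
    if ite <= 0:
        raise ValueError("ITE power or energy must be positive")
    return (cooling + fan + pump + heat_rejection) / ite


def meets_maximum(calculated: float, maximum_allowed: float) -> bool:
    """Return True if the calculated value does not exceed the maximum."""
    return calculated <= maximum_allowed


if __name__ == "__main__":
    # Hypothetical design powers in kW for a 1000 kW design ITE load.
    design_mlc = mechanical_load_component(
        cooling=180.0, fan=60.0, pump=25.0, heat_rejection=35.0, ite=1000.0)
    hypothetical_max_mlc = 0.35  # placeholder, not a value from the standard
    print(f"design MLC = {design_mlc:.2f}")
    print("within maximum:", meets_maximum(design_mlc, hypothetical_max_mlc))
```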

The designer can show compliance with Standard 90.4 in one of two ways: by showing that the calculated MLC and ELC values do not exceed the values contained in the standard at both 100% and 50% of the design IT load, or by following an alternative compliance path that allows trade-offs between the MLC and ELC. Standard 90.4 does not use the Power Usage Effectiveness (PUE) defined by The Green Grid. One reason is that PUE is an operational metric based on measured energy use data rather than design calculations. Accordingly, the design calculations contained in Standard 90.4 are unlikely to match the actual operational PUE of the data center.

Conclusions

ASHRAE Technical Committee 9.9: Mission Critical Facilities, Data Centers, Technology Spaces, and Electronic Equipment remains one of the most active technical committees within ASHRAE and the data center industry. The committee is currently focused on a number of efforts, including:

- Research into the impact of high humidity and gaseous contamination on IT equipment reliability

- Guidelines for energy modeling to show compliance with Standard 90.4

- Research on developing guidelines for computational fluid dynamics modeling of data centers

- Creation of expanded content to support the rapid emergence of liquid cooling

This article does not cover all activities undertaken by the committee. The interested reader is referred to the TC 9.9 website (http://tc0909.ashraetcs.org) for the latest information. The website contains information on upcoming TC 9.9 meetings, previous meeting minutes, white papers, and links to purchase the Datacom Series books.

References

[1] Schmidt, R, December 18, 2012. “A History of ASHRAE Technical Committee TC9.9 and its Impact on Data Center Design and Operation.” Electronics Cooling. Online: https://www.electronics-cooling.com/2012/12/a-history-of-ashrae-technical-committee-tc9-9-and-its-impact-on-data-center-design-and-operation/ [Accessed: July 2, 2019]

[2] Beaty, D, February 1, 2005. “ASHRAE Committee Formed to Establish Thermal Guidelines for Datacom Facilities.” Electronics Cooling. Online: https://www.electronics-cooling.com/2005/02/ashrae-committee-formed-to-establish-thermal-guidelines-for-datacom-facilities/ [Accessed: July 2, 2019]

[3] ASHRAE, 2019. ASHRAE Handbook – HVAC Applications. Atlanta: ASHRAE.

[4] ASHRAE, 2016. ANSI / ASHRAE Standard 90.4-2016 Energy Standard for Data Centers. Atlanta: ASHRAE.

[5] ASHRAE, 2015. Thermal Guidelines for Data Processing Environments, 4th Edition. Atlanta: ASHRAE.

[6] Commission Regulation (EU) 2019/424, 15 March 2019. Ecodesign requirements for servers and data storage products pursuant to Directive 2009/125/EC of the European Parliament and of the Council and amending Commission Regulation (EU) No 617/2013.

[7] ASHRAE, 2015. “RP-1499 – The Effect of Humidity on Static Electricity Induced Reliability Issues of ICT Equipment in Data Center.” Atlanta: ASHRAE.

[8] ASHRAE, 2018. IT Equipment Power Trends, 3rd Edition. Atlanta: ASHRAE.

[9] ASHRAE, 2019. Advancing DCIM with IT Equipment Integration. Atlanta: ASHRAE.

[10] ASHRAE, 2016. ANSI / ASHRAE / IES Standard 90.1-2016 Energy Standard for Buildings Except Low-Rise Residential Buildings. Atlanta: ASHRAE.