The fourth and future industrial revolutions will set new requirements for smart cities and factories, autonomous vehicles, augmented reality, smartphones, etc. These requirements will result in more distributed architectures and a broader convergence of new technologies and developments, transforming the Internet of Things (IoT) into the Internet of Everything (IoE). Centralized cloud computing has evolved to provide scalable computation capable of processing large amounts of data, while also ensuring storage and provisioning of resources according to user requirements. The explosion of cloud- and internet-based content has created new business opportunities with a natural “shift to the edge”, closer to where the users are, in order to meet bandwidth requirements and to deliver faster services with minimal latency, the latter being fundamentally limited by the propagation speed of light in optical fibers. These demands, in turn, create a tremendous need for distributed datacenters where some portion of the compute function is shifted from the centralized cloud. The “shift to the edge” represents the most profound change in network architectures, one whose impact will last for decades to come. The adoption of edge computing in 5G radio access network (RAN) infrastructure is expected to kick off with enhanced mobile broadband as its first use case. Data scientists have predicted that by the end of 2025, there will be 2.8 billion 5G subscriptions, compared to about 190 million in 2020 [1, 2].
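The latency floor set by light propagation in fiber can be made concrete with a rough calculation. The sketch below assumes a typical group index of about 1.47 for silica fiber (an assumed value, not taken from this article) and ignores switching, queuing and processing delays. The distances are purely illustrative.

```python
# Rough propagation delay over optical fiber (illustrative sketch).
C_VACUUM_M_PER_S = 299_792_458.0  # speed of light in vacuum
FIBER_GROUP_INDEX = 1.47          # ASSUMPTION: typical value for silica fiber


def fiber_delay_ms(distance_km: float, round_trip: bool = False) -> float:
    """Return the propagation delay in milliseconds over `distance_km` of fiber."""
    speed_m_per_s = C_VACUUM_M_PER_S / FIBER_GROUP_INDEX
    delay_s = (distance_km * 1_000.0) / speed_m_per_s
    return 1_000.0 * delay_s * (2.0 if round_trip else 1.0)


if __name__ == "__main__":
    # Hypothetical distances: a nearby edge site vs. a distant cloud region.
    for km in (10, 100, 1_000):
        rtt = fiber_delay_ms(km, round_trip=True)
        print(f"{km:>5} km of fiber: round trip ~ {rtt:.2f} ms")
```

Under these assumptions each kilometer of fiber adds roughly 5 µs one way, so a compute site hundreds of kilometers away consumes milliseconds of the latency budget before any processing takes place.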

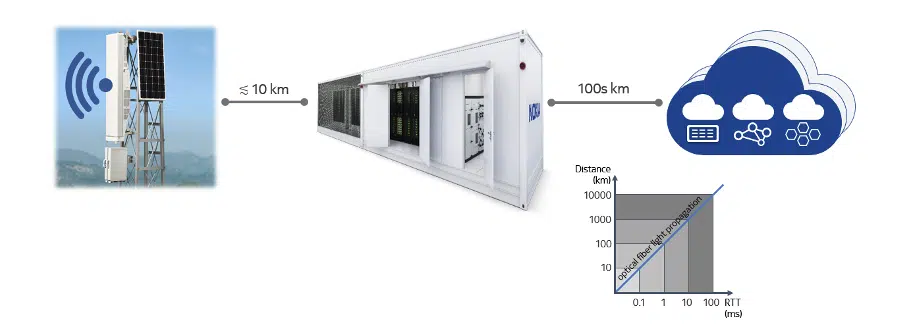

Emerging 5G networks are expected to have a service-based architecture, meaning that network functions can be delivered as service components with the implementation of edge computing on cloud-based hardware. In this context, 5G will become a major demand driver for distributed datacenters, which will be used to enhance the network capabilities of an increased number of radio access points. It has been reported that global edge computing hardware represents a potential value of $175 billion to $215 billion by 2025 [3]. Further forecasts show that over $700 billion in cumulative Capital Expense (CAPEX) will be spent within the next decade on edge information technology (IT) infrastructure and data center facilities. This includes edge equipment at access network sites (e.g., radio base station tower sites, see Figure 1), pre-aggregation sites (e.g., street-side cabinets), and aggregation and central office sites rearchitected as data centers [4, 5]. The major physical deployment challenges for edge datacenters can be summarized as follows:

• Enabling quick and volume scaling in order to meet the demands of dynamic cloud-based services;

• Increasing hardware densification, quantified as kW/m³, while reducing capital and operating expenses;

• Deploying energy efficient and eco-friendly installations, requiring minimal supporting infrastructure;

• Providing hardware platforms that are simple, reliable and easily maintainable, i.e., good serviceability.

Figure 1. Example of a cloud RAN architecture. The mobile base station (left) is connected by optical fiber to a closely located containerized edge datacenter (middle) that may handle radio processing aggregation and/or compute for low-latency service delivery. Non-latency critical traffic is sent to the centralized cloud compute (right) via the optical network. The architecture is fundamentally driven by the finite propagation speed of light along an optical fiber as indicated by the diagram in the lower right.

The most recent survey conducted by the Uptime Institute has indicated an average Power Usage Effectiveness (PUE) of 1.59 in 2020 across 1100 datacenters worldwide [6]. This implies that the IT energy consumption portion is ≈ 63% and the non-IT energy consumption portion (thermal management solution, lights, equipment losses, etc.) is ≈ 37%. Our internal studies indicate that lighting, uninterruptible power supply systems and other facility losses typically do not exceed ~5% of the total energy budget; therefore the average thermal management energy consumption is ≈ 32%. The latter can be higher, as mentioned in the same Uptime Institute report: “…there are still thousands of older datacenters that cannot be economically or safely upgraded to become energy efficient…”, including the ones to be re-used in the edge network architectures, e.g., CORD (Central Office Re-architected as a Datacenter). These results, combined with the massive growth of edge compute, necessitate a paradigm shift in the thermal management solution to support emerging 5G networks. The next two sections provide an overview of the proposed thermal management solution, including a discussion on the performance metrics, and a Total Cost of Ownership (TCO) analysis for a specific 5G use case, namely a containerized data center deployment, highlighting the business benefits.
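The energy shares quoted for the survey-average PUE follow directly from the definition PUE = total facility energy / IT energy. The short sketch below reproduces that arithmetic. The function and parameter names are illustrative, and the 5% allowance for lighting, UPS and other losses is the estimate stated in the text, used here as an upper bound.

```python
def energy_shares(pue: float, other_losses_share: float = 0.05) -> dict:
    """Split total facility energy into IT, non-IT and cooling shares.

    Since PUE = total energy / IT energy, the IT share is 1 / PUE.
    `other_losses_share` covers lighting, UPS and similar losses as a
    fraction of total energy (~5% per the estimate quoted in the text).
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_share = 1.0 / pue
    non_it_share = 1.0 - it_share
    # Whatever is left after the other losses is attributed to cooling.
    cooling_share = max(non_it_share - other_losses_share, 0.0)
    return {"it": it_share, "non_it": non_it_share, "cooling": cooling_share}


if __name__ == "__main__":
    for name, share in energy_shares(1.59).items():
        print(f"{name:>7}: {100 * share:.0f}%")
```

For a PUE of 1.59 this gives shares of about 63% (IT), 37% (non-IT) and 32% (cooling), matching the figures in the text.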

TWO-PHASE THERMAL MANAGEMENT SOLUTION

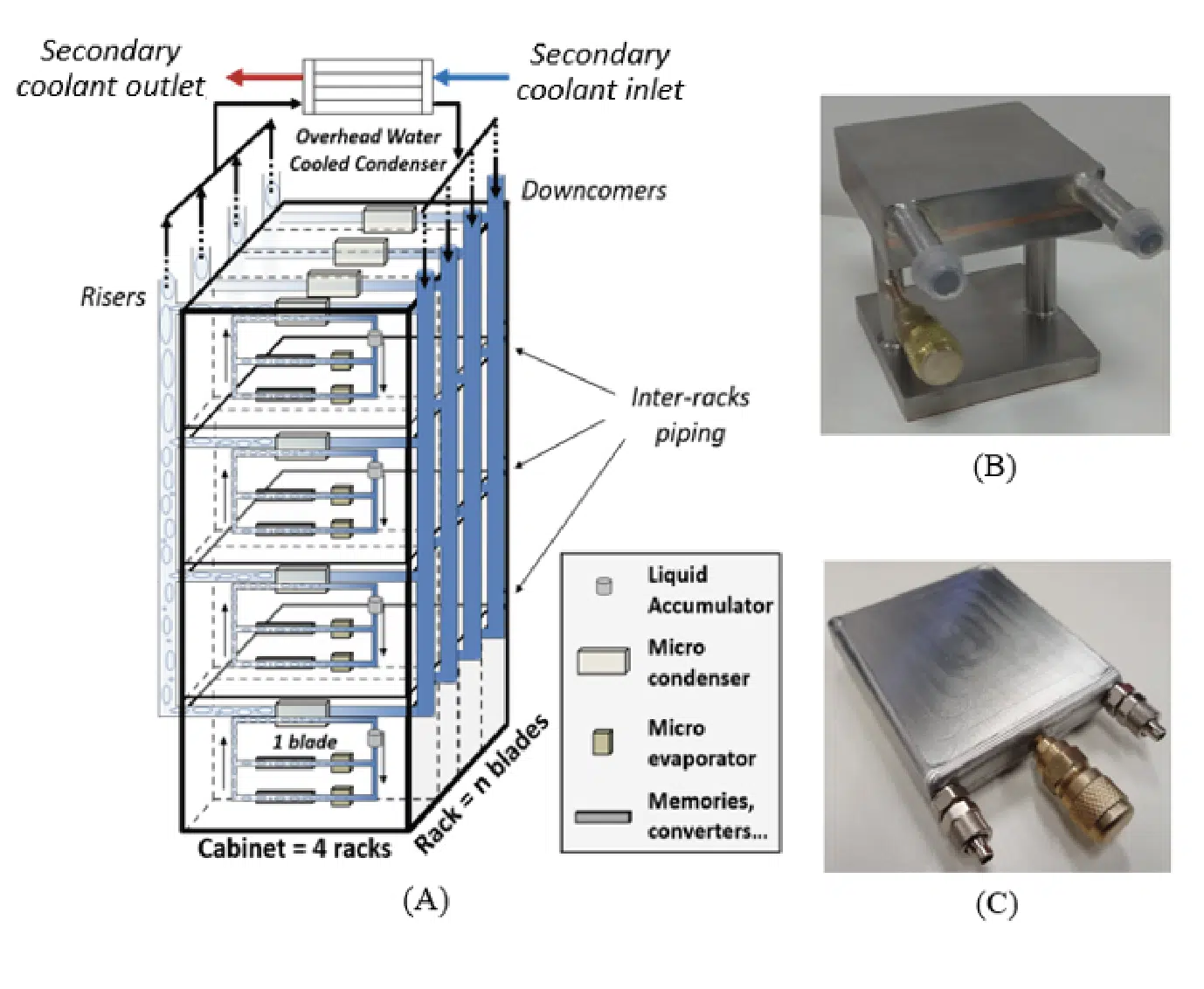

Passive two-phase cooling represents a viable, long-term solution for efficient cooling of datacenters, achieving high performance and reliability [7]. The proposed technology leverages several two-phase heat sink implementations, e.g., cold plate evaporators, low-height thermosyphons, thermo-heat pipes, etc., to dissipate the heat generated by the critical components inside the servers. The heat is then transferred to multiple rack-level thermosyphons, equipped with an overhead compact heat exchanger that dissipates the total heat from the rack into the room-level cooling loop. A schematic of the technology is shown in Figure 2A, while close-up views of the server-level heat sinks are shown in Figures 2B and 2C.

The system-level advantages of this thermal management approach include: passive thermal energy transport to the rack edge; high thermal performance, obviating the need for active cooling at the room level (e.g., air-conditioning) under European Telecommunications Standards Institute (ETSI) and Network Equipment-Building System (NEBS) deployment environments; and the use of reliable two-phase heat sinks. The performance data of the heat sink prototypes shown in Figures 2B and 2C can be found in Amalfi et al. [8] and Cataldo et al. [9].

Figure 2. Passive thermal management solution for next-generation high-performance datacenters. (A) Rack-level thermosyphon architecture to transport heat from the servers to a heat exchanger at the periphery of the rack. Examples of server-level two-phase heat sinks: (B) a thermosyphon with a minimum thermal resistance of ≈ 0.085 K/W; (C) a thermo-heat pipe with a minimum thermal resistance of ≈ 0.065 K/W.
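To give a feel for what these thermal resistances mean, the temperature rise across a heat sink can be estimated as ΔT = R_th × Q. The sketch below applies this to the two minimum resistances quoted in the caption. The 200 W heat load is a hypothetical value chosen for illustration, and the sketch assumes the quoted minimum resistance holds at that load, which the caption does not state.

```python
# Minimum thermal resistances quoted in the Figure 2 caption (K/W).
HEAT_SINK_RESISTANCE_K_PER_W = {
    "thermosyphon (Fig. 2B)": 0.085,
    "thermo-heat pipe (Fig. 2C)": 0.065,
}

HYPOTHETICAL_HEAT_LOAD_W = 200.0  # ASSUMPTION: illustrative chip power only


def temperature_rise_k(thermal_resistance_k_per_w: float, heat_load_w: float) -> float:
    """Temperature rise (K) across a heat sink: delta_T = R_th * Q."""
    return thermal_resistance_k_per_w * heat_load_w


if __name__ == "__main__":
    for name, r_th in HEAT_SINK_RESISTANCE_K_PER_W.items():
        rise = temperature_rise_k(r_th, HYPOTHETICAL_HEAT_LOAD_W)
        print(f"{name}: ~{rise:.0f} K rise at {HYPOTHETICAL_HEAT_LOAD_W:.0f} W")
```

At this hypothetical load the rise is on the order of 13 K to 17 K. The measured performance over a range of heat loads is reported in [8] and [9].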

CASE STUDY: BUSINESS BENEFIT OF ADVANCED THERMAL MANAGEMENT OF EDGE COMPUTE IN 5G NETWORKS

Radio access networks (RANs) consume significant amounts of energy and, despite massive improvements in efficiency in terms of J/bit, this consumption is expected to grow in the 5G era. Important 5G radio architectures, including multi-band remote radio heads (RRH) and massive Multiple Input Multiple Output (MIMO) active antennas, coupled with edge datacenters for radio processing aggregation and low-latency compute capability, will be key features of these advanced RANs. In principle, passive two-phase cooling could be applied to efficiently cool multiple sub-systems across the 5G RAN. However, the main focus of this section is to investigate the impact of the thermal management solution for a containerized edge compute deployment, including a TCO analysis to highlight the potential economic benefits.

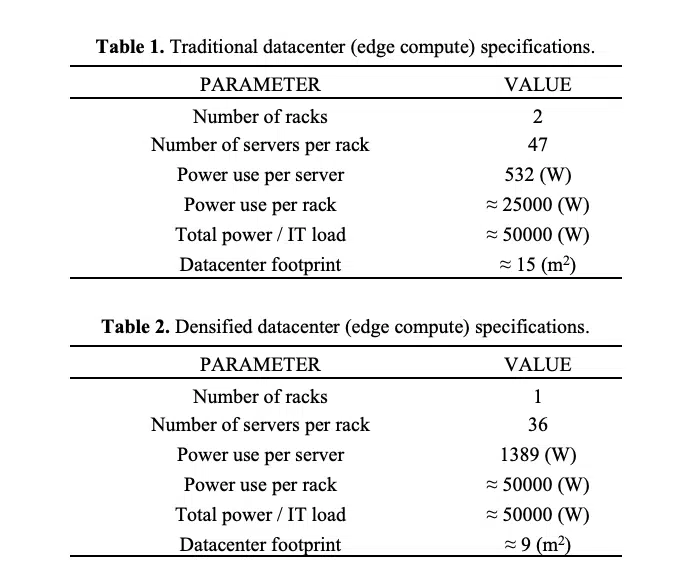

Air-based cooling coupled with in-row air-conditioning units is compared to passive two-phase cooling in a TCO analysis for a containerized edge datacenter considering the specifications listed in Table 1. In addition, Table 2 presents the case where the datacenter is reduced in size with a more densified IT hardware deployment, enabled by the use of the passive two-phase cooling technology (higher power servers and footprint reduction). The TCO analysis incorporates site infrastructure costs, IT capital costs, operating expenses and energy costs informed by historical deployment projects.

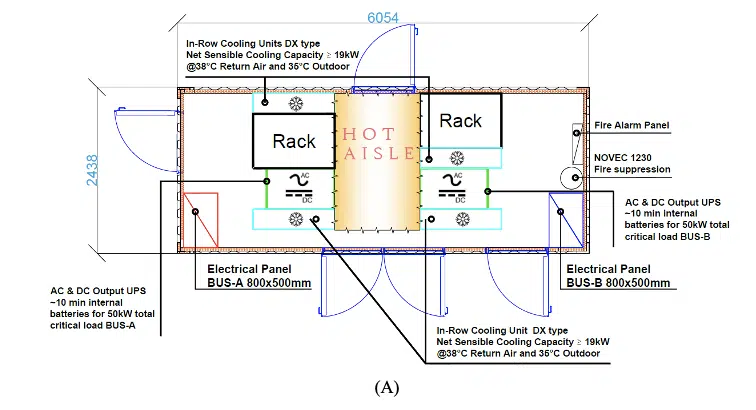

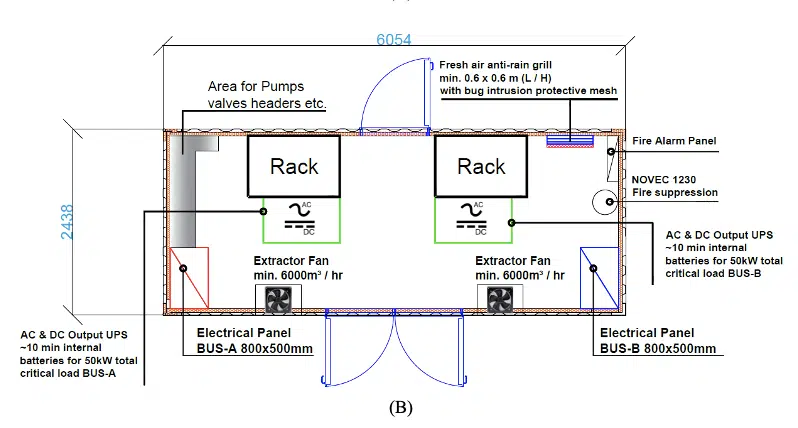

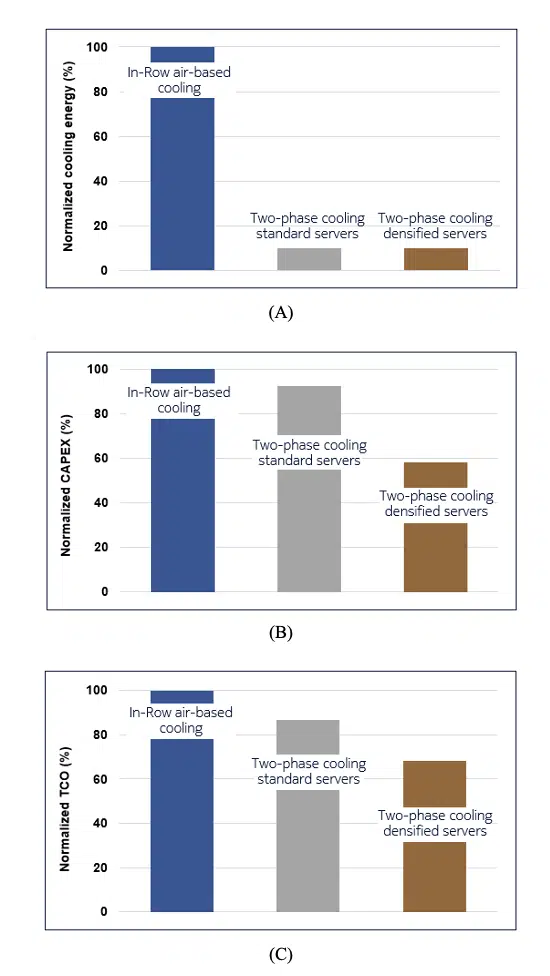

The layouts of the three deployment scenarios are illustrated in Figure 3. The baseline air-cooled edge datacenter (see Figure 3A) uses four in-row air-conditioning units for room-level cooling. The passive two-phase cooled edge datacenters (see Figures 3B and 3C) incorporate two dry-coolers and two ventilation fans for room-level cooling. The cost estimates of the subsystems, such as in-row cooling units, dry coolers, etc., are based on available market data. For the passive two-phase cooling solutions, the hardware costs of servers and racks take into account the additional expenses, relative to in-row air cooling, associated with quick couplings, inter-rack piping, server-level heat sinks (see Figures 2B and 2C), the overhead heat exchanger (see Figure 2A) and the refrigerant charge.

Figure 3. Deployment scenarios of a far-edge datacenter: (A) in-row air-based cooling with standard servers; (B) two-phase cooling with standard servers; (C) two-phase cooling with densified servers.

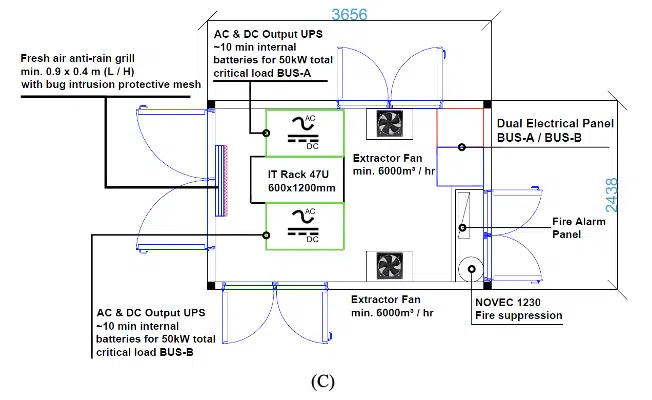

The economic benefits identified in the TCO analysis are discussed based on three figures of merit reported in Figure 4: the cooling energy, CAPEX, and TCO, all normalized with respect to the reference deployment scenario of in-row air-based cooling. There are significant energy savings when switching from in-row air cooling to passive two-phase cooling, due to the passive nature of the thermosyphons coupled with a more efficient cooling approach at the room level. PUE calculations for the two cases show a reduction from 1.38 to 1.08, where the cooling energy portion represents 24% and 3% of the total energy cost, respectively. CAPEX is reduced when going from air-based cooling to passive two-phase cooling with standard servers, mainly due to the cost savings of the in-row units. A more significant CAPEX reduction can be achieved when the datacenter is deployed with passive two-phase cooling and densified servers, due to the additional savings in the IT capital costs. This translates into a projected 32% TCO reduction for densified servers enabled by passive two-phase cooling in comparison to the baseline air-based cooling case, where it is assumed that the server cost per Watt is reduced by ≈ 50%.
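The quoted PUE values and cooling shares can be combined into a back-of-the-envelope estimate of the relative cooling energy. The sketch below assumes that both deployments carry the same IT load (so total energy scales with PUE) and that the 24% and 3% figures can be read as shares of total energy at a uniform electricity price. It is an illustrative calculation, not the TCO model behind Figure 4.

```python
def relative_cooling_energy(
    pue: float,
    cooling_share: float,
    ref_pue: float,
    ref_cooling_share: float,
) -> float:
    """Cooling energy relative to a reference case at equal IT load.

    Total energy per unit of IT load equals PUE, so cooling energy per
    unit of IT load is PUE * cooling_share (share of total energy).
    """
    cooling = pue * cooling_share
    ref_cooling = ref_pue * ref_cooling_share
    return cooling / ref_cooling


if __name__ == "__main__":
    # Values quoted in the text: air-cooled baseline vs. passive two-phase.
    ratio = relative_cooling_energy(1.08, 0.03, ref_pue=1.38, ref_cooling_share=0.24)
    print(f"two-phase cooling energy ~ {100 * ratio:.0f}% of the air-cooled baseline")
    print(f"total energy ~ {100 * 1.08 / 1.38:.0f}% of the air-cooled baseline")
```

Under these assumptions the two-phase case uses roughly one tenth of the baseline cooling energy and about 78% of the baseline total energy. Figure 4A reports the normalized values from the actual analysis.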

Figure 4. Summary of the results considering the three deployment scenarios introduced earlier: (A) normalized cooling energy per year; (B) normalized CAPEX per year; (C) normalized TCO per year.

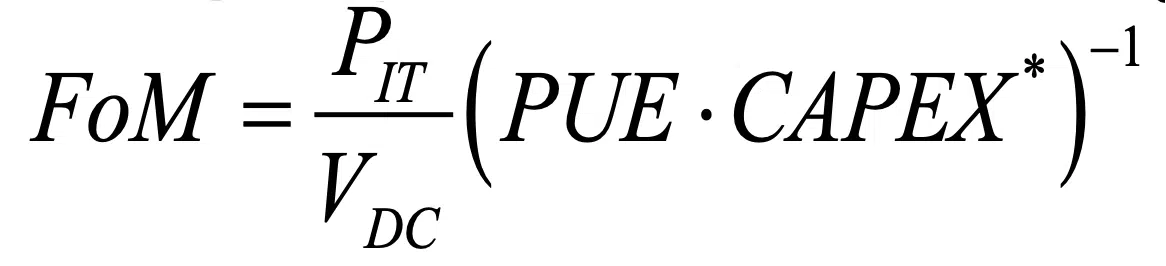

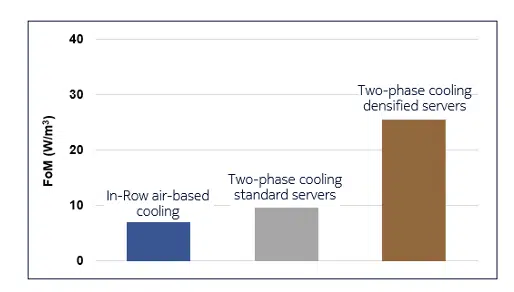

Finally, the three deployment scenarios of a far-edge datacenter, including in-row air-based cooling, two-phase cooling with standard servers and two-phase cooling with densified servers, are compared using an overall Figure of Merit (FoM) as reported in Figure 5. The FoM proposed here takes into account the IT load as a function of datacenter size, energy efficiency and datacenter deployment cost:

where P_IT is the IT load (W), V_DC is the overall datacenter volume (m³) and CAPEX* is the datacenter deployment cost normalized to the deployment cost of the reference air-cooling case (-), as reported in Figure 4B. The FoM favors low-cost, energy-efficient and dense IT deployments. Thus, the FoM increases when a far-edge datacenter is deployed with two-phase cooling. In particular, the highest FoM is found for two-phase cooling with densified servers, as the power density is maximized while PUE is optimized and CAPEX is reduced.
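As an illustration of how such a figure of merit behaves, the sketch below uses one form that is consistent with the stated dependencies: IT power density divided by PUE and by normalized CAPEX. This form is an assumption made for illustration and is not necessarily the expression used in this study. The PUE values are those quoted earlier; all other inputs (IT load, volumes, normalized CAPEX) are hypothetical placeholders, not data from Tables 1 and 2 or Figure 4.

```python
def figure_of_merit(p_it_w: float, v_dc_m3: float, pue: float, capex_norm: float) -> float:
    """ASSUMED FoM form: (P_IT / V_DC) / (PUE * CAPEX*).

    It rises with IT power density and falls with PUE and with CAPEX
    normalized to the air-cooled reference case.
    """
    if min(v_dc_m3, pue, capex_norm) <= 0:
        raise ValueError("volume, PUE and normalized CAPEX must be positive")
    return (p_it_w / v_dc_m3) / (pue * capex_norm)


# HYPOTHETICAL inputs for the three scenarios (placeholders only).
SCENARIOS = {
    "air-cooled, standard servers": dict(p_it_w=100e3, v_dc_m3=60.0, pue=1.38, capex_norm=1.0),
    "two-phase, standard servers": dict(p_it_w=100e3, v_dc_m3=60.0, pue=1.08, capex_norm=0.9),
    "two-phase, densified servers": dict(p_it_w=100e3, v_dc_m3=40.0, pue=1.08, capex_norm=0.7),
}

if __name__ == "__main__":
    baseline = figure_of_merit(**SCENARIOS["air-cooled, standard servers"])
    for name, params in SCENARIOS.items():
        print(f"{name}: FoM relative to baseline = {figure_of_merit(**params) / baseline:.2f}")
```

With any inputs of this kind, lowering PUE or CAPEX or shrinking the datacenter volume at constant IT load raises the FoM, which is the qualitative trend described in the text.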

CONCLUSIONS

Emerging 5G networks will enable new use cases and drive a services delivery model for the telecommunications industry. The need for reduced latency to deliver these new use cases, together with an increased density of radio access points, requires the deployment of edge compute to aggregate radio processing and shift some workloads from the cloud. Edge compute must be deployed in a way that maximizes energy efficiency and minimizes cost to establish the business case and achieve critical sustainability goals. Two-phase cooling applied at the edge is projected to deliver efficiencies rivaling highly optimized hyperscale data center deployments at costs significantly lower than those achieved in the current air-cooling paradigm.

Figure 5. Proposed FoM for the three deployment scenarios considered in this study.

REFERENCES

[1] M. Bourke, Working in More-than-Moore, Lam blog on technology, Lam Research, October 2019, (https://blog.lamresearch.com/working-in-more-than-moore/), [Accessed December 2020].

[2] G. Sharma, Big data & cloud computing: the roles & relationships, IEEE Computer Society, Publications/Tech News/Trends, 2020, (https://computer.org/publications/tech-news/trends/big-data-and-cloud-computing), [Accessed January 2021].

[3] J.M. Chabas, C. Gnanasambandam, S. Gupte, M. Mahdavian, New demand, new markets: What edge computing means for hardware companies, McKinsey & Company, November 2018, (https://mckinsey.com/industries/technology-media-and-telecommunications/our-insights/new-demand-new-markets-what-edge-computing-means-for-hardware-companies#), [Accessed November 2020].

[4] M. Trifiro, J. Smith, About State of the EDGE report, The Linux Foundation Projects, 2020, (https://stateoftheedge.com/about/), [Accessed January 2021].

[5] Technical Steering Team (TST), Central Office Re-architected as a Datacenter (CORD), Open Networking Foundation (ONF), (https://opennetworking.org/cord/), [Accessed December 2020].

[6] R. Bashroush, A. Lawrence, Beyond PUE: tackling IT’s wasted terawatts, Uptime Institute Survey, April 2020, (https://journal.uptimeinstitute.com/), [Accessed October 2020].

[7] R.L. Amalfi, F. Cataldo, J.R. Thome, The Future of Datacenter Cooling: Passive Two-Phase Cooling, Electronics Cooling Online Journal, Section of Data Centers, Free Air Cooling and Liquid Cooling, May 2020.

[8] R.L. Amalfi, F. Faraldo, T. Salamon, R. Enright, F. Cataldo, J.B. Marcinichen, J.R. Thome, Hybrid two-phase cooling technology for next-generation servers: thermal performance analysis, IEEE Intersociety Conference on Thermal and Thermo-mechanical Phenomena in Electronic Systems (ITHERM), 2021.

[9] F. Cataldo, Y.C. Crea, R.L. Amalfi, J.B. Marcinichen, J.R. Thome, Novel pulsating heat pipe for high density power data centres: performance and flow regime analysis, IEEE Intersociety Conference on Thermal and Thermo-mechanical Phenomena in Electronic Systems (ITHERM), 2021.