Abstract

Data centers face challenges in balancing space and power, with studies showing that when power density exceeds 7kW per rack, IT equipment space utilization drops to 50%. Traditional cooling methods are reaching their limits under the growing demands of deep learning and AI, which require ever more space. Two-phase immersion cooling offers simplicity and cost savings compared to traditional methods: submerging equipment in liquid allows significant energy savings and accommodates future load densities. This article examines power density from the perspective of two-phase immersion cooling and compares it with air cooling at higher densities. Two-phase immersion cooling can increase floor space density sixfold or more while simplifying server design and reducing costs. At higher densities, the cost of the fluid relative to that of the electronics becomes negligible.

Introduction

Increased power density (the amount of power per unit volume) is often listed among the top priorities of data center operators, who rightly perceive that higher rack density can drive down data center costs. However, in traditional air-cooled data centers, increased density does not necessarily translate into improved economics [1].

Higher-density servers increase IT capacity per rack, but this can strain fan power and computational efficiency [2]. This rise in IT density can reduce the data center footprint but requires adequate temperature and airflow conditions [3]. Capital costs escalate with the additional plena, filters, and heat exchangers that these denser racks require. Modern servers use multiple fans to direct air toward hot components, which increases power consumption, noise, and the risk of system failure [2]. While modern processor designs distribute work across many low-power processors to mitigate heat, air cooling still limits achievable processor density [3]. Liquid cooling technologies enable much higher power density. However, direct-to-chip water cooling and even single-phase liquid immersion cooling eventually reach density levels at which coolant flow becomes an engineering challenge, much as airflow does in air cooling [4-6].

Passive 2-phase immersion cooling (P2PIC) differs in this respect because many aspects of P2PIC improve with density. A certain level of density is required to justify this approach economically, and its economics continue to improve as density increases. To explore how and why this is so, it helps to think of power density not in traditional units like kW/rack or kW/m² but as Archimedes would, by volume displacement.

In this study, the true volume of the electronics and air-cooling hardware in a 2U server is measured in this way, permitting direct calculation of the fluid volume required to cool it by immersion. These data are then used to extrapolate to higher hardware power densities where the advantages of immersion emerge.

Experimental Procedures

Measurement of Power Density by Displacement

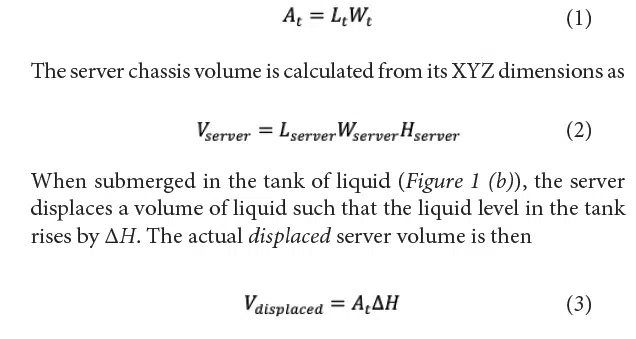

FIGURE 1 (a.) shows a tank of liquid and a server chassis. The cross-sectional area of the rectangular tank as seen from above is

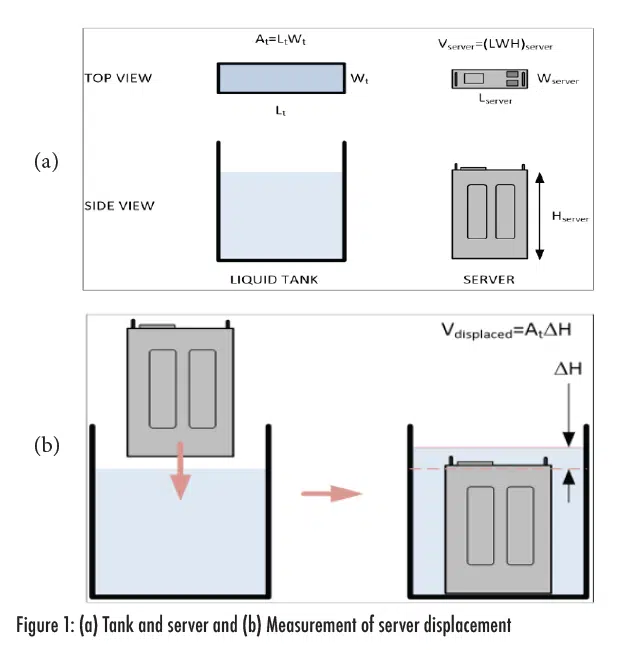

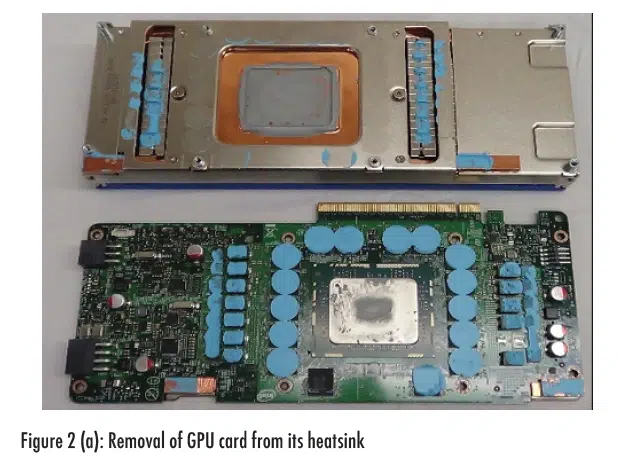

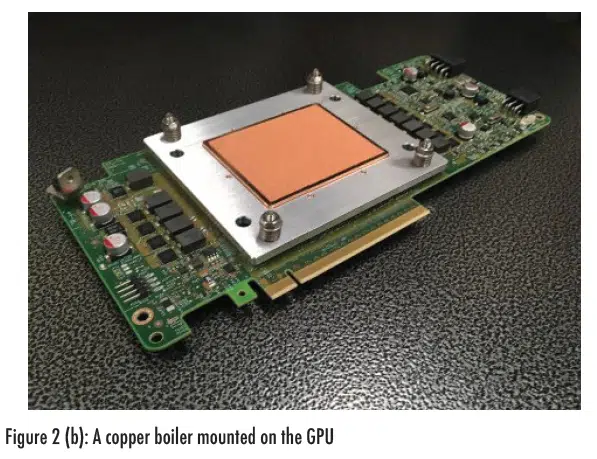

Additional experiments were conducted to isolate the displacement of various components of a server as shown in FIGURE 2 (a.). These were broadly grouped into 2 categories: The first, Vother, includes those components that exist purely for air cooling, such as fans, heat sinks, shrouds, ducts, etc. It also includes the steel used to make the chassis and to arrange the electronics within. The second, Velectronics, includes the rest of the server, those things that would be difficult to dispense with as they ARE the server. These include the PCBs, chips, capacitors, power supplies, etc. that comprise the server. A copper boiler made by Cooler Master (Taiwan) is mounted on an Intel Xeon Phi math coprocessor as shown in FIGURE 2 (b.). Such a boiler is the only additional volume required for 2-phase immersion. The remaining volume is air but also represents the volume that must be filled with liquid if the same server is to be immersion-cooled.
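The displacement bookkeeping described above can be sketched in Python. This is a minimal sketch assuming the standard Archimedes relation for a rectangular tank (displaced volume = tank cross-sectional area x rise in liquid level) and assuming two readings: one with the complete server submerged and one with only its electronics. The `Tank` class, the `partition` helper, the chassis envelope volume, and all numbers in the usage line are hypothetical illustrations, not the measurements behind FIGURE 3.

```python
from dataclasses import dataclass


@dataclass
class Tank:
    """Rectangular tank (hypothetical dimensions, in cm)."""
    length_cm: float
    width_cm: float

    @property
    def area_cm2(self) -> float:
        # Cross-sectional area of the tank as seen from above.
        return self.length_cm * self.width_cm

    def displaced_liters(self, level_rise_cm: float) -> float:
        # Archimedes: displaced volume = area x level rise (1000 cm^3 = 1 liter).
        return self.area_cm2 * level_rise_cm / 1000.0


def partition(tank: Tank, rise_server_cm: float, rise_electronics_cm: float,
              envelope_l: float) -> dict:
    """Split a chassis envelope into electronics, other hardware, and air (liters).

    rise_server_cm: level rise with the complete server submerged.
    rise_electronics_cm: level rise with only the electronics submerged.
    envelope_l: outer volume of the chassis, e.g. from its external dimensions.
    """
    v_solid = tank.displaced_liters(rise_server_cm)
    v_electronics = tank.displaced_liters(rise_electronics_cm)
    if not 0.0 <= v_electronics <= v_solid <= envelope_l:
        raise ValueError("inconsistent readings")
    return {
        "electronics": v_electronics,          # Velectronics
        "other": v_solid - v_electronics,      # Vother: fans, heat sinks, steel, ...
        "air": envelope_l - v_solid,           # volume the fluid must fill
    }


# Hypothetical readings, for illustration only.
volumes = partition(Tank(50.0, 100.0), rise_server_cm=1.5,
                    rise_electronics_cm=0.5, envelope_l=35.5)
print(volumes)
```

With these made-up readings, 7.5 liters of solid matter splits into 2.5 liters of electronics and 5.0 liters of other hardware, leaving 28.0 liters of air that a fluid would have to replace.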

Results and Discussion

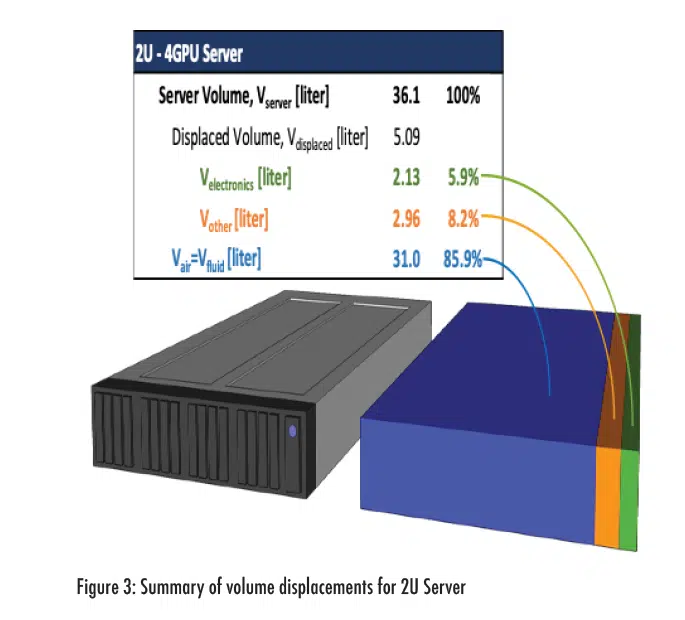

The results of these experiments are shown in FIGURE 3.

Though the server chassis is 14% solid matter, only 6% of its total volume is occupied by electronics (FIGURE 3). Calculations like these show how little sense it makes to immersion-cool servers that were designed to be cooled by air.
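The FIGURE 3 percentages translate directly into how much fluid an air-cooled server would need if immersed as-is. A minimal sketch, assuming the rounded 14% and 6% values quoted above and the electronics power density of about 0.61 kW/liter (the 1.3 kW server power divided by Velectronics = 2.13 liters) used elsewhere in this article; the function name `volume_budget` is hypothetical.

```python
def volume_budget(solid_frac: float, electronics_frac: float) -> dict:
    """Volumetric budget of a chassis from its solid and electronics fractions."""
    if not 0.0 < electronics_frac <= solid_frac < 1.0:
        raise ValueError("expected 0 < electronics <= solid < 1")
    return {
        "electronics": electronics_frac,
        "other": solid_frac - electronics_frac,   # air-cooling hardware and steel
        "air": 1.0 - solid_frac,                  # must be filled with liquid
    }


budget = volume_budget(solid_frac=0.14, electronics_frac=0.06)
fluid_per_liter_electronics = budget["air"] / budget["electronics"]
d_electronics_kw_per_l = 1.3 / 2.13               # ~0.61 kW/liter
fluid_per_kw = fluid_per_liter_electronics / d_electronics_kw_per_l

for part, frac in budget.items():
    print(f"{part:<12}{frac:>5.0%}")
print(f"fluid per liter of electronics: {fluid_per_liter_electronics:.1f} L")
print(f"fluid per kW if immersed as-is: {fluid_per_kw:.1f} L/kW")
```

Under these assumptions, immersing the server unchanged would take roughly 14 liters of fluid for every liter of electronics, or about 23.5 liters per kW.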

The 5mm Datacenter

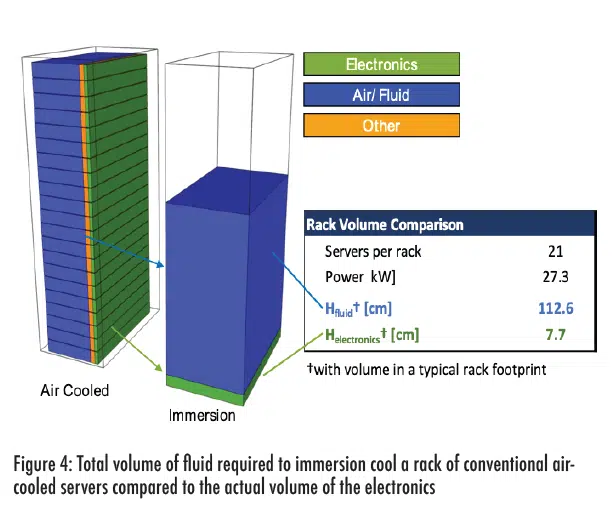

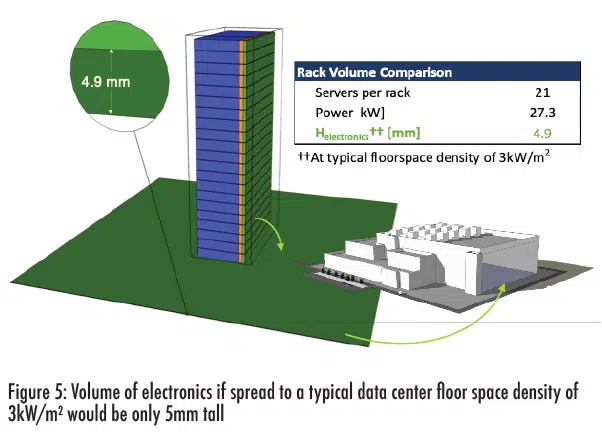

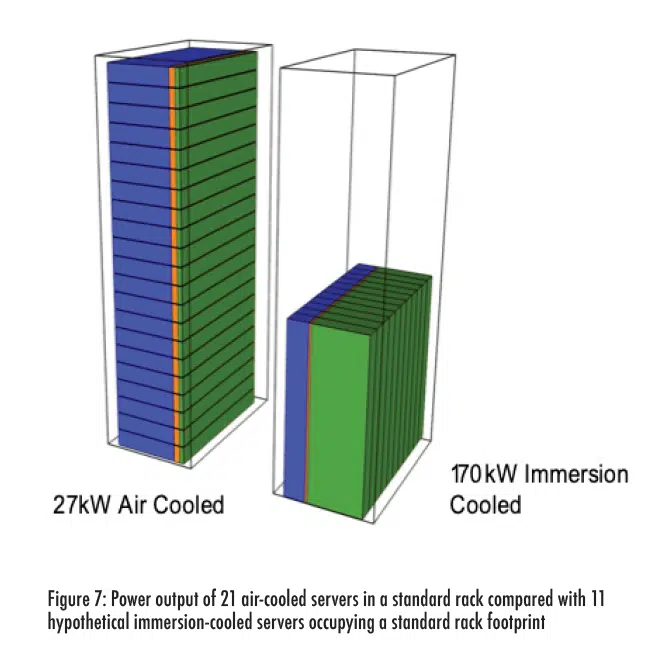

If 21 of these servers (27.3 kW) could be air-cooled in a conventional rack (23 inches wide x 39 inches deep x 2 meters high), the total volume of their electronics (21 servers x Velectronics, with Velectronics = 2.13 liters from FIGURE 3, or about 44,700 cm³) would occupy only the bottom 8cm (4%) of the rack's 2-meter height, and the fluid required to fill the servers would occupy another 112cm (FIGURE 4). If the same volume of electronics were instead spread at a typical data center floor space density of 3kW/m², it would form a layer only 5mm thick, a striking contrast to the multistory facilities that often house it (FIGURE 5).
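The rack-height and floor-layer figures can be checked with a short calculation. This sketch assumes the server envelope is Velectronics / 0.06 (about 35.5 liters, back-calculated from the rounded 6% figure), that the fill fluid replaces the 86% of the envelope that is air, and that 1 liter spread over 1 m² forms a 1 mm layer; the helper names `rack_stack_heights` and `floor_layer_mm` are hypothetical.

```python
INCH_TO_CM = 2.54


def rack_stack_heights(n_servers: int, v_electronics_l: float,
                       electronics_frac: float, solid_frac: float,
                       rack_width_in: float = 23.0,
                       rack_depth_in: float = 39.0) -> tuple:
    """Heights (cm) the electronics and the fill fluid would reach if
    pooled over the rack footprint."""
    footprint_cm2 = (rack_width_in * INCH_TO_CM) * (rack_depth_in * INCH_TO_CM)
    v_server_l = v_electronics_l / electronics_frac   # chassis envelope per server
    v_fluid_l = (1.0 - solid_frac) * v_server_l       # air volume to be filled
    h_electronics_cm = n_servers * v_electronics_l * 1000.0 / footprint_cm2
    h_fluid_cm = n_servers * v_fluid_l * 1000.0 / footprint_cm2
    return h_electronics_cm, h_fluid_cm


def floor_layer_mm(floor_density_kw_m2: float, d_electronics_kw_l: float) -> float:
    """Thickness of the electronics spread evenly at a given floor density.

    1 liter spread over 1 m^2 is 1 mm thick, so liters per m^2 equals mm.
    """
    return floor_density_kw_m2 / d_electronics_kw_l


h_e, h_f = rack_stack_heights(21, 2.13, electronics_frac=0.06, solid_frac=0.14)
layer = floor_layer_mm(3.0, 1.3 / 2.13)
print(f"electronics {h_e:.1f} cm, fluid {h_f:.1f} cm, floor layer {layer:.1f} mm")
```

This gives roughly 7.7 cm of electronics, about 111 cm of fluid, and a 4.9 mm layer, in line with the rounded 8cm, 112cm, and 5mm in the text; the small difference in fluid height presumably comes from using rounded percentages rather than the measured volumes.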

Extrapolating to Higher Density

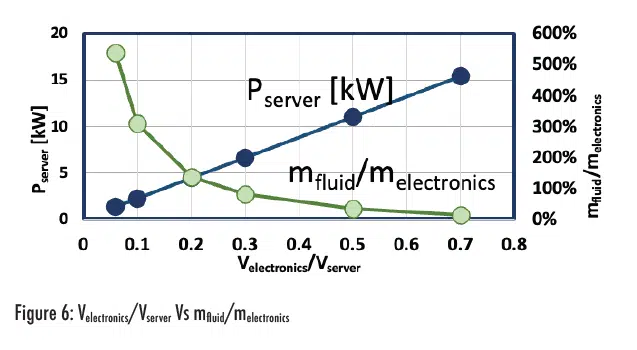

These data can be used to extrapolate to higher power density. For this analysis, the volume of the steel chassis (found to be half of Vother) is assumed to remain constant (TABLE 1). The mass of electronics per displaced volume, which averages 4.5 kg/liter, and the electronics power density, Delectronics = 0.61 kW/liter (the 1.3 kW server power divided by Velectronics = 2.13 liters from FIGURE 3), are also held constant. A fluorochemical fluid density of 1.6 kg/liter is assumed. The power density, expressed as Velectronics/Vserver, is then increased while Vserver is held constant.
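The extrapolation behind TABLE 1 can be approximated with a small model. This is a sketch under stated assumptions, not the authors' calculation: Vserver is back-calculated as 2.13 / 0.06, or about 35.5 liters; the constant steel volume is taken as half of the 8% Vother; the remaining air-cooling hardware (fans, heat sinks, shrouds) is assumed to be removed; the boiler volume is neglected; and everything else inside Vserver is treated as fluid. The `Row` type and `extrapolate` function are hypothetical names.

```python
from dataclasses import dataclass

# Values quoted in the text.
V_ELECTRONICS_BASE_L = 2.13       # Velectronics of the measured 2U server
SERVER_POWER_KW = 1.3
RHO_ELECTRONICS_KG_L = 4.5        # mass of electronics per displaced liter
RHO_FLUID_KG_L = 1.6              # assumed fluorochemical fluid density
# Derived values (assumption: rounded 6% and 14% fractions from FIGURE 3).
D_ELECTRONICS_KW_L = SERVER_POWER_KW / V_ELECTRONICS_BASE_L   # ~0.61 kW/liter
V_SERVER_L = V_ELECTRONICS_BASE_L / 0.06                      # ~35.5 liters, held constant
V_STEEL_L = 0.5 * (0.14 - 0.06) * V_SERVER_L                  # half of Vother, held constant


@dataclass
class Row:
    fraction: float                  # Velectronics / Vserver
    power_kw: float
    fluid_l_per_kw: float
    fluid_to_electronics_mass: float


def extrapolate(fraction: float) -> Row:
    if not 0.0 < fraction < 1.0:
        raise ValueError("fraction must be between 0 and 1")
    v_electronics = fraction * V_SERVER_L
    # Everything that is not electronics or steel is assumed to be fluid.
    v_fluid = V_SERVER_L - v_electronics - V_STEEL_L
    if v_fluid < 0.0:
        raise ValueError("electronics and steel exceed the server volume")
    power_kw = v_electronics * D_ELECTRONICS_KW_L
    mass_ratio = (v_fluid * RHO_FLUID_KG_L) / (v_electronics * RHO_ELECTRONICS_KG_L)
    return Row(fraction, power_kw, v_fluid / power_kw, mass_ratio)


for f in (0.06, 0.2, 0.4, 0.7):
    r = extrapolate(f)
    print(f"{r.fraction:4.0%}  {r.power_kw:5.1f} kW  {r.fluid_l_per_kw:5.2f} L/kW  "
          f"fluid/electronics mass {r.fluid_to_electronics_mass:.2f}")
```

Under these assumptions the model gives about 24.6 L/kW at 6%, and at 70% about 15.2 kW per server, 0.61 L/kW, and a fluid-to-electronics mass ratio near 0.13. These land close to, but not exactly on, the 25 L/kW, 15kW, and roughly 14% quoted in this article, presumably because TABLE 1 uses measured rather than rounded volumes.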

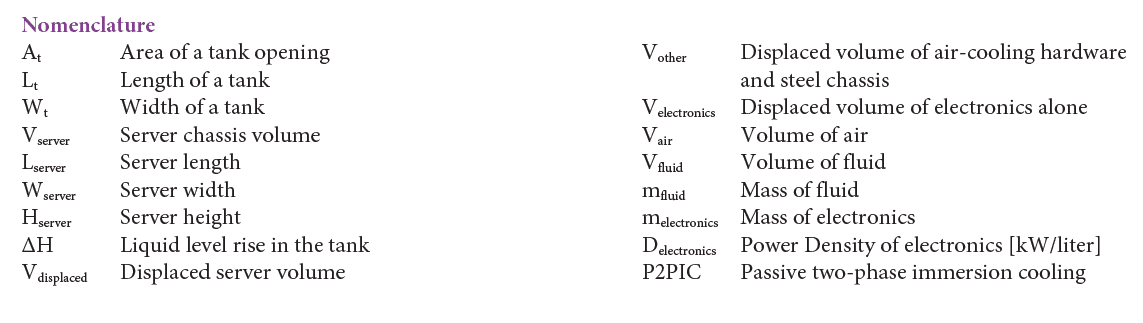

This analysis shows how the mass of the fluid becomes less significant relative to the mass of the electronics as power density increases (TABLE 1). At Velectronics/Vserver = 70%, the fluid requirement drops from 25 to <1 liter/kW (TABLE 1 and FIGURE 6), and 11 of the resulting 15kW servers can be placed in a 170kW tank with 6 times the floor space density of the air-cooled rack (FIGURE 7).
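The sixfold floor-density figure follows from comparing the power housed on the same footprint. A minimal sketch, assuming (as the conclusion states) that the tank fits in the footprint of a traditional 23 x 39 inch rack and taking the nominal 15kW per server; aisle and service clearances are ignored, and the function name `footprint_density` is hypothetical.

```python
INCH_TO_M = 0.0254
FOOTPRINT_M2 = (23 * INCH_TO_M) * (39 * INCH_TO_M)   # ~0.58 m^2 rack footprint


def footprint_density(total_kw: float, footprint_m2: float = FOOTPRINT_M2) -> float:
    """Power per unit of footprint (kW/m^2), ignoring aisles and clearances."""
    return total_kw / footprint_m2


air_rack_kw = 21 * 1.3            # 21 air-cooled 1.3 kW servers
tank_kw = 11 * 15.0               # 11 immersed 15 kW servers in a 170 kW tank
gain = footprint_density(tank_kw) / footprint_density(air_rack_kw)

print(f"air-cooled rack: {footprint_density(air_rack_kw):.0f} kW/m^2")
print(f"immersion tank:  {footprint_density(tank_kw):.0f} kW/m^2")
print(f"floor space density gain: {gain:.1f}x")
```

The ratio does not depend on the footprint value as long as the rack and the tank occupy the same area; the absolute kW/m² values cover the footprint alone and would be lower at room scale.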

Reality Check

Will P2PIC even function at this density? One 40MW two-phase immersion facility [6] uses less than 2 liters of fluid per kW, with 12kW 1U modules housed in 250kW tanks cooled by 48-53°C water. These same tanks are sized for up to 500kW to accommodate next-generation hardware. One can also look to the refrigeration industry, in which low-pressure chillers of 2,000+kW [7] are deployed with evaporator footprints no larger than these tanks. Lastly, technology demonstrations with simulated computer hardware showed that fluid power densities (Dpower,fluid) below 0.1 liter/kW are possible [8].

Additional Considerations

There are additional savings to be gained at the server level:

- The cost of engineering an air-cooled solution for a modern server can be quite high and involves computational fluid dynamics simulations, wind tunnel testing, and validation.

- A myriad of heat sinks, interface materials, isolation pads, conformal coatings, fans, and baffles can be eliminated from servers and power supplies.

There will, of course, be costs incurred in immersion cooling, primarily associated with more rigorous pre-cleaning of hardware, cleaner cable insulation, and the capital cost of nontraditional test and burn-in equipment for hardware that can no longer be cooled by air.

Savings can also be realized at the facility scale; most of the following have been demonstrated in the aforementioned 40MW facility or in detailed projections:

- Elimination of raised floors, fans, air filters, baffles, plena, and adiabatic or refrigerated cooling equipment, coupled with reduced telecom requirements, can reduce facility costs by up to 43% [9].

- Eliminating fire protection equipment would reduce facility costs but would require modification of relevant industry standards. The merits of submerging IT equipment in a clean-agent fire suppressant are already recognized [10].

- Ability to re-use tanks and mechanical infrastructure for future generations of hardware.

Simplicity

- Ability to design hardware at densities that reduce the fluid requirement to <0.1 liter/kW [8], without regard for how it will be cooled. No airflow simulations, thermal solution validation, etc., are needed.

- Passive heat transfer process. No rack pumps, redundant pumps, controls, etc.

- Elimination of node-level cooling hardware. No cold plates, interfaces, manifolds, quick disconnects, hoses, etc., are required.

- Reusable, environmentally sustainable working fluids such as hydrofluoroethers (HFEs), fluoroketones, and hydrofluoroolefins (HFOs), which have no ozone depletion potential (ODP) and very low global warming potential (GWP) [14].

Challenges on the Path to x86

- As with any burgeoning technology, challenges to implementing two-phase liquid immersion cooling exist, including concerns about fluid loss, fluid selection, material compatibility, safety, and environmental impact. While outside the scope of this work, these must be evaluated for the technology to succeed in a given application.

- The challenges in advancing x86 technology include securing a reliable supply chain for compatible materials, ensuring effective maintenance and fluid loss mitigation, developing an ecosystem that supports high power density, statistically meaningful demonstrations in the realm of x86, and commercially available turn-key systems.

- Given the importance of fluorochemical fluids in two-phase liquid immersion cooling, readers may understandably have concerns about their future availability, especially in light of 3M's announced exit from PFAS manufacturing. However, the current market shows no significant issues with the availability of these fluids. Although this article does not delve into supply chain specifics, key suppliers include Unistar Chemicals, Inventec Performance Chemicals, and Chemours, which provide both a reliable supply and alternative options. Testing the viability of these alternatives will, of course, be crucial.

Conclusion

Though composed of energy-dense GPU hardware, the chassis in this study is 86% air by volume. The low power density of air-cooled server hardware severely limits the economic feasibility of immersion cooling. A modern 1.3kW, 2U GPU server was only 6% electronics on a volumetric basis. The electronics comprising 21 of these servers (27kW, 42U) would fill only the bottom 8cm of a rack footprint. The fluid required to fill the servers would occupy an additional 112cm, and the fluid mass would be about five times that of the electronics.

Higher-density hardware, already realized in Bitcoin mining, unlocks the value proposition of immersion [11,12,13]. If a server could instead be 70% electronics, it would dissipate 15kW, allowing 170kW to be housed in the footprint of a traditional rack. The fluid required to submerge this hardware would be only about 14% of the electronics' mass.

In a nutshell, a volume displacement analysis reveals just how low the power density of a typical facility is. At 3kW/m², a typical floor space density for an air-cooled data center, the electronics would fill only the bottom 5mm of a building that is often several stories tall. P2PIC can increase floor space density sixfold or more while simplifying server design and reducing facility capital and operating costs. Increased density also brings increased interconnect bandwidth and the potential to reduce e-waste.

Bios

Jimil M. Shah, PhD

Jimil M. Shah, PhD, is an Immersion Cooling Staff Engineer at a Stealth Startup, spearheading the development of cutting-edge two-phase immersion cooling systems for hyperscale data centers. His work focuses on enhancing energy efficiency and reducing operational costs, particularly in thermal and reliability aspects. Previously, Dr. Shah served as Senior Director of Thermal Sciences at TMGcore and as an Application Development Engineer for Server Liquid Cooling at 3M Company. His research in advanced cooling solutions for data centers includes direct-to-chip and immersion cooling with dielectric fluids. Dr. Shah received his doctorate in Mechanical Engineering from the University of Texas at Arlington and has numerous patents and publications in the field.

Phillip E. Tuma

Phillip Tuma worked for 27 years at 3M Company developing applications for fluorinated heat transfer fluids in various industries including pharmaceuticals, military/aerospace, power electronics, and semiconductor processing. For the past 15 years he has studied the mechanical systems and the physical, organic, and electrochemical processes that enable the use of passive 2-phase immersion for cooling high-power density compute systems. He holds a BA from the University of St. Thomas, a BSME from the University of Minnesota, and a MSME from Arizona State University.

REFERENCES

[1] West, B., 29 August 2018, https://www.datacenters.com/news/understanding-the-interplay-between-data-center-power-consumption-data-center-en

[2] Cho, J., Park, B., and Jeong, Y., "Thermal Performance Evaluation of a Data Center Cooling System under Fault Conditions," Energies, 12(15):2996, 2019, https://doi.org/10.3390/en12152996

[3] Carroll, A., 13 March 2019, https://lifelinedatacenters.com/data-center/data-center-power-density/

[4] Stansberry, M., "Uptime Institute Data Center Industry Survey 2015," Uptime Institute, 2015.

[5] Brown, K., Torell, W., and Avelar, V., "Choosing the Optimal Data Center Power Density," White Paper 156, Schneider Electric, 2014, http://www.apc.com/salestools/VAVR-8B3VJQ/VAVR-8B3VJQ_R0_EN.pdf

[6] Horekens, A., 12 November 2018, https://www.allied-control.com/publications/Allied-Control-to-Exhibit-Immersion-Cooling-Solutions-with-Orange-Silicon-Valley-at-SC18-Conference.pdf

[7] Trane, "Trane® CenTraVac® Chillers," accessed 10 April 2019, https://www.trane.com/commercial/north-america/us/en/products-systems/chillers/water-cooled-chillers/centrifugal-liquid-cooled-chillers.html

[8] Tuma, P. E., "The Merits of Open Bath Immersion Cooling of Datacom Equipment," Proc. 26th IEEE Semi-Therm Symposium, Santa Clara, CA, USA, pp. 123-131, Feb. 21-25, 2010.