Steadily increasing demand for data storage from processing applications and websites has driven up data center power consumption for more than a decade [1]. For example, worldwide data center power consumption in 2005 was twice that in 2000. By 2010 it had increased by a further 56% to constitute 1.3% of global power consumption [2]. Going forward, the power consumption of data centers is expected to grow by 15 to 20% per year [3].
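The growth figures quoted above can be put on a common footing with a short compound-growth calculation. The sketch below is illustrative only: it indexes consumption to a hypothetical baseline of 1.0 in the year 2000, and the function names (`implied_annual_rate`, `project`, `doubling_time`) are assumptions introduced here, not taken from the cited reports.

```python
import math


def implied_annual_rate(growth_factor: float, years: int) -> float:
    """Constant yearly rate that compounds to growth_factor over the given years."""
    return growth_factor ** (1.0 / years) - 1.0


def project(value: float, annual_rate: float, years: int) -> float:
    """Compound a value forward at a fixed yearly growth rate."""
    return value * (1.0 + annual_rate) ** years


def doubling_time(annual_rate: float) -> float:
    """Years needed for a quantity to double at a fixed yearly growth rate."""
    return math.log(2.0) / math.log(1.0 + annual_rate)


# Consumption indexed to the year 2000 (assumed baseline of 1.0).
index_2000 = 1.0
index_2005 = index_2000 * 2.0   # 2005 was twice the 2000 level
index_2010 = index_2005 * 1.56  # a further 56% increase by 2010

if __name__ == "__main__":
    print(f"2010 index relative to 2000: {index_2010:.2f}")
    print(f"Implied yearly growth 2000-2005: {implied_annual_rate(2.0, 5):.1%}")
    print(f"Implied yearly growth 2005-2010: {implied_annual_rate(1.56, 5):.1%}")
    for rate in (0.15, 0.20):
        print(f"Doubling time at {rate:.0%} per year: {doubling_time(rate):.1f} years")
```

Under these assumptions, the projected 15 to 20% yearly growth corresponds to consumption doubling roughly every four to five years.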

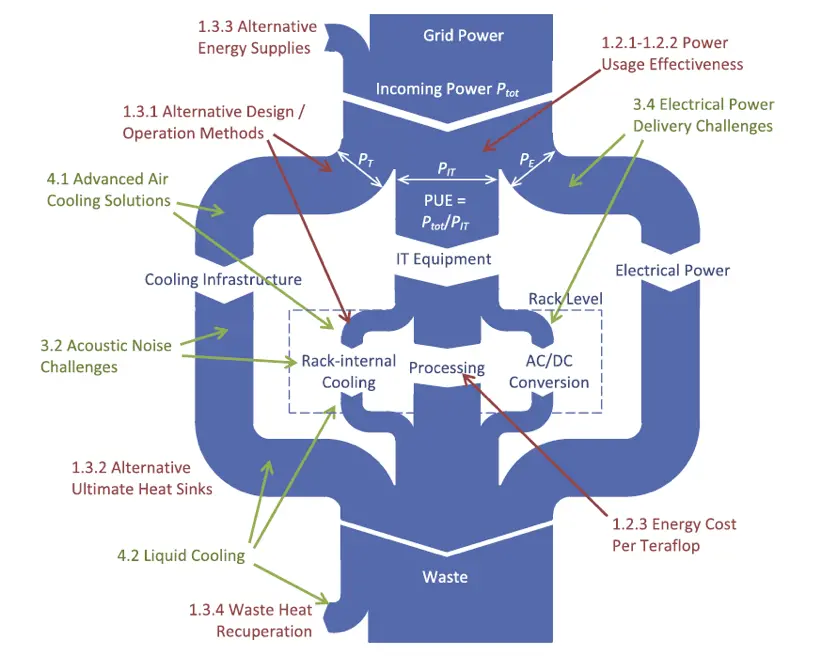

In a breakdown of data center energy consumption, the IT equipment in the racks accounts for only about half of the power supplied to the whole data center [2, 4]. The remaining power is considered overhead, spent on cooling, electrical power delivery losses, lighting, and other facility loads. The electrical delivery losses include voltage conversion losses, uninterruptible power supply (UPS) losses, and power distribution losses within the data center [5]. Among these overheads, the cooling system of a typical data center consumes the largest portion, reported by many publications to be 35% of the total power consumption of a data center [2, 5, 6]. Figure 1 shows the power distribution for the different parts of a typical data center.

Figure 1: Distribution of power into three main components of a typical data center (computing, cooling, and power losses) [11]
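The breakdown above can be turned into a small worked calculation. The sketch below assumes an IT share of exactly 50% and a cooling share of 35% (the approximate figures quoted in the text), attributes the remainder to the other overheads, and computes the ratio of total facility power to IT power, commonly called power usage effectiveness (PUE). The metric and the function names are introduced here for illustration and are not part of the cited breakdown.

```python
def power_breakdown(total_kw: float,
                    it_share: float = 0.50,
                    cooling_share: float = 0.35) -> dict:
    """Split total facility power into IT, cooling, and other overhead (kW).

    The default shares are the approximate values quoted in the text;
    whatever is left over is treated as delivery losses, lighting, etc.
    """
    if not 0.0 < it_share <= 1.0 or cooling_share < 0.0:
        raise ValueError("shares must be non-negative and the IT share positive")
    if it_share + cooling_share > 1.0:
        raise ValueError("IT and cooling shares cannot exceed 100% combined")
    other_share = 1.0 - it_share - cooling_share
    return {
        "it": total_kw * it_share,
        "cooling": total_kw * cooling_share,
        "other_overhead": total_kw * other_share,
    }


def pue(breakdown: dict) -> float:
    """Total facility power divided by IT power."""
    return sum(breakdown.values()) / breakdown["it"]


if __name__ == "__main__":
    example = power_breakdown(1000.0)  # hypothetical 1 MW facility
    for part, kw in example.items():
        print(f"{part}: {kw:.0f} kW")
    print(f"PUE: {pue(example):.2f}")
```

With IT equipment drawing about half of the supplied power, every watt of computing carries roughly one additional watt of overhead, most of it spent on cooling.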

The demand for improved energy efficiency in data centers has made it necessary to optimize existing technologies and to find innovative new methods on both the IT side and the supporting infrastructure. Much of this effort has focused on the cooling infrastructure, which represents the largest service requirement.

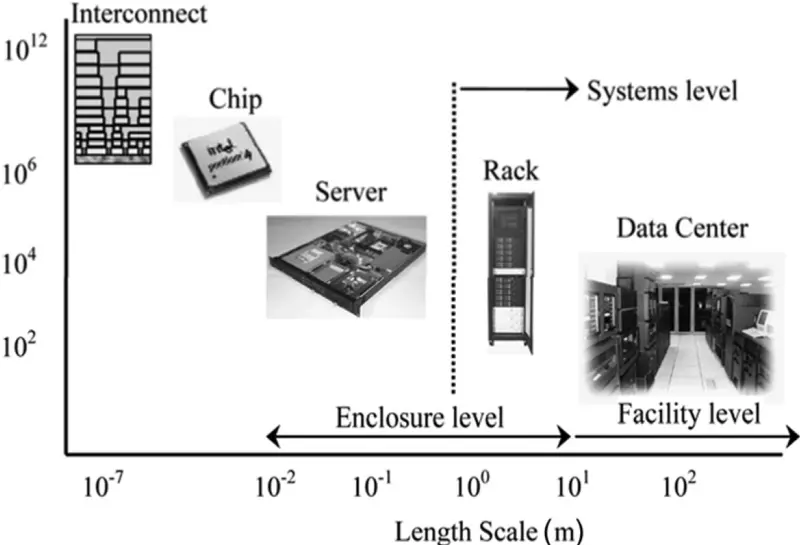

The operation of the IT equipment converts almost all of the electrical power into heat (data flows carry only a small amount of energy). This heat is generally transported through multiscale subsystems from the heat source to the environment, as shown in Figure 2 [7]. It is generated mainly at the chip level, at the nanometer scale of the transistors and interconnects, and then passes through the server level (meter scale), the rack level (meters), and the data center level (hundreds of meters) before being transferred to the environment at the facility level [8]. This chain of multiscale subsystems makes the thermal management of data centers challenging for designers and engineers, because cooling is initiated at the facility level and must then be carried back to remove the heat at the chip level.

Figure 2: Thermal management of data centers through multiscale systems [9]

Microsystems packaging has focused mainly on the chip, server, and rack levels, while the data center and facility levels have been the focus of heating, ventilation, and air conditioning (HVAC) engineers [7]. A holistic view of the cooling system, from the chip to the environment, is therefore necessary to minimize the power consumed by cooling, especially given the sharp increase in IT equipment density.

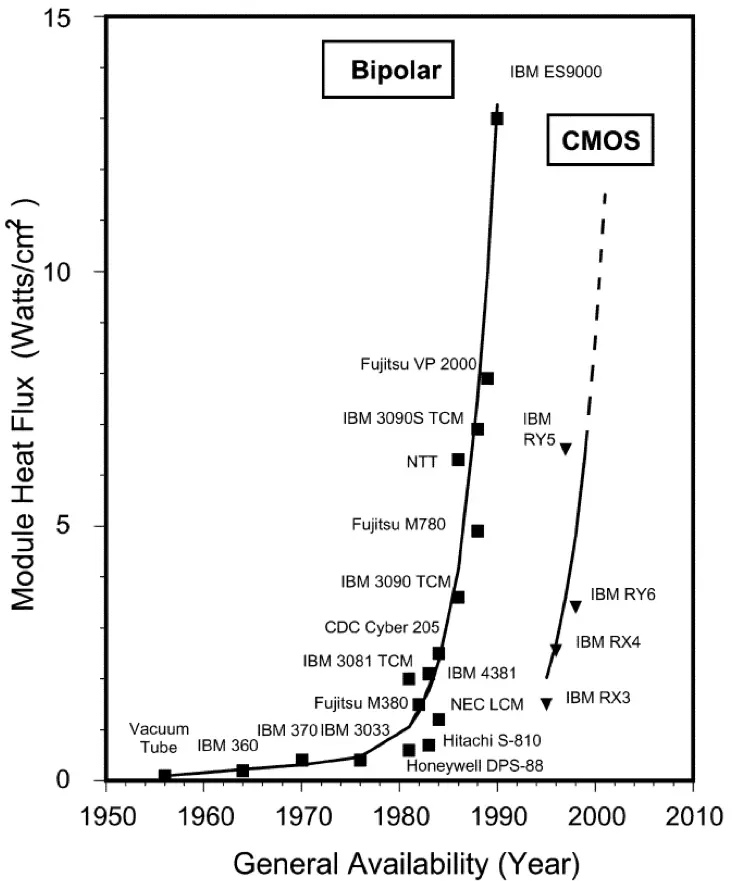

Datacom systems use different approaches to remove heat, the most common being air cooling. Air can be delivered in numerous ways, such as underfloor plenums, overhead ducts, containment, and side-fed arrangements [10]. However, the heat generated by general-purpose processors is expected to increase to 190 W by 2020 [11]. Moreover, the heat generated by high-performance computing (HPC) processors is already high, having been recorded at 300 W in 2012 [11]. Air cooling has reached its heat transfer limits, as the effective convection coefficient of air ranges from 0.04 to 0.07 with a difference of 15 between the inlet and outlet temperatures across the rack [12]. It is therefore necessary to reintroduce liquid cooling as an efficient heat transfer medium for the IT equipment, since it was already used in electronics cooling before the introduction of the complementary metal-oxide-semiconductor (CMOS) transistor in the 1990s, as shown in Figure 3 [13].

The increasing heat dissipation within data centers and its projected growth have prompted a reconsideration of liquid cooling technologies. Concerns raised about liquid cooling compared with traditional air cooling include leakage and more complicated infrastructure requirements, which may increase costs [14]. The design principle of liquid cooling systems is to bring the liquid medium closer to the IT equipment. This can be achieved by different methods, such as a rear-door heat exchanger, by bringing the liquid to the chip itself as in cold-plate direct liquid cooling, or even by immersing all the electronics in a dielectric liquid [10].

Figure 3: History of the module heat flux in Bipolar and CMOS transistors [13]

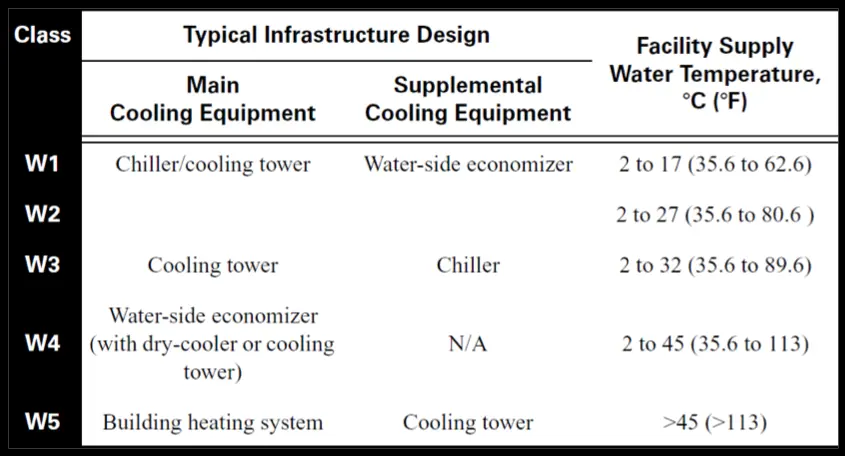

The facility environment tiers and the combined boundaries of liquid cooling in data centers define the operating limits of the IT equipment. ASHRAE [15] describes the thermal guidelines for liquid cooling and categorizes them into five classes depending on the type of facility cooling equipment. These classes range from W1 to W5, and each class has its own temperature boundaries, as shown in Table 1. Classes W1 and W2 use a chiller and a cooling tower (with or without an economizer for energy savings, depending on the geographical area). Class W3 typically uses the cooling tower most of the time and uses the chiller only under certain conditions. Class W4 uses only a dry cooler or cooling tower to improve the energy performance of the data center. Finally, class W5 is based entirely on heat reuse, where the heat dissipated by the data center is used to heat buildings or other facilities. The operating environmental class should be chosen based on non-failure conditions with full operation of the IT equipment under the specific environmental conditions.

Table 1: ASHRAE Liquid cooled thermal guidelines [16]
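The class descriptions above can be captured in a small lookup structure. The sketch below encodes only what the text states about the facility equipment of each class; it deliberately contains no temperature limits, since those belong to Table 1. The type `LiquidCoolingClass`, the `chiller_use` labels, and the helper `classes_by_chiller_use` are hypothetical names introduced for illustration, not ASHRAE terminology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LiquidCoolingClass:
    name: str                # ASHRAE liquid cooling class, W1 to W5
    facility_equipment: str  # equipment as described in the text
    chiller_use: str         # "always", "conditional", "never", or "not stated"


# Encoding of the prose description; the chiller_use labels are an
# interpretation made for this sketch, not values from the guideline.
ASHRAE_CLASSES = [
    LiquidCoolingClass("W1", "chiller and cooling tower, optional economizer", "always"),
    LiquidCoolingClass("W2", "chiller and cooling tower, optional economizer", "always"),
    LiquidCoolingClass("W3", "cooling tower most of the time, chiller when needed", "conditional"),
    LiquidCoolingClass("W4", "dry cooler or cooling tower only", "never"),
    LiquidCoolingClass("W5", "heat reuse for buildings or other facilities", "not stated"),
]


def classes_by_chiller_use(chiller_use: str) -> list:
    """Return the names of the classes with the given chiller usage label."""
    return [c.name for c in ASHRAE_CLASSES if c.chiller_use == chiller_use]


if __name__ == "__main__":
    for cooling_class in ASHRAE_CLASSES:
        print(f"{cooling_class.name}: {cooling_class.facility_equipment}")
    print("Classes without a chiller:", classes_by_chiller_use("never"))
```

A structure like this makes it easy to filter candidate classes by available facility equipment before checking the temperature boundaries in Table 1.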

References

- Brown, R.E., et al., Report to congress on server and data center energy efficiency: Public law 109-431. 2007, Ernest Orlando Lawrence Berkeley National Laboratory, Berkeley, CA (US).

- Koomey, J., Growth in data center electricity use 2005 to 2010. A report by Analytical Press, completed at the request of The New York Times, 2011.

- Ebrahimi, K., G.F. Jones, and A.S. Fleischer, A review of data center cooling technology, operating conditions and the corresponding low-grade waste heat recovery opportunities. Renewable and Sustainable Energy Reviews, 2014. 31: p. 622-638.

- Koomey, J.G., Worldwide electricity used in data centers. Environmental research letters, 2008. 3(3): p. 034008.

- Garimella, S.V., et al., Technological drivers in data centers and telecom systems: Multiscale thermal, electrical, and energy management. Applied energy, 2013. 107: p. 66-80.

- VanGeet, O., Best practices guide for energy-efficient data center design. 2010, EERE Publication and Product Library.

- Joshi, Y. and P. Kumar, Energy efficient thermal management of data centers. 2012: Springer Science & Business Media.

- Alkharabsheh, S., et al., A Brief Overview of Recent Developments in Thermal Management in Data Centers. Journal of Electronic Packaging, 2015. 137(4): p. 040801.

- Rambo, J.D., Reduced-order modeling of multiscale turbulent convection: application to data center thermal management. 2006, Georgia Institute of Technology.

- Khalaj, A.H. and S.K. Halgamuge, A Review on efficient thermal management of air-and liquid-cooled data centers: From chip to the cooling system. Applied Energy, 2017. 205: p. 1165-1188.

- ASHRAE, T., Datacom equipment power trends and cooling applications. 2012, American Society of Heating, Refrigerating and Air-Conditioning Engineers, Atlanta, GA.

- Kheirabadi, A.C. and D. Groulx, Cooling of server electronics: A design review of existing technology. Applied Thermal Engineering, 2016.

- Chu, R.C., et al., Review of cooling technologies for computer products. IEEE Transactions on Device and materials Reliability, 2004. 4(4): p. 568-585.

- Ohadi, M., et al. A comparison analysis of air, liquid, and two-phase cooling of data centers. in Semiconductor Thermal Measurement and Management Symposium (SEMI-THERM), 2012 28th Annual IEEE. 2012. IEEE.

- Steinbrecher, R.A., Data Center Environments ASHRAE’s Evolving Thermal Guidelines. ASHRAE Journal, 2011. 53(12): p. 42.

- ASHRAE, Thermal Guidelines for Data Processing Environments. Third Edition. 2012. 152.