Introduction

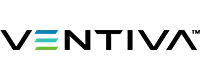

Let’s begin with two images that may be familiar but that illustrate the current market trend and why liquid cooling is on the rise. The two charts in Figure 1 show actual CPU and GPU power consumption over the years, and Figure 2 shows the overall trend in chip power. As of 2023, the latest GPU chips draw about 700 watts. Even today, air cooling is reaching the limit of its ability to cool these high-power chips efficiently. For example, a common architecture for AI and machine learning GPU servers has up to eight GPUs per server, and server heights have steadily grown over the years to provide space for more fans, bigger fans, and bigger heat sinks.

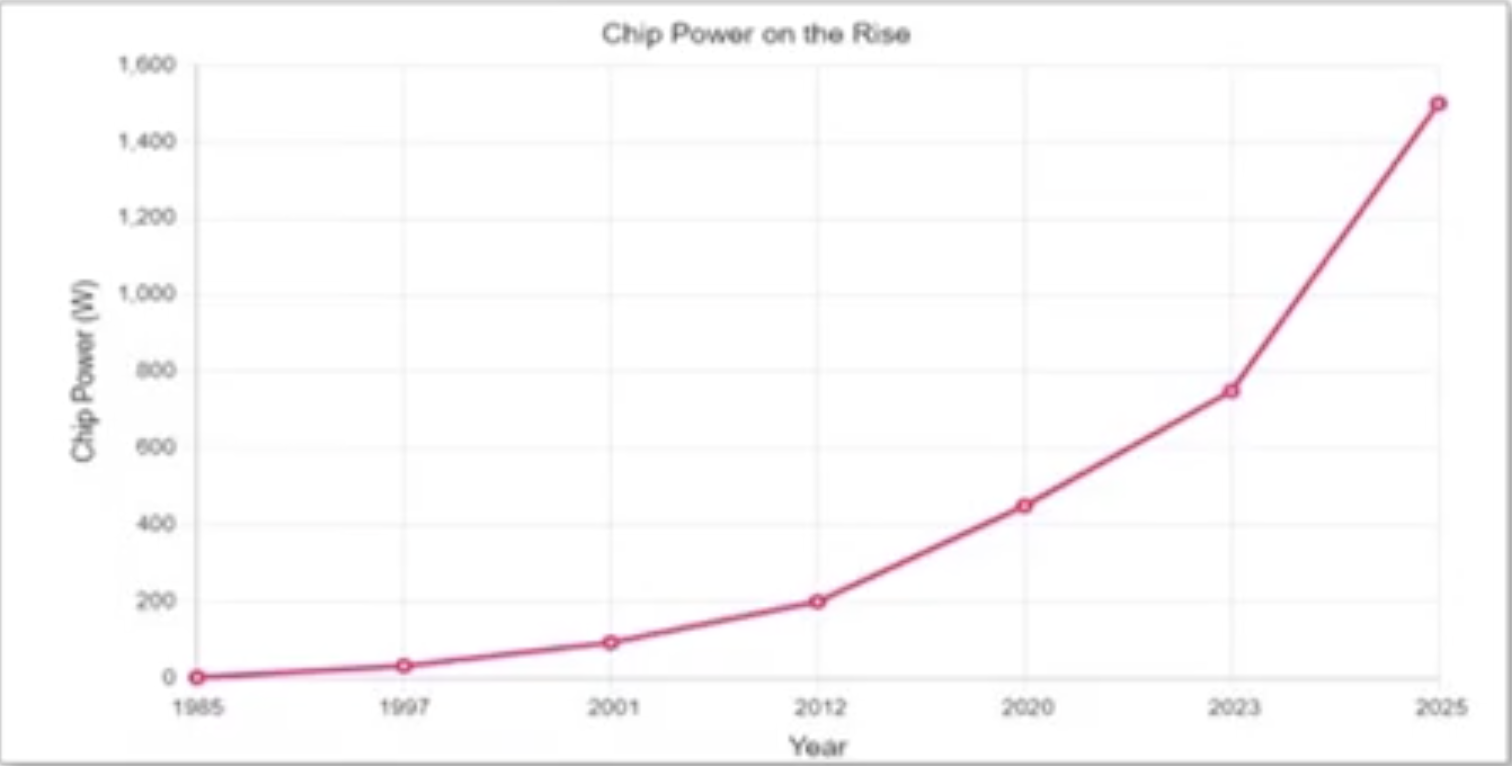

At the same time, we’ve seen companies starting to investigate liquid cooling. In some cases, they’re able to reduce the server size back down to one rack unit, dramatically increasing efficiency and reducing costs. As demand grows for generative AI applications and infrastructure, it will continue to drive more powerful chips and denser solutions.

Next, we’ll look at what this increase in chip power means for data centers. The IEA estimated that data centers globally consumed about 200 terawatt-hours of electricity in 2022, and that is expected to double by 2030, as you can see in Figure 3 below. AI workloads will also push data center rack power capacities up to about 50 to 100 kilowatts per rack, up from the less than about 10 kilowatts per rack that we typically see today. Data centers measure their efficiency with a metric called PUE, or power usage effectiveness. Specifically, PUE is the ratio of total facility energy to the energy used by the computing equipment, so it shows how much additional energy goes to the peripheral support equipment and cooling equipment.

In 2022, the global average data center PUE was 1.55, which translates to about 550 watts of support equipment power for every 1,000 watts of IT equipment power. Larger companies have brought this down over the years by investing in newer, purpose-built data centers, which gives them both a commercial and a reputational advantage over their competitors. One of the more impactful and practical ways to handle the increase in power demand per rack and to improve data center efficiency is to move toward liquid cooling, which outperforms air cooling in multiple categories, including heat rejection capacity, energy efficiency, space utilization, acoustics, flexibility, and long-term climate impact.
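The PUE arithmetic above can be checked with a short calculation. This is a minimal sketch that assumes the standard definition of PUE as total facility power divided by IT equipment power; the function name `support_power_watts` is hypothetical and chosen only for illustration.

```python
def support_power_watts(pue: float, it_power_watts: float) -> float:
    """Non-IT power (cooling and other support equipment) implied by a PUE.

    Assumes PUE = total facility power / IT equipment power, so the
    overhead is (PUE - 1) times the IT power.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return (pue - 1.0) * it_power_watts


# The 2022 global average quoted in the text: PUE 1.55 on 1,000 W of IT load.
overhead = support_power_watts(1.55, 1000.0)
print(f"Support power: {overhead:.0f} W per 1000 W of IT load")
```

By the same formula, a hypothetical facility at a PUE of 1.2 would spend 200 watts of support power per 1,000 watts of IT load.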

How can Boyd help?

Boyd began in industrial fabrication in California in 1928, and over the years we’ve evolved into a trusted global innovator of high-performance integrated solutions for the most advanced markets. Boyd has been providing liquid cooling solutions to the medical, semiconductor, and industrial markets for decades. Certain segments of the data center community, such as HPC, and certain companies adopted liquid cooling years ago, and now the larger industry is getting ready for that transition as well. Boyd has the experience, the capabilities, and the global support structure to help bring data centers into the new age.

Boyd understands that data center operators have a natural fear of water, and for years their biggest concern has been leaks. We do our best to educate the broader market with our track record and our processes. Our data here covers what we consider the most challenging part of the liquid cooling solution: the cold plates and the loops. We’re proud to report that over more than 16 years of deployment, we have had no field leaks. That is credited to our engineering, manufacturing, and quality teams, which oversee the custom initial design, the stringent manufacturing processes, and 100 percent testing procedures.

Another important aspect of a complete and reliable liquid system is understanding the interactions between the different components and the chemical compatibility of their wetted materials. Through Boyd’s experience in various industries and with different materials, we have the expertise to select compatible materials, whether we’re designing a completely new system or one that’s integrated into an existing customer infrastructure. This helps avoid long-term issues such as the galvanic corrosion that occurs when copper and aluminum are mixed, which eventually leads to clogging, reduced performance, and leaks in the liquid cooling system.

Boyd thermal systems offer solutions in three major categories. First, we have thermal control units (TCUs), which are used to maintain very specific temperatures for testing and burn-in purposes. We also have sub-ambient chillers that use refrigerants to bring down and maintain process fluid temperatures as low as -80 °C. Finally, we have coolant distribution units (CDUs), which are mainly designed for mass heat rejection. Because we work with different industries that have different form factors and capacity needs, our units are also designed to be flexible to our customers’ needs. For each category, we have different baseline models that fit within a rack, on a benchtop, or within a facility.

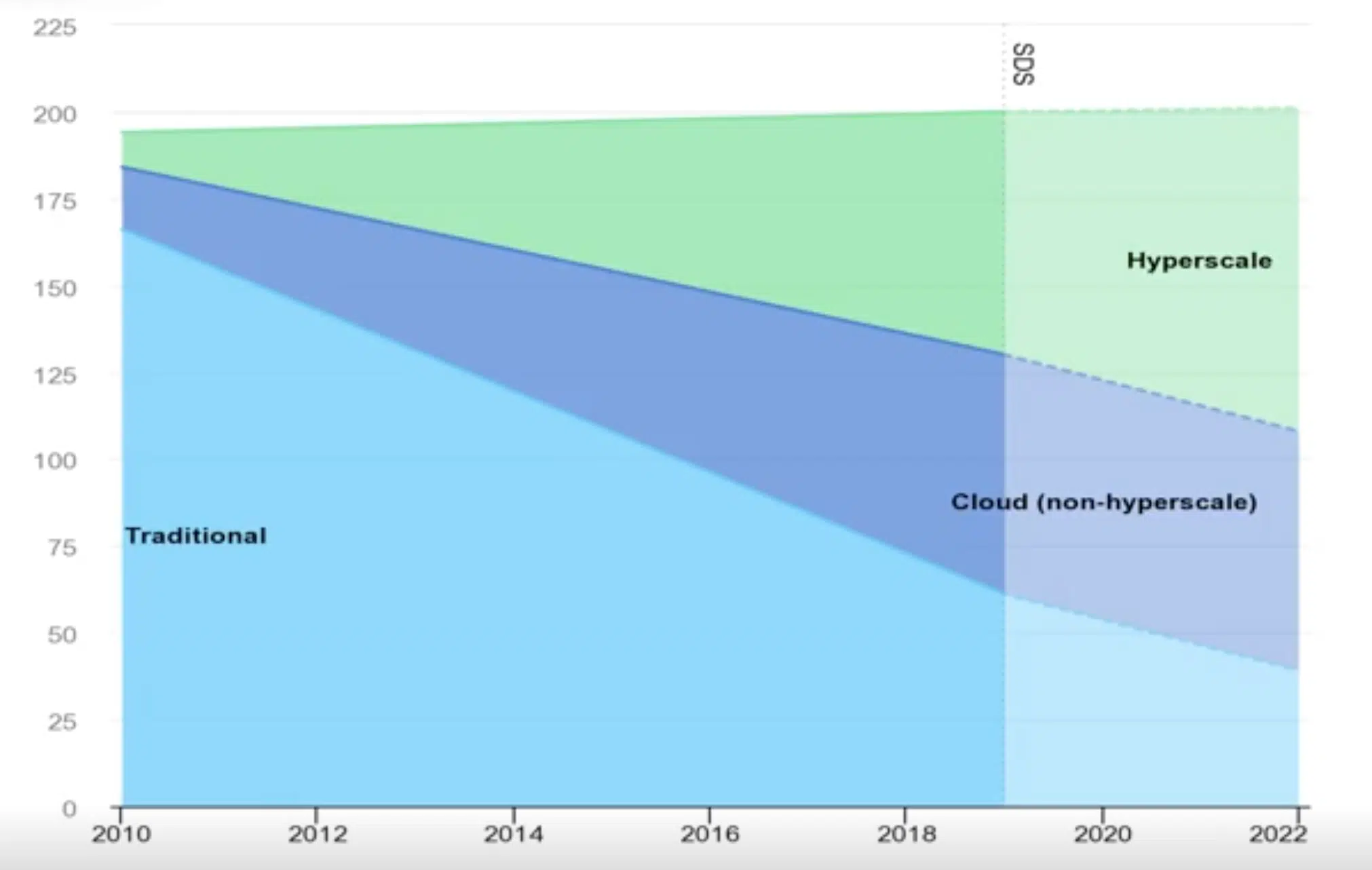

For data center liquid cooling, Boyd started with the cold plates. This is the most challenging and critical component of the entire system, and as we design various cold plate solutions for CPUs, GPUs, ASICs, memory, and optical modules, our engineers naturally want to understand how they interact with the rest of the system. As we follow the liquid path and solve the flow network from cold plates to loops to manifolds to CDUs, we believe that we can add even more value and optimization if the design is looked at holistically. Analyzing the entire flow network may also become increasingly important as the industry continues to debate whether data centers should liquid cool only the high-power chips while air cooling the rest, or liquid cool everything.

In Figure 4, we take a closer look at a complete flow network. Our team is very experienced with modeling and tuning the system. We can break out and analyze the individual key elements, such as the cold plates, the loops, the manifolds, or the CDUs. We can even drill down into the components within the CDUs, such as the pumps, the heat exchangers, or the fans. The flow network below shows a liquid-to-air version of our CDU, which is why you see a fan: in the liquid-to-air version, the heat is exhausted directly into the facility air via the fans. If it were a liquid-to-liquid version of our CDU, the facility water would carry away the heat and you would see only a pump. With our simulation, we can vary the boundary conditions set by the ambient air of the facility or by the facility water. Our flow network can also work in combination with CFD inputs for individual sub-components.

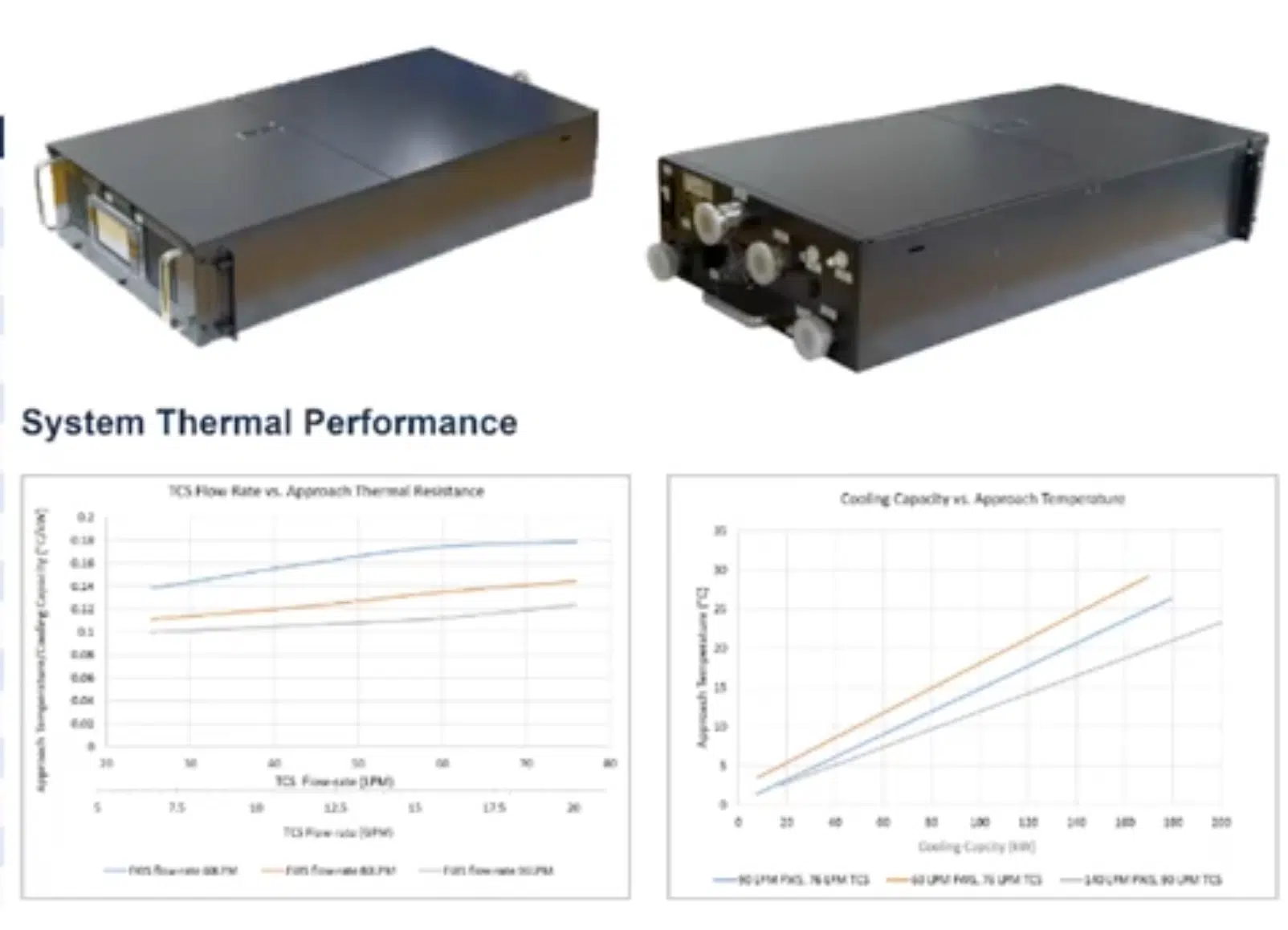
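To make the idea of solving a flow network concrete, here is a minimal sketch of a lumped model, not Boyd's simulation tool. It assumes a hypothetical quadratic pump curve, one series resistance standing in for the manifolds and CDU internals, and several parallel cold plate loops, each with a pressure drop proportional to the square of its flow. All names, coefficients, and units (kPa and L/min) are illustrative assumptions.

```python
import math


def solve_flow_network(pump_shutoff_kpa, pump_coeff, series_k, branch_ks):
    """Find the operating point of a simple pump-and-loops network.

    Assumed model (illustrative only):
      pump head:        dp = pump_shutoff_kpa - pump_coeff * Q**2
      series elements:  dp = series_k * Q**2       (manifolds, CDU internals)
      each branch i:    dp = branch_ks[i] * q_i**2 (one cold plate loop)
    Flows are in L/min and pressures in kPa.
    Returns (total_flow, list_of_branch_flows).
    """
    # Parallel branches share one pressure drop, so they combine into a
    # single equivalent resistance: K_par = 1 / (sum(1 / sqrt(k_i)))**2.
    conductance = sum(1.0 / math.sqrt(k) for k in branch_ks)
    k_parallel = 1.0 / conductance**2

    # Operating point: pump head equals the total system pressure drop.
    total_flow = math.sqrt(pump_shutoff_kpa / (pump_coeff + series_k + k_parallel))

    # Split the total flow across branches using the shared pressure drop.
    branch_dp = k_parallel * total_flow**2
    branch_flows = [math.sqrt(branch_dp / k) for k in branch_ks]
    return total_flow, branch_flows


# Hypothetical example: four loops, two of them more restrictive.
total, flows = solve_flow_network(200.0, 0.05, 0.03, [4.0, 4.0, 6.0, 9.0])
print(f"Total flow: {total:.1f} L/min")
for i, q in enumerate(flows, start=1):
    print(f"  loop {i}: {q:.1f} L/min")
```

Even in this toy model, the more restrictive loops receive less flow, which is the kind of imbalance a full network analysis is meant to expose before hardware is built. A production model would replace the quadratic terms with measured or CFD-derived curves for each component.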

Combining the flow network with CFD inputs lets us vary the performance of loops, pumps, and heat exchangers and solve them together. This helps us see where we can adjust the design to provide better optimization.

When Boyd entered the CDU market, our team looked closely at the current industry conditions. We researched future trends and demands, and we worked closely with our customers to understand their needs and challenges. Based on our research, our team has put together products in two categories. The first caters to data center facilities or other locations that may not have access to facility water. In this case, the CDU will be a liquid-to-air CDU. The second category is for facilities that do have access to facility water, and in this case the CDU will be a liquid-to-liquid CDU.

For liquid-to-air, we have a 10U modular unit that can either be racked, with up to four units per rack, or function as a standalone benchtop or under-the-table unit. We also have the capability to provide an RPU plus rear-door heat exchanger version of the liquid-to-air option. However, so far we have not seen strong demand for this option in the market. For the liquid-to-liquid options, we have the 4U in-rack unit as well as the larger in-row unit.

In Figure 5, we have the 4U liquid-to-liquid CDU. This unit only comes in a 19-inch version but can be adapted to fit within a 21-inch rack if needed. The cooling capacity of the liquid-to-liquid CDU scales with the pump, so we don’t necessarily need to increase the chassis size to improve performance. These are what we consider the workhorse units that will be deployed in data centers big and small, as long as they have access to facility water.

The in-row CDU is our largest form factor. It is designed to integrate directly with facilities that have purpose-built capabilities to fully utilize its capacity. It can also be combined into pods of two or four units through software to meet the redundancy requirements of data center customers.

Boyd has over 40 manufacturing and design locations globally. Highlighted here are the ones that are currently part of our liquid cooling product footprint. As demand for liquid cooling solutions grows, we’re also investing in additional capacity. This coverage allows us to work closely with our customers, no matter what time zone they’re in, throughout each step of the engagement process, from design to manufacturing to post-sale support. For larger products such as CDUs, we can also manufacture close to the point of consumption, which helps save both freight and lead time for our customers.
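The earlier point that liquid-to-liquid CDU capacity scales with the pump follows from the basic heat balance: heat removed equals mass flow times specific heat times coolant temperature rise. The sketch below estimates the coolant flow needed for a given rack load. It assumes plain water properties and a 10 K temperature rise purely for illustration; real systems often use glycol mixtures with different properties, and the function name is hypothetical.

```python
def required_flow_lpm(heat_load_w, delta_t_k,
                      cp_j_per_kg_k=4186.0, density_kg_per_m3=997.0):
    """Coolant flow (L/min) needed to carry heat_load_w at a given
    coolant temperature rise, from Q = m_dot * cp * delta_T.

    Default properties are approximate values for water (an assumption).
    """
    if delta_t_k <= 0:
        raise ValueError("Temperature rise must be positive")
    mass_flow_kg_s = heat_load_w / (cp_j_per_kg_k * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / density_kg_per_m3
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


# A 100 kW rack (the upper end quoted earlier) with an assumed 10 K rise.
flow = required_flow_lpm(100_000.0, 10.0)
print(f"Required flow: {flow:.0f} L/min")
```

At a fixed temperature rise, doubling the heat load doubles the required flow, which is why a larger pump can raise capacity without a larger chassis, provided the heat exchanger can keep up.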

Conclusion

The deployment of a Boyd CDU is the start of a partnership. We understand this technology is relatively new to many end users, and we offer a comprehensive program that provides onsite commissioning, training, preventative maintenance, and field support. We also have a global network of service providers that can provide timely onsite support to minimize any potential downtime in the future.

In conclusion, Boyd has decades of experience providing liquid cooling solutions across multiple industries. We have an excellent track record of reliable products. We can provide a holistic liquid cooling architectural design from chip to infrastructure, and Boyd has a very robust global manufacturing and design footprint to produce these large systems close to deployment. We look forward to hearing from you at online@boydcorp.com.