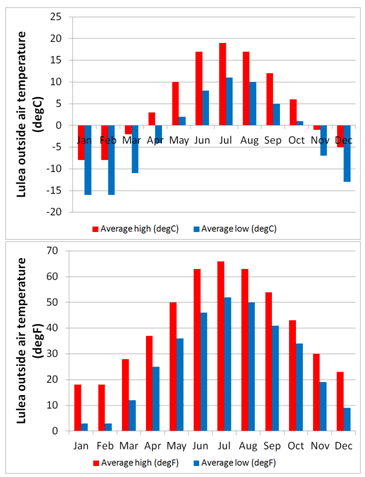

What do you call someone who doesn’t believe in Father Christmas? A rebel without a Claus. Right, that’s that out of the way. This week Facebook announced that it plans to build three 300,000 ft² data centers in Lulea, Sweden. The Lulea business agency, which helped attract Facebook to the city, is duly excited, as Facebook should be, since it looks set to capitalise on 10 months of free cooling air each year. With data centers accounting for between 1.1 and 1.5% of the entire world’s electricity use in recent years, reducing unnecessary energy consumption to minimise cost of ownership is a critical business driver. With about as much money spent on cooling a data center as on actually running it, any opportunity to reduce its PUE is attractive.

As a society, especially since the industrial revolution, we have taken a reductionist approach to quantifying the world around us. We use efficiency metrics to gauge how close we are to optimal. For data centers the PUE, the power usage effectiveness, is the ratio of the total energy consumed by the data center to the energy needed to run the IT equipment. The difference is due to things such as lighting but, predominantly, cooling. A PUE of 1.0 would mean that all energy consumed was used to drive the equipment directly. A PUE of 2.0 would mean that for every dollar spent on running the equipment, another dollar was spent on cooling it (plus lighting the room, etc.). The average used to be 2.0. Recently it’s crept down to 1.8. Google, Facebook, Yahoo and Microsoft have all reported values between 1.07 and 1.2.
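To make the arithmetic concrete, here is a minimal sketch of the PUE ratio as described above. The function names and the energy figures are illustrative assumptions of mine, not measurements from any real facility.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_per_it_unit(pue_value: float) -> float:
    """Energy spent on cooling, lighting etc. for each unit spent on IT equipment."""
    return pue_value - 1.0


# Hypothetical figures: 2,000 kWh drawn by the whole facility,
# of which 1,000 kWh reaches the IT equipment.
example_pue = pue(2000.0, 1000.0)
example_overhead = overhead_per_it_unit(example_pue)
```

With these made-up numbers the overhead equals the IT load, which is the "a dollar on cooling for every dollar on running" case.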

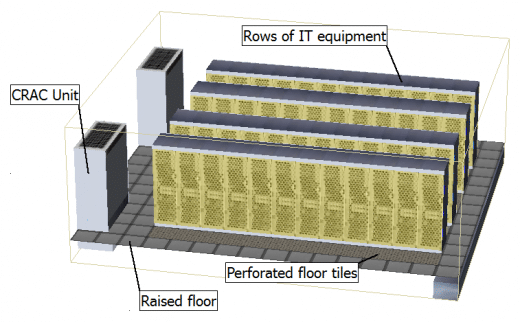

If the air temperature outside the (sealed) data center is lower than the required CRAC unit supply temperature, then you have the opportunity to use this external air instead. It will likely still have to be conditioned to control humidity and remove particulate contamination, but it won’t have to be cooled.
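The free cooling condition is just a temperature comparison, so it is easy to sketch. The snippet below is a rough illustration only: the function names, the supply temperature and the sample outside temperatures are all hypothetical, and it ignores the humidity and filtration conditioning mentioned above.

```python
def can_free_cool(outside_temp_c: float, supply_temp_c: float) -> bool:
    """True when outside air is colder than the required CRAC supply temperature."""
    return outside_temp_c < supply_temp_c


def free_cooling_fraction(outside_temps_c: list[float], supply_temp_c: float) -> float:
    """Fraction of temperature samples for which outside air could be used directly."""
    if not outside_temps_c:
        raise ValueError("need at least one temperature sample")
    eligible = sum(1 for t in outside_temps_c if can_free_cool(t, supply_temp_c))
    return eligible / len(outside_temps_c)


# Hypothetical samples (deg C) and a hypothetical 18 deg C supply temperature;
# these are NOT real climate data for any site.
samples = [-10.0, 5.0, 25.0]
fraction = free_cooling_fraction(samples, 18.0)
```

Fed with hourly weather data for a candidate site, the same comparison gives a first estimate of how much of the year free cooling is on offer.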

FloVENT is used extensively for such data center cooling simulation applications. We even used it to help design Mentor’s own new data centers! Working with APC, we introduced an innovative FloVENT ‘capture index’ feature to help identify the cooling effectiveness of a proposed data center layout. It is yet another step in taking simulation beyond simply indicating ‘what is happening’ to understanding ‘why it is happening’, and thus getting designers and operators closer to ‘how can I get it to function properly?’ APC is one of a large number of FloVENT users, not all of whom I am allowed to mention, which is a shame in the context of this blog.

28th October 2011, Ross-on-Wye.